Generative AI: Revolutionizing Data with Synthetic Generation & Augmentation

Explore how Generative AI is transforming data challenges by creating synthetic datasets. Learn about its role in overcoming scarcity, imbalance, and privacy concerns, accelerating AI innovation, and democratizing access to high-quality training resources.

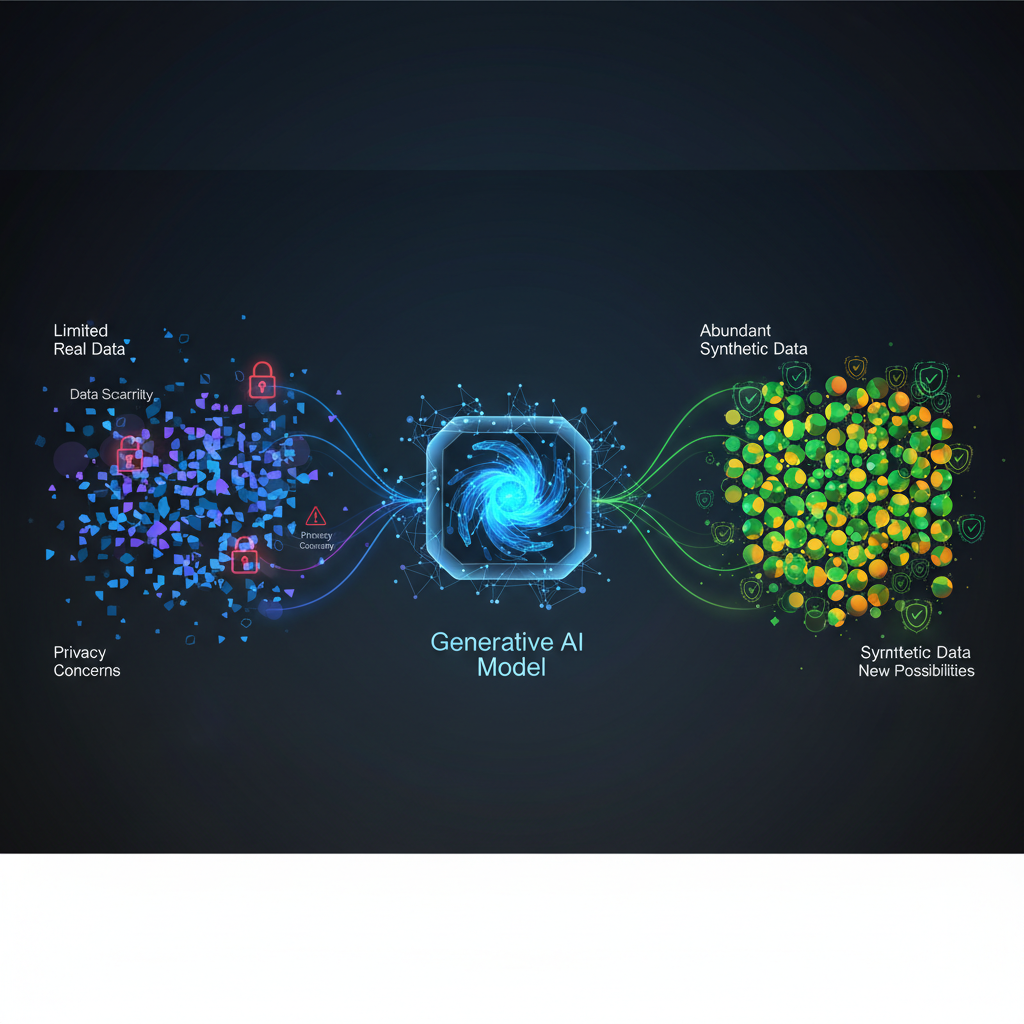

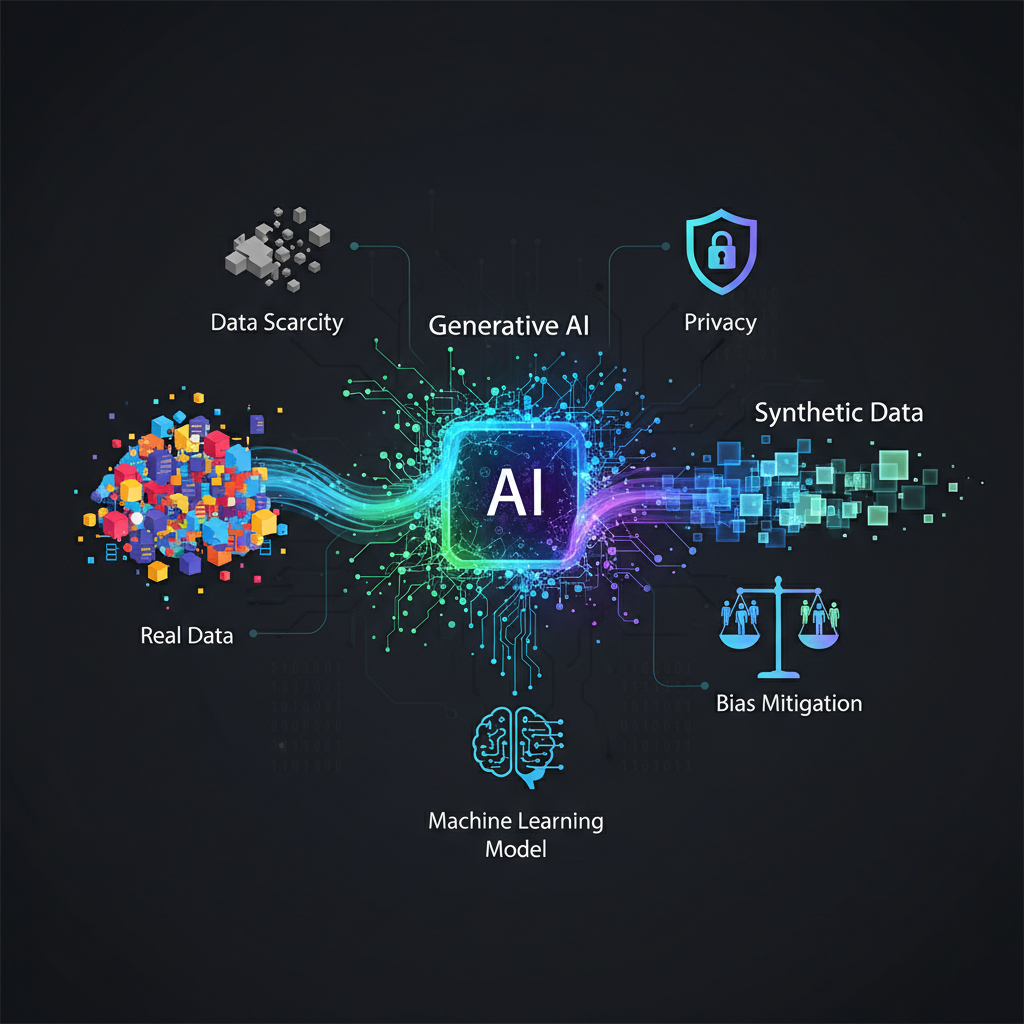

The lifeblood of any successful machine learning model is data. Yet, the journey from raw information to a perfectly curated dataset is often fraught with challenges: scarcity, imbalance, privacy concerns, and the sheer cost of collection and labeling. What if we could conjure data out of thin air – data that mirrors the statistical properties of the real world, but without its inherent limitations?

This is the promise of Generative AI for Synthetic Data Generation and Augmentation, a field that has rapidly moved from academic curiosity to a cornerstone of modern AI development. With the explosion of sophisticated generative models, we're witnessing a paradigm shift in how we approach data, offering unprecedented opportunities to accelerate innovation, enhance privacy, and democratize access to high-quality training resources.

The Data Dilemma: Why Synthetic Data Matters Now More Than Ever

In an increasingly data-driven world, the irony is that high-quality, usable data remains a bottleneck for many AI initiatives. Consider these common hurdles:

- Data Scarcity: For rare events (e.g., specific medical conditions, financial fraud, manufacturing defects), real-world data is inherently limited. Training robust models on such sparse data is incredibly challenging.

- Privacy and Regulations: Strict regulations like GDPR, CCPA, and HIPAA make it difficult, if not impossible, to share or even use real-world sensitive data without extensive anonymization, which often degrades data utility.

- Bias and Imbalance: Real-world datasets often reflect existing societal biases or contain imbalanced class distributions, leading to unfair or underperforming models.

- Cost and Time: Collecting, labeling, and cleaning massive datasets is an expensive, time-consuming, and labor-intensive process.

- Edge Cases: For critical systems like autonomous vehicles, testing every conceivable edge case in the real world is impractical and dangerous.

Generative AI offers a compelling solution to these problems by creating synthetic data – artificial data that retains the statistical characteristics, patterns, and relationships of real data, but without being a direct copy of any specific real-world instance. This makes it a powerful tool for overcoming data limitations and fostering more robust, ethical, and efficient AI systems.

The Architects of Synthetic Realities: Core Generative Models

The ability to create high-fidelity synthetic data hinges on advances in generative AI. Several key architectures have emerged as frontrunners in this domain, each with its own strengths and mechanisms.

Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, revolutionized generative modeling. They operate on an adversarial principle, pitting two neural networks against each other:

- Generator (G): This network takes random noise as input and tries to produce synthetic data samples that resemble real data.

- Discriminator (D): This network acts as a critic, receiving both real data and synthetic data from the Generator. Its task is to distinguish between the two, classifying samples as "real" or "fake."

The Generator's goal is to fool the Discriminator into thinking its synthetic outputs are real, while the Discriminator's goal is to accurately identify fakes. This continuous competition drives both networks to improve: the Generator learns to create increasingly realistic data, and the Discriminator becomes better at detecting subtle differences. In the idealized case, training reaches an equilibrium where the Discriminator can no longer distinguish real from synthetic data and outputs 0.5 for every sample. In practice, training is usually stopped based on sample quality or evaluation metrics, because GAN losses rarely settle into a clean convergence signal.
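In the original formulation, the two networks play a minimax game over the value function V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))], which D maximizes and G minimizes. The sketch below computes the per-sample losses in plain Python, assuming scalar discriminator outputs instead of real networks, and using the "non-saturating" generator loss (-log D(G(z))) that the original paper suggests in place of minimizing log(1 - D(G(z))), because it gives stronger gradients early in training.

```python
import math

EPS = 1e-12  # guards against log(0) when the discriminator is fully confident


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy for D: real samples are labeled 1, generated ones 0.

    d_real is D(x) for a real sample; d_fake is D(G(z)) for a generated sample.
    """
    return -(math.log(d_real + EPS) + math.log(1.0 - d_fake + EPS))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss: low when D scores the fake as real."""
    return -math.log(d_fake + EPS)


# A discriminator that is winning: real scored 0.9, fake scored 0.1.
print(round(discriminator_loss(0.9, 0.1), 4))  # 0.2107
print(round(generator_loss(0.1), 4))           # 2.3026

# At the idealized equilibrium D outputs 0.5 for everything, so D's loss is 2 * log(2).
print(round(discriminator_loss(0.5, 0.5), 4))  # 1.3863
```

A confident discriminator means a low D loss and a high G loss; the gradients of these two losses are what push the networks in opposite directions during training.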

Strengths: GANs are renowned for their ability to produce incredibly realistic samples, especially in image generation (e.g., photorealistic faces, objects). Challenges: Training GANs can be notoriously unstable, often suffering from issues like mode collapse (where the Generator produces only a limited variety of samples) or vanishing gradients. Variants: Many GAN variants exist, such as Conditional GANs (cGANs) for controlled generation (e.g., generating a specific type of image), StyleGANs for high-resolution image synthesis with style control, and BigGAN for large-scale, diverse image generation.

Variational Autoencoders (VAEs)

VAEs offer a different, more probabilistic approach to generative modeling. They consist of two main parts:

- Encoder: This network takes an input data sample and compresses it into a lower-dimensional latent space representation. Unlike traditional autoencoders, VAEs learn to map inputs to a probability distribution (mean and variance) in the latent space, rather than a single point.

- Decoder: This network takes a sample from the latent space (drawn from the learned distribution) and reconstructs it back into the original data space.

The VAE is trained to minimize the reconstruction error (how well the decoded output matches the original input) while keeping the learned latent distributions close to a prior distribution, typically a standard normal, as measured by the Kullback-Leibler (KL) divergence. Together, these two terms form the negative evidence lower bound (ELBO). Because the latent space is regularized toward the prior, generating new samples is simple: draw latent vectors from the prior and pass them through the decoder.
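The two training terms and the generation step can be sketched in plain Python. This assumes a diagonal Gaussian encoder output parameterized by mean and log-variance (a common convention), a standard normal prior, and a squared-error reconstruction term (which corresponds to a Gaussian decoder up to constants and weighting). A real VAE computes these on tensors inside a framework such as PyTorch or TensorFlow, and the encoder values below are hypothetical.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), one latent dimension at a time.

    In an autodiff framework this form lets gradients flow into mu and log_var
    (the "reparameterization trick").
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, log_var))


def vae_loss(x, x_recon, mu, log_var):
    """Negative ELBO, using squared error as the reconstruction term."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return reconstruction + kl_to_standard_normal(mu, log_var)


rng = random.Random(0)

# Hypothetical encoder output for one input: a 2-D diagonal Gaussian in latent space.
mu, log_var = [0.3, -1.2], [-0.5, 0.1]
z = reparameterize(mu, log_var, rng)  # latent code passed to the decoder during training
print(kl_to_standard_normal(mu, log_var))

# Generation after training: sample from the prior N(0, I) and decode.
z_new = [rng.gauss(0.0, 1.0) for _ in range(2)]
# x_new = decoder(z_new)  # 'decoder' stands for the trained network and is not defined here
```

The KL term is zero only when the encoder outputs exactly the prior (mean 0, log-variance 0); any deviation is penalized, which is what keeps the latent space smooth enough to sample from.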

Strengths: VAEs are generally more stable to train than GANs and provide a structured, continuous latent space, which is useful for interpolation and understanding data relationships. Challenges: Generated samples from VAEs can sometimes appear blurrier or less "crisp" compared to those from GANs, particularly for complex data like images.

Diffusion Models

Diffusion models are the latest sensation in generative AI, powering state-of-the-art image generation systems such as DALL-E 2 and Stable Diffusion (Midjourney is also widely reported to be diffusion-based). Their mechanism is inspired by non-equilibrium thermodynamics:

- Forward Diffusion Process: This process gradually adds Gaussian noise to an input data sample over many small steps (often on the order of a thousand), slowly transforming it into pure noise. This process is fixed and doesn't require learning.

- Reverse Diffusion Process: This is the learned part. A neural network is trained to reverse the forward process, starting from pure noise and iteratively denoising it to reconstruct a coherent data sample. The model learns to predict the noise that was added at each step, or directly predict the denoised image.

During generation, the model starts with random noise and applies the learned reverse diffusion steps, gradually refining the noise into a high-quality synthetic sample.
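Because each forward step adds Gaussian noise, the noisy sample at any step t can be computed in closed form directly from the original sample, which is what makes training efficient. The sketch below assumes the linear noise schedule from the original DDPM paper (1,000 steps, beta rising from 1e-4 to 0.02) and uses a toy list in place of an image tensor. It also shows why predicting the noise is enough: given the exact noise, the clean sample can be recovered algebraically, and a trained network supplies an estimate of that noise.

```python
import math
import random


def linear_beta_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced per-step noise variances beta_1..beta_T."""
    return [beta_start + (beta_end - beta_start) * t / (T - 1) for t in range(T)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s): how much signal survives to step t."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def q_sample(x0, t, alpha_bar, noise):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]


def predict_x0(x_t, t, alpha_bar, predicted_noise):
    """Invert q_sample given a noise estimate (in practice, the output of the trained network)."""
    a = alpha_bar[t]
    return [(x - math.sqrt(1.0 - a) * n) / math.sqrt(a) for x, n in zip(x_t, predicted_noise)]


rng = random.Random(42)
alpha_bar = cumulative_alphas(linear_beta_schedule())
x0 = [0.5, -0.25, 1.0]                      # a toy 3-value "data sample"
noise = [rng.gauss(0.0, 1.0) for _ in x0]
x_mid = q_sample(x0, 500, alpha_bar, noise)  # partially noised
x_end = q_sample(x0, 999, alpha_bar, noise)  # almost pure noise
print(alpha_bar[0], alpha_bar[-1])
```

During training, the network sees x_t and t and is asked to output the noise; the squared error between its prediction and the true noise is the standard simplified DDPM objective.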

Strengths: Diffusion models are renowned for producing exceptionally high-quality, diverse, and realistic outputs. They are often more stable to train than GANs and excel at conditional generation (e.g., text-to-image synthesis). Challenges: They can be computationally intensive for both training and inference, especially when generating very high-resolution outputs, requiring many sequential denoising steps.

Autoregressive Models

While often associated with Large Language Models (LLMs) like GPT, autoregressive models can also be adapted for synthetic data generation, particularly for sequential or tabular data.

- Mechanism: These models predict the next element in a sequence based on all preceding elements. For text, this means predicting the next word given the previous words. For tabular data, a row can be treated as a sequence of features, and the model learns to predict the value of each feature given the values of previous features in that row.
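The chain-rule idea behind autoregressive tabular generation can be illustrated without any neural network. The sketch below replaces the learned model with simple frequency tables of P(value | preceding values), fitted on a tiny categorical table whose columns and rows are invented for illustration. A count table can only reproduce feature combinations it has already seen, which is exactly why practical autoregressive synthesizers use a neural network that generalizes (and why memorization is a privacy concern).

```python
import random
from collections import Counter, defaultdict


def fit_autoregressive(rows):
    """Estimate P(column i | columns 0..i-1) by counting, one table per column position.

    Each table maps the tuple of preceding values to a Counter of next values,
    i.e. the chain-rule factorization with frequency (maximum-likelihood) estimates.
    """
    n_cols = len(rows[0])
    tables = [defaultdict(Counter) for _ in range(n_cols)]
    for row in rows:
        for i in range(n_cols):
            tables[i][tuple(row[:i])][row[i]] += 1
    return tables


def sample_row(tables, rng):
    """Generate one row left to right, conditioning each value on everything before it."""
    row = []
    for table in tables:
        counts = table[tuple(row)]
        values, weights = zip(*counts.items())
        row.append(rng.choices(values, weights=weights, k=1)[0])
    return row


# Hypothetical columns: age band, plan type, churned?
real = [
    ["18-30", "basic", "yes"],
    ["18-30", "basic", "no"],
    ["31-50", "premium", "no"],
    ["31-50", "basic", "no"],
    ["51+", "premium", "no"],
]
rng = random.Random(1)
tables = fit_autoregressive(real)
synthetic = [sample_row(tables, rng) for _ in range(5)]
print(synthetic)
```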

Strengths: Excellent at capturing long-range dependencies and complex sequential patterns. Challenges: Can be slow for generation as each element must be generated sequentially. May struggle with complex, non-sequential relationships inherent in some tabular datasets.

Tabular Data Specific Models

Tabular data, with its mix of categorical, numerical, discrete, and continuous features, presents unique challenges for generative models. Specialized approaches have emerged:

- CTGAN (Conditional Tabular GAN): Specifically designed to handle the mixed data types and complex correlations found in tabular datasets. It uses mode-specific normalization to model continuous columns with multimodal distributions, and a conditional generator trained with a "training-by-sampling" strategy so that rare categories in discrete columns are adequately represented.

- TVAE (Tabular VAE): A VAE variant optimized for tabular data, often incorporating techniques to handle mixed data types effectively.

- Synthesizer Frameworks (e.g., SDV - Synthetic Data Vault): These are comprehensive libraries that encapsulate various generative models tailored for tabular data. They provide tools not only for generation but also for evaluating the quality and privacy of the synthetic data.
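The Gaussian copula is one of the classical (non-deep) models that frameworks such as SDV package alongside CTGAN and TVAE. The idea is to keep each column's own distribution and model the dependence between columns as a correlated normal distribution on a transformed scale. Below is a minimal from-scratch sketch for two numeric columns, using empirical marginals and invented data; it illustrates the technique and is not SDV's implementation or API.

```python
import random
from statistics import NormalDist, mean, pstdev

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def normal_scores(col):
    """Map each value to a standard-normal score through its rank (empirical CDF)."""
    n = len(col)
    order = sorted(range(n), key=lambda i: col[i])
    scores = [0.0] * n
    for rank, i in enumerate(order, start=1):
        scores[i] = STD_NORMAL.inv_cdf(rank / (n + 1))  # rank / (n + 1) stays inside (0, 1)
    return scores


def pearson(a, b):
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b)) / len(a)
    return cov / (pstdev(a) * pstdev(b))


def gaussian_copula_sample(x_col, y_col, n, rng):
    """Fit a two-column Gaussian copula with empirical marginals and draw n synthetic rows."""
    rho = pearson(normal_scores(x_col), normal_scores(y_col))  # dependence on the normal scale
    xs, ys = sorted(x_col), sorted(y_col)

    def inverse_ecdf(sorted_col, u):
        return sorted_col[min(int(u * len(sorted_col)), len(sorted_col) - 1)]

    rows = []
    for _ in range(n):
        e1, e2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        z1 = e1
        z2 = rho * e1 + max(0.0, 1.0 - rho * rho) ** 0.5 * e2  # corr(z1, z2) = rho
        rows.append((inverse_ecdf(xs, STD_NORMAL.cdf(z1)), inverse_ecdf(ys, STD_NORMAL.cdf(z2))))
    return rows


# Hypothetical real data: income (thousands) and monthly spend, positively related.
rng = random.Random(7)
income = [rng.uniform(20, 120) for _ in range(200)]
spend = [0.3 * x + rng.gauss(0, 5) for x in income]
synthetic = gaussian_copula_sample(income, spend, 500, rng)
```

Because the marginals here are empirical, every synthetic value is copied from an observed value in its column; production synthesizers typically fit parametric or smoothed marginals instead, which both generalizes better and reduces that kind of leakage.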

Practical Applications: Where Synthetic Data Shines

The ability to generate high-quality synthetic data unlocks a plethora of practical applications across industries, addressing critical bottlenecks and driving innovation.

1. Data Augmentation

Data augmentation is perhaps the most straightforward and widely adopted use of synthetic data. By generating diverse variations of existing data, we can significantly expand training datasets, making models more robust and generalizable.

- Computer Vision: Imagine training a model to detect rare medical anomalies in X-rays or specific types of defects in manufacturing. Real-world examples are scarce. Generative models can create synthetic images with different angles, lighting conditions, noise levels, occlusions, and even entirely new variations of the anomalies, effectively multiplying the training data. For autonomous vehicles, synthetic environments and scenarios (e.g., extreme weather, unusual road conditions, rare pedestrian behaviors) can be generated to train and test perception systems safely.

- Natural Language Processing (NLP): For tasks like sentiment analysis, text classification, or question answering, generative models can create paraphrases, rephrase sentences, generate domain-specific terminology, or even create entire synthetic dialogues. This is particularly valuable for low-resource languages or highly specialized domains where annotated text data is limited.
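Generative augmentation is often combined with, and compared against, classic label-preserving transformations. As a point of reference, here is a minimal sketch of that classic baseline (random flips, brightness shifts, and pixel noise) on a tiny grayscale image stored as nested lists. The image and parameter values are arbitrary; a real pipeline would use an image library and, for the generative variant, a trained model to produce the new samples.

```python
import random

Image = list[list[int]]  # grayscale pixel values in 0..255, row-major


def clip(p: float) -> int:
    return int(min(255, max(0, round(p))))


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def shift_brightness(img: Image, delta: int) -> Image:
    return [[clip(p + delta) for p in row] for row in img]


def add_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in img]


def augment(img: Image, n_variants: int, rng: random.Random) -> list[Image]:
    """Produce n_variants randomly transformed copies; the label of img is assumed unchanged."""
    variants = []
    for _ in range(n_variants):
        out = hflip(img) if rng.random() < 0.5 else [row[:] for row in img]
        out = shift_brightness(out, rng.randint(-30, 30))
        out = add_noise(out, sigma=8.0, rng=rng)
        variants.append(out)
    return variants


rng = random.Random(0)
defect_patch = [[10, 50, 200], [20, 220, 240], [0, 30, 90]]  # hypothetical 3x3 image
augmented = augment(defect_patch, n_variants=4, rng=rng)
```

Transformations like these only remix what is already in the image; a generative model can in principle produce genuinely new variations, such as a defect shape that never appeared in the training set.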

2. Privacy Preservation

One of the most impactful applications of synthetic data is in addressing privacy concerns, allowing organizations to share and analyze data without compromising sensitive information.

- Healthcare: Hospitals and research institutions can generate synthetic patient records (including demographics, diagnoses, treatments, and outcomes) that mimic the statistical properties of real patient data. These synthetic datasets can then be shared with researchers, used for model development, or even used for educational purposes without exposing actual patient identities, helping organizations work within regulations like HIPAA, provided the generation process does not leak individual records.

- Finance: Financial institutions can create synthetic transaction data for fraud detection model development, risk assessment, or anti-money laundering (AML) analysis. This allows for collaborative research and development with external partners without revealing confidential customer transactions.

- Government/Public Sector: Agencies can share synthetic yet realistic demographic or behavioral data for policy analysis, urban planning, or public health research, fostering data-driven decision-making while protecting individual privacy.
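Formal privacy guarantees usually come from differential privacy (DP), and the simplest DP synthesizer releases a noisy histogram and samples from it. The sketch below assumes a single categorical column with a publicly known list of categories (deriving the list from the data would itself leak information) and adds Laplace noise with scale 1/epsilon, since one histogram count changes by at most 1 when a single record is added or removed. Clipping and sampling afterwards are post-processing, so they do not weaken the guarantee. The diagnosis labels are hypothetical, and practical DP synthesizers model many columns jointly.

```python
import random
from collections import Counter


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Laplace(0, scale): the difference of two independent Exp(1) draws, rescaled."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def dp_histogram_synth(values, categories, epsilon, n_out, rng):
    """Release epsilon-DP noisy counts for a fixed category list, then sample from them."""
    counts = Counter(values)
    noisy = [max(0.0, counts[c] + laplace_noise(1.0 / epsilon, rng)) for c in categories]
    if sum(noisy) == 0.0:  # every noisy count clipped to zero: fall back to uniform
        noisy = [1.0] * len(categories)
    return rng.choices(categories, weights=noisy, k=n_out)


# Hypothetical sensitive column from patient records.
diagnoses = ["flu"] * 50 + ["asthma"] * 30 + ["diabetes"] * 15 + ["rare_condition"] * 5
categories = ["flu", "asthma", "diabetes", "rare_condition"]  # public, fixed in advance
rng = random.Random(11)
synthetic = dp_histogram_synth(diagnoses, categories, epsilon=1.0, n_out=100, rng=rng)
print(Counter(synthetic))
```

Smaller epsilon means more noise and stronger privacy; rare categories are the first to be distorted, which illustrates the privacy-utility trade-off in miniature.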

3. Addressing Data Imbalance

Many real-world datasets suffer from severe class imbalance, where one class is vastly underrepresented (e.g., fraud cases vs. legitimate transactions, rare disease diagnoses). Models trained on such data tend to perform poorly on the minority class.

- Fraud Detection: Instead of relying solely on the few real fraud cases, generative models can synthesize additional realistic fraud examples to rebalance the dataset, which can substantially improve the model's ability to detect fraudulent activity.

- Manufacturing Quality Control: If defects are rare, synthetic defect images can be generated to provide sufficient training data for automated visual inspection systems, leading to more accurate defect detection.
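Deep generative models are one way to synthesize minority-class examples. A much older and simpler baseline, SMOTE (Synthetic Minority Over-sampling Technique), creates new minority points by interpolating between a minority sample and one of its nearest minority-class neighbors. The sketch below implements that idea in plain Python on hypothetical, already-scaled fraud features; it is a classical interpolation method rather than a generative network, and libraries such as imbalanced-learn provide tested implementations.

```python
import math
import random


def smote(minority, n_new, k, rng):
    """Create n_new points on segments between minority samples and their k nearest minority neighbours.

    Features are assumed to be on comparable scales, since neighbours use Euclidean distance.
    """
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        partner = rng.choice(neighbours)
        lam = rng.random()  # how far to move from base toward partner, in [0, 1)
        synthetic.append(tuple(b + lam * (q - b) for b, q in zip(base, partner)))
    return synthetic


# Hypothetical scaled features (e.g. risk score, normalized amount) for the few fraud cases.
fraud = [(0.90, 0.70), (0.85, 0.95), (0.95, 0.60), (0.80, 0.80), (0.88, 0.75)]
rng = random.Random(5)
new_fraud = smote(fraud, n_new=10, k=3, rng=rng)
```

Because every new point lies between two real minority points, SMOTE cannot invent fraud patterns outside the observed region; that limitation is one motivation for learned generative approaches.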

4. System Testing & Simulation

For complex, safety-critical systems, synthetic data can create virtual environments for rigorous testing and simulation.

- Autonomous Driving: Beyond augmenting perception data, generative models can create entire synthetic driving simulations, including dynamic traffic, diverse weather conditions, and "edge cases" that are difficult or dangerous to encounter in the real world. This allows for extensive testing of decision-making algorithms before real-world deployment.

- Robotics: Robots can be trained and tested in virtual environments using synthetic sensor data (e.g., lidar, camera, tactile sensors). This reduces wear and tear on physical hardware, accelerates development cycles, and allows for testing in hazardous conditions.
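Learned generative models can produce full sensor streams, but the scenario layer of a test suite is often just structured sampling. A minimal sketch, with entirely hypothetical parameters and weights, of drawing randomized test scenarios while deliberately oversampling a rare edge case far beyond how often it would occur on real roads:

```python
import random
from dataclasses import dataclass

WEATHER = ["clear", "rain", "fog", "snow"]
WEATHER_WEIGHTS = [0.70, 0.15, 0.10, 0.05]  # hypothetical everyday frequencies


@dataclass
class Scenario:
    weather: str
    time_of_day: str
    pedestrians: int
    jaywalker: bool  # the rare edge case we want to test heavily


def sample_scenario(rng: random.Random, edge_case_rate: float) -> Scenario:
    """Draw one randomized scenario; edge_case_rate sets how often the rare event is injected."""
    return Scenario(
        weather=rng.choices(WEATHER, weights=WEATHER_WEIGHTS, k=1)[0],
        time_of_day=rng.choice(["day", "dusk", "night"]),
        pedestrians=rng.randint(0, 20),
        jaywalker=rng.random() < edge_case_rate,
    )


rng = random.Random(3)
# Oversample the edge case: roughly 30% of generated scenarios include it.
suite = [sample_scenario(rng, edge_case_rate=0.3) for _ in range(1000)]
print(sum(s.jaywalker for s in suite), "scenarios with a jaywalker")
```

Each sampled scenario would then be handed to a simulator (and, where used, a generative model) to render the actual sensor data.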

5. Model Development & Benchmarking

Synthetic data provides a controlled environment for research and development.

- Algorithm Comparison: Researchers can create standardized, reproducible synthetic datasets with known properties to benchmark and compare the performance of different machine learning algorithms without being constrained by proprietary or constantly changing real-world data.

- Hyperparameter Tuning: Synthetic data can be used for initial hyperparameter tuning, allowing for faster iteration cycles before deploying models on real data.
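A benchmark dataset with known properties can be as simple as the sketch below: a hypothetical two-feature classification problem whose true decision rule and label-noise rate are chosen up front, so the best achievable accuracy is known in advance and the same seed always reproduces the same data. Libraries such as scikit-learn offer richer generators, for example `make_classification`.

```python
import random


def make_benchmark(n, flip_rate, seed):
    """Two-feature binary classification data with a known linear boundary and label noise.

    The true rule is y = 1 if x1 + x2 > 1; a fraction flip_rate of labels is flipped,
    so no classifier can expect to beat about 1 - flip_rate accuracy.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x1, x2 = rng.random(), rng.random()
        y = int(x1 + x2 > 1.0)
        if rng.random() < flip_rate:
            y = 1 - y
        data.append((x1, x2, y))
    return data


def accuracy_of_true_rule(data):
    """Accuracy of the known ground-truth rule: the ceiling any algorithm is compared against."""
    return sum(int(x1 + x2 > 1.0) == y for x1, x2, y in data) / len(data)


data = make_benchmark(1000, flip_rate=0.1, seed=42)
print(accuracy_of_true_rule(data))
```

Because the ceiling is known, a benchmark like this can reveal whether an algorithm is underfitting (well below the ceiling) or whether an evaluation setup is leaking labels (suspiciously above it).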

6. Data Exploration & Understanding

Generative models can help data scientists gain deeper insights into their data. By generating synthetic samples, one can visually inspect the learned distributions, identify hidden relationships, and understand the impact of different features, especially in complex, high-dimensional datasets.

Navigating the Synthetic Landscape: Challenges and Future Directions

While the potential of synthetic data is immense, its widespread adoption still faces several challenges that are active areas of research:

- Fidelity vs. Utility: The primary challenge is ensuring that synthetic data is not just "realistic" in appearance but also "useful" – meaning models trained on it perform as well as, or even better than, models trained on real data. This requires robust evaluation metrics beyond mere visual inspection.

- Evaluation Metrics: Developing comprehensive and standardized metrics to assess the quality, privacy, and utility of synthetic data is crucial. This includes statistical similarity (how well synthetic data matches real data distributions), machine learning utility (how well models trained on synthetic data generalize to real data), and quantifiable privacy guarantees.

- Privacy Guarantees: Synthetic data is not automatically private: a generative model can memorize and reproduce records from its training data, and quantifying the level of protection is complex. Integrating concepts like Differential Privacy directly into generative models is an active research area aimed at providing provable privacy guarantees.

- Generalization to Real-World Data: A critical question is whether models trained solely on synthetic data can generalize effectively to unseen real-world data. Bridging this "synthetic-to-real" gap is essential for practical adoption.

- Ethical Considerations: Synthetic data, if generated from biased real data, can perpetuate and even amplify those biases. Ensuring fairness and preventing the generation of harmful or misleading content (e.g., deepfakes, misinformation) are paramount ethical considerations.

- Scalability: Generating vast quantities of high-quality synthetic data efficiently, especially for complex data types or large-scale simulations, remains a computational challenge.

- Multimodal Data Generation: The next frontier involves generating synthetic data that spans multiple modalities simultaneously (e.g., synthetic video with corresponding audio, text descriptions, and sensor readings), which is crucial for advanced AI systems.
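Two of the simpler evaluation checks can be sketched directly: a per-column two-sample Kolmogorov-Smirnov (KS) statistic for statistical similarity, and the distance from each synthetic record to its closest real record (DCR), a common heuristic for spotting near-copies of training data. Both are illustrative plain-Python sketches. A DCR of zero flags a likely leak, but a large DCR is not a formal privacy guarantee. Machine-learning utility is typically measured separately, for example by training on synthetic data and testing on held-out real data.

```python
import math


def ks_statistic(real, synth):
    """Two-sample KS statistic: the largest gap between the two empirical CDFs (0 = identical)."""
    real, synth = sorted(real), sorted(synth)
    i = j = 0
    d = 0.0
    while i < len(real) and j < len(synth):
        x = min(real[i], synth[j])
        # Advance both pointers past x so i/len and j/len are the ECDF values at x.
        while i < len(real) and real[i] <= x:
            i += 1
        while j < len(synth) and synth[j] <= x:
            j += 1
        d = max(d, abs(i / len(real) - j / len(synth)))
    return d


def distance_to_closest_record(synth_rows, real_rows):
    """For each synthetic row, the Euclidean distance to its nearest real row (0.0 = exact copy)."""
    return [min(math.dist(s, r) for r in real_rows) for s in synth_rows]


print(ks_statistic([1, 2, 3, 4], [2, 3, 4, 5]))
print(distance_to_closest_record([(1.0, 2.0), (5.0, 5.0)], [(1.0, 2.0), (4.0, 5.0)]))
```

In practice, the KS statistic is computed per numeric column and summarized across columns, while DCR is usually compared against the same distances computed between a real training set and a real holdout set.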

Empowering the Practitioner: Resources and Tools

For those eager to dive into the world of synthetic data, a growing ecosystem of tools and resources is available:

- Libraries/Frameworks:

  - SDV (Synthetic Data Vault): A powerful Python library specifically designed for tabular synthetic data generation, offering various models (CTGAN, TVAE, Gaussian Copula) and comprehensive evaluation tools.

  - YData-Synthetic: Another Python library focused on synthetic data generation, particularly for tabular data.

  - Faker: While not an ML-based generative model, Faker is invaluable for generating realistic dummy data (e.g., names, addresses, emails) to populate databases or create placeholders, complementing ML-generated synthetic data.

  - TensorFlow/PyTorch: The foundational deep learning frameworks for implementing and experimenting with GANs, VAEs, and Diffusion Models from scratch or using existing implementations.

  - Hugging Face Diffusers: An excellent library for easily experimenting with and deploying state-of-the-art diffusion models for image generation.

- Research Papers: Stay updated by following top-tier machine learning conferences such as NeurIPS, ICML, ICLR, CVPR, and AAAI, which consistently feature groundbreaking research on generative models and synthetic data.

- Online Courses/Tutorials: Platforms like Coursera, Udacity, and fast.ai offer courses on generative AI, often including practical examples of synthetic data generation. Look for courses that emphasize hands-on application.

- Blogs & Articles: Many companies and research groups specializing in synthetic data (e.g., Gretel.ai, Mostly AI, Synthesis AI) publish insightful blog posts and articles detailing their advancements and practical use cases.

Conclusion

Generative AI for synthetic data generation and augmentation is more than just a passing trend; it's a fundamental shift in how we approach data in the age of AI. By offering solutions to data scarcity, privacy concerns, and the high costs of data acquisition, synthetic data is poised to unlock new frontiers in machine learning development across virtually every industry. As generative models continue to evolve in sophistication and fidelity, the ability to conjure realistic, useful, and privacy-preserving data will become an indispensable skill for AI practitioners and a critical enabler for the next generation of intelligent systems. The future of AI is not just about building better models; it's about building better data, and generative AI is leading the charge.