AI-Powered Test Generation: Revolutionizing QA for Modern Software & AI Systems

Discover how AI-powered test generation and self-healing test suites are transforming quality assurance. Move beyond traditional testing to proactive, intelligent QA for complex applications, including LLMs and generative AI, ensuring robust software at unprecedented speed.

The relentless pace of modern software development, driven by DevOps and MLOps methodologies, demands an equally agile approach to quality assurance. Traditional testing, often characterized by manual test case creation and brittle automation, struggles to keep up. This bottleneck is exacerbated by the increasing complexity of applications, especially those incorporating sophisticated AI models like Large Language Models (LLMs), generative AI, and computer vision. The solution? An intelligent revolution in QA: AI-Powered Test Generation and Self-Healing Test Suites.

This paradigm shift isn't just about making testing faster; it's about making it smarter, more resilient, and seamlessly integrated into the continuous delivery pipeline. By leveraging advanced AI techniques, we can move beyond reactive bug fixing to proactive quality assurance, ensuring robust, high-quality software and AI systems at an unprecedented speed.

The Unbearable Weight of Test Maintenance

Before diving into the AI-powered solutions, let's acknowledge the elephant in the room for many QA teams: test maintenance. Automation, while powerful, often comes with a significant hidden cost. UI changes, API updates, or even minor refactors can cause dozens, if not hundreds, of automated tests to fail even though the application itself still works. These brittle tests, like "flaky" tests that pass and fail intermittently, erode trust in the automation suite, waste developer time on investigating false positives, and ultimately slow down release cycles. This "test maintenance nightmare" is a primary driver behind the need for more intelligent, adaptive testing solutions.

Furthermore, the rise of AI-driven applications introduces new testing complexities. How do you test a non-deterministic LLM? How do you ensure the fairness and robustness of a computer vision model against adversarial attacks? Traditional, script-based testing often falls short, necessitating a more dynamic and intelligent approach.

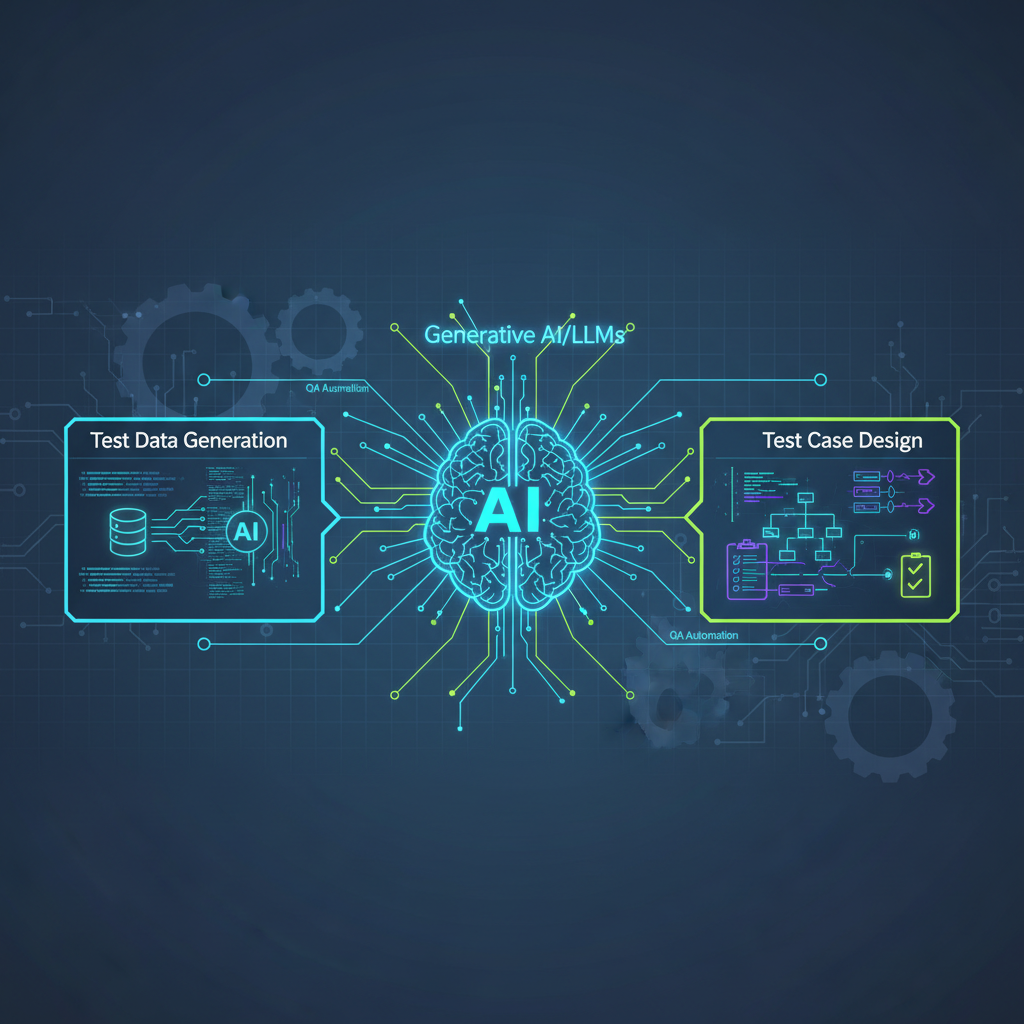

AI-Powered Test Case Generation (TCG): Beyond Manual Scripting

The first pillar of this revolution is the ability of AI to generate test cases autonomously. This moves us away from the laborious, error-prone process of manual test script writing, allowing testers and developers to focus on higher-level strategy and exploratory testing.

LLM-based Test Case Generation

Large Language Models (LLMs) are proving to be game-changers in TCG. Their ability to understand natural language, reason, and generate coherent text makes them ideal for translating human requirements into executable test scenarios.

- Functional Test Cases from Natural Language: Imagine feeding an LLM a user story like, "As a registered user, I want to be able to reset my password so I can regain access to my account." The LLM can then generate a step-by-step test case:

- Navigate to the login page.

- Click on "Forgot Password."

- Enter a valid registered email address.

- Click "Send Reset Link."

- Verify a confirmation message is displayed.

- Check email inbox for the reset link.

- Click the reset link and verify navigation to the password reset page.

- Enter a new password and confirm it.

- Click "Reset Password."

- Verify successful password change and redirection to login.

This capability drastically reduces the time spent on initial test case creation, especially for well-defined functional requirements. Tools can then convert these steps into executable code (e.g., Playwright, Selenium, Cypress).

- Edge Cases and Negative Scenarios: One of the most valuable contributions of LLMs is their ability to infer less obvious test cases. By analyzing requirements and common failure patterns, they can suggest:

- Invalid Inputs: What happens if a user enters an invalid email format, an empty password, or a password that doesn't meet complexity requirements?

- Boundary Conditions: Testing with minimum/maximum allowed values, or just outside these boundaries.

- Concurrency Issues: What if multiple users try to access the same resource simultaneously?

- Security Vulnerabilities: Prompting an LLM with security best practices can lead it to suggest tests for SQL injection, XSS, or broken authentication attempts.

- Synthetic Test Data Generation: For data-intensive applications and especially ML models, having diverse and representative test data is crucial. LLMs can generate synthetic data (e.g., user profiles, product catalogs, financial transactions) that adheres to specified schemas, distributions, and business rules. This is particularly useful when real data is sensitive, scarce, or difficult to obtain. For example, an LLM could generate a CSV file of 100 customer records with varying demographics, purchase histories, and valid email formats, all within specified constraints.

- API Test Generation: Given an OpenAPI (Swagger) specification, an LLM can analyze the endpoints, request/response schemas, and authentication methods, and then generate a suite of API tests. This includes:

- Valid Requests: Constructing requests with correct parameters and bodies.

- Invalid Requests: Sending malformed JSON, missing required headers, or incorrect data types.

- Authentication Tests: Verifying token expiration, invalid credentials, and unauthorized access attempts.

- Performance Tests: Suggesting scenarios for load testing specific endpoints.
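To make the valid and invalid request categories above concrete, here is a minimal sketch of deriving request cases from a schema. It is a deterministic, rule-based stand-in for what an LLM would be prompted to produce, not an LLM call. The `SPEC` fragment is hypothetical and heavily trimmed (it is not a complete OpenAPI document), and `derive_cases`, `VALID`, and `WRONG` are names invented for this illustration.

```python
from typing import Any

# Hypothetical, heavily trimmed OpenAPI-style fragment for a single endpoint.
SPEC = {
    "path": "/users",
    "method": "post",
    "schema": {
        "required": ["email", "age"],
        "properties": {
            "email": {"type": "string"},
            "age": {"type": "integer"},
        },
    },
}

# One plausible valid sample and one wrong-typed sample per schema type.
VALID = {"string": "user@example.com", "integer": 30, "boolean": True}
WRONG = {"string": 12345, "integer": "not-a-number", "boolean": "maybe"}


def derive_cases(spec: dict[str, Any]) -> list[dict[str, Any]]:
    """Build one valid case, then one negative case per required field and per typed field."""
    props = spec["schema"]["properties"]
    valid_body = {name: VALID[p["type"]] for name, p in props.items()}
    cases = [{"name": "valid_request", "body": valid_body, "expect": "2xx"}]

    # Negative cases: drop each required field in turn.
    for field in spec["schema"]["required"]:
        body = {k: v for k, v in valid_body.items() if k != field}
        cases.append({"name": f"missing_{field}", "body": body, "expect": "4xx"})

    # Negative cases: send the wrong data type for each field.
    for name, p in props.items():
        body = {**valid_body, name: WRONG[p["type"]]}
        cases.append({"name": f"wrong_type_{name}", "body": body, "expect": "4xx"})
    return cases


cases = derive_cases(SPEC)
for case in cases:
    print(case["name"], case["expect"])
```

Each generated case could then be handed to whatever HTTP client and assertion style the team already uses. The "2xx" and "4xx" expectations are placeholders; the exact status codes depend on the API under test.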

Model-Based Testing (MBT) with AI

MBT traditionally involves creating a model of the system under test (SUT) and then generating tests from that model. AI elevates MBT by automating the model creation and optimizing test path generation.

- Learning Application Behavior: AI, particularly through techniques like Reinforcement Learning (RL) or advanced graph analysis, can observe user interactions, analyze application logs, and even parse UI elements to build a dynamic model of the application's state transitions and user flows.

- Optimal Test Path Generation: Once a model is learned, AI can then generate optimal test paths to achieve maximum coverage (e.g., covering all states, all transitions) or to target specific areas of risk. An RL agent, for instance, could explore different UI paths, receiving "rewards" for reaching new states or triggering specific events, thereby discovering complex user journeys that might lead to bugs.
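As a rough illustration of generating test paths from a model, the sketch below covers every transition of a small state graph using plain breadth-first search. It does not use reinforcement learning, and the model is hand-written here, whereas the approach described above would learn it from logs or UI exploration. The `MODEL` contents and function names are hypothetical.

```python
from collections import deque

# Hypothetical state model of a login flow: state -> {action: next_state}.
MODEL = {
    "login_page": {
        "submit_valid": "dashboard",
        "submit_invalid": "login_error",
        "click_forgot": "reset_page",
    },
    "login_error": {"retry": "login_page"},
    "reset_page": {"send_link": "login_page"},
    "dashboard": {"logout": "login_page"},
}


def shortest_actions(model, start, goal):
    """Breadth-first search for the shortest action sequence from start to goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, actions = queue.popleft()
        if state == goal:
            return actions
        for action, nxt in model.get(state, {}).items():
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, actions + [action]))
    return None  # goal is unreachable from start


def all_transition_paths(model, start):
    """One test path per transition: reach its source state, then fire the transition."""
    paths = []
    for state, transitions in model.items():
        prefix = shortest_actions(model, start, state)
        if prefix is None:
            continue  # skip transitions that belong to unreachable states
        for action in transitions:
            paths.append(prefix + [action])
    return paths


paths = all_transition_paths(MODEL, "login_page")
for path in paths:
    print(" -> ".join(path))
```

An RL agent would replace the exhaustive search with learned exploration, which matters once the state space is too large to enumerate.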

Fuzz Testing with AI

Fuzz testing involves feeding a program with large amounts of malformed, unexpected, or random data to uncover vulnerabilities or crashes. AI enhances fuzzing by making it "intelligent."

- Grammar-Based Fuzzing: Instead of purely random inputs, AI can learn the input grammar or protocol of a system and generate inputs that are syntactically valid but semantically unexpected.

- Coverage-Guided Fuzzing: By tracking code coverage during fuzzing, AI can prioritize and mutate the inputs that reach new code paths, increasing the efficiency of bug discovery.

- Anomaly Detection: AI can learn what constitutes "normal" behavior and then generate inputs that specifically try to trigger deviations from this norm, making it more effective at finding subtle vulnerabilities.
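The coverage-guided idea can be sketched in a few lines. The loop below is the plain mutate-and-keep feedback cycle with no machine learning in it; an AI component would typically replace the random `mutate` step or the choice of which corpus entry to mutate. The `target` function is a toy stand-in for an instrumented program, and every name here is hypothetical.

```python
import random


def target(data: bytes) -> set[str]:
    """Toy system under test: returns the set of branch labels the input reached."""
    hit = {"entry"}
    if len(data) > 0 and data[0] == ord("F"):
        hit.add("F")
        if len(data) > 1 and data[1] == ord("U"):
            hit.add("FU")
            if len(data) > 2 and data[2] == ord("Z"):
                hit.add("FUZ")  # deepest branch, standing in for a bug
    return hit


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one random byte, or append a random byte."""
    buf = bytearray(data)
    if buf and rng.random() < 0.5:
        buf[rng.randrange(len(buf))] = rng.randrange(256)
    else:
        buf.append(rng.randrange(256))
    return bytes(buf)


def fuzz(seed_input: bytes, iterations: int, seed: int = 0):
    """Keep only the mutated inputs that reach branches not seen before."""
    rng = random.Random(seed)
    corpus = [seed_input]
    coverage = set(target(seed_input))
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        reached = target(candidate)
        if not reached <= coverage:  # candidate hit at least one new branch
            coverage |= reached
            corpus.append(candidate)  # keep it as a base for later mutations
    return corpus, coverage


corpus, coverage = fuzz(b"A", iterations=20000)
print(sorted(coverage), len(corpus))
```

The design point is the feedback: inputs that unlock a new branch are kept and mutated further, so the nested checks can be reached step by step rather than by a single lucky guess.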

Self-Healing Test Suites: The End of Brittle Automation

The second, equally critical pillar is the ability of test suites to adapt and "heal" themselves in the face of application changes. This directly addresses the test maintenance nightmare, transforming brittle automation into resilient, trustworthy assets.

Dynamic Locator Adaptation

One of the most common causes of test failures is changes to UI element locators (e.g., id, xpath, css selector). When a developer renames an id or refactors the DOM structure, tests break. Self-healing tackles this head-on:

- AI-Powered Element Identification: When a test fails because a locator is no longer valid, AI/ML models step in. These models, often trained on vast datasets of UI elements, use a combination of techniques:

- Computer Vision: Analyzing the visual appearance of the UI (e.g., button shape, color, text content) to identify the intended element.

- Natural Language Processing (NLP): Extracting text labels, tooltips, or nearby text to match the element's semantic meaning.

- DOM Structure Analysis: Understanding the element's relationship to other stable elements in the Document Object Model (e.g., "the button next to the 'Submit' label").

- Automatic Locator Updates: Once the AI identifies the "new" element, it can automatically update the test script's locator strategy, either by suggesting a new, more robust locator or by directly modifying the test code. This happens in real-time or as part of a review process, preventing the test from failing in subsequent runs.

Example:

A test script might look for a login button using id="loginButton". If a developer changes it to id="userAuthButton", the test fails. A self-healing system would:

- Detect the failure.

- Analyze the screenshot/DOM at the point of failure.

- Using computer vision and NLP, identify a button that visually resembles the old "Login Button" and has text like "Log In" or "Sign In."

- Propose or automatically update the locator to something like text="Log In" or a more stable xpath based on its new position.
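The steps above can be sketched as a small matching routine. This version uses simple string similarity from Python's standard `difflib` module as a stand-in for the computer vision and NLP models a real tool would use. The element snapshot, the simplified DOM, the weights, and the 0.8 threshold are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher

# Attributes recorded the last time the locator worked (hypothetical snapshot).
KNOWN_GOOD = {"id": "loginButton", "tag": "button", "text": "Log In", "class": "btn btn-primary"}

# Simplified view of the current page after the developer's rename.
CURRENT_DOM = [
    {"id": "userAuthButton", "tag": "button", "text": "Log In", "class": "btn btn-primary"},
    {"id": "signupLink", "tag": "a", "text": "Create account", "class": "link"},
    {"id": "helpButton", "tag": "button", "text": "Help", "class": "btn btn-secondary"},
]

# Illustrative weights; a real tool would tune or learn these.
WEIGHTS = {"tag": 0.2, "text": 0.5, "class": 0.3}


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity in the range 0..1."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def score(candidate: dict, snapshot: dict) -> float:
    """Weighted similarity between a candidate element and the known-good snapshot."""
    return sum(w * similarity(candidate[k], snapshot[k]) for k, w in WEIGHTS.items())


def heal(snapshot: dict, dom: list[dict], threshold: float = 0.8):
    """Return the original element if its id still exists, else the best match above threshold."""
    for el in dom:
        if el["id"] == snapshot["id"]:
            return el, "original"
    best = max(dom, key=lambda el: score(el, snapshot))
    if score(best, snapshot) >= threshold:
        return best, "healed"
    return None, "failed"  # too ambiguous: surface the failure to a human


element, status = heal(KNOWN_GOOD, CURRENT_DOM)
print(status, element["id"] if element else None)
```

The threshold is the important design choice. Set it too low and the suite may "heal" onto the wrong element and hide a real regression, which is one reason many teams would route proposed locator changes through review.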

Test Step Correction and Flow Adaptation

Sometimes, application flows change subtly. An extra confirmation dialog might appear, or the order of steps might be slightly altered. Instead of failing outright, AI can attempt to infer and correct the test's execution path.

- Contextual Understanding: AI models can learn the typical flow of an application. If an unexpected pop-up appears, the AI might recognize it as a common confirmation dialog and attempt to click "OK" or "Continue" to proceed with the original test intent.

- Dynamic Path Adjustment: If a step's order changes, AI can use its understanding of the application's states to re-sequence the actions or find an alternative path to achieve the desired outcome, minimizing test failures due to minor UI/flow refactors.

Root Cause Analysis & Prioritization

When tests do fail (because some changes are too significant for self-healing, or it's a genuine bug), AI can significantly accelerate the debugging process.

- Failure Pattern Analysis: AI can analyze historical test failure patterns, correlating them with recent code changes, deployment environments, or even specific user stories. This helps pinpoint the likely culprit much faster than manual investigation.

- Log and Code Change Correlation: By integrating with source control and logging systems, AI can suggest which recent code commits or configuration changes are most likely responsible for a test failure.

- Prioritization: Not all failed tests are equally critical. AI can prioritize failures based on their impact (e.g., blocking critical user flows), frequency, or correlation with high-risk areas of the application, guiding developers to address the most important issues first.
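One simple way to approximate the code change correlation described above is to rank recent commits by how much their changed files overlap with the files a failing test exercises. The sketch below uses Jaccard overlap as the score. This is a heuristic chosen for illustration, not a method any particular tool is known to use, and the commit data is made up.

```python
from dataclasses import dataclass


@dataclass
class Commit:
    sha: str
    files: set[str]


def rank_suspects(test_files: set[str], commits: list[Commit]) -> list[tuple[str, float]]:
    """Score each commit by the Jaccard overlap between its changed files and the test's files."""
    ranked = []
    for commit in commits:
        union = commit.files | test_files
        overlap = len(commit.files & test_files) / len(union) if union else 0.0
        ranked.append((commit.sha, overlap))
    # Highest overlap first: these commits are the most likely culprits.
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# Hypothetical inputs: files exercised by the failing test, and recent commits.
failing_test_files = {"auth/login.py", "auth/session.py"}
recent_commits = [
    Commit("c3", {"checkout/cart.py"}),
    Commit("b2", {"auth/login.py", "docs/readme.md"}),
    Commit("a1", {"auth/login.py", "auth/session.py"}),
]

ranked = rank_suspects(failing_test_files, recent_commits)
for sha, overlap in ranked:
    print(sha, round(overlap, 2))
```

A learned model could add further signals such as historical failure patterns or log messages, but even this file-overlap score narrows down where to look first.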

Resilience to Data Changes

Applications often rely on external data sources or APIs. If a data schema changes or an external service returns different values, tests can break. AI can help adapt:

- Dynamic Data Mapping: If a field name changes in an API response, AI can learn the new mapping based on content similarity or semantic meaning, allowing tests to continue using the correct data.

- Synthetic Data Adaptation: For tests relying on synthetic data, AI can regenerate or modify the data to conform to new schemas or business rules, ensuring test relevance.
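As a minimal sketch of dynamic data mapping, the function below re-maps the field names a test expects onto renamed keys in an API response. It relies only on name similarity via `difflib.get_close_matches` from the standard library, so it covers simple renames and not the content-based or semantic matching mentioned above. The field names and the 0.6 cutoff are illustrative assumptions.

```python
from difflib import get_close_matches


def remap_fields(expected: list[str], response: dict, cutoff: float = 0.6) -> dict:
    """Map each expected field name to the closest key in the response, or None."""
    mapping = {}
    for name in expected:
        if name in response:
            mapping[name] = name  # unchanged field, no healing needed
            continue
        match = get_close_matches(name, list(response), n=1, cutoff=cutoff)
        mapping[name] = match[0] if match else None
    return mapping


# Hypothetical response after a schema change renamed two fields.
response = {"user_name": "ada", "e_mail": "ada@example.com", "created": "2024-01-01"}
mapping = remap_fields(["username", "email", "signup_ts"], response)
print(mapping)
```

Fields that map to `None` are the ones a human, or a model with real semantic understanding, still has to resolve. Here `signup_ts` and `created` mean similar things but share almost no characters.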

Continuous Testing Integration in DevOps/MLOps: The AI-Powered Pipeline

The true power of AI-powered test generation and self-healing lies in their seamless integration into modern CI/CD (Continuous Integration/Continuous Delivery) and MLOps (Machine Learning Operations) pipelines.

Automated Test Orchestration

In a fast-paced environment, running the entire test suite on every commit can be time-consuming. AI can intelligently orchestrate test execution:

- Change-Based Test Selection: AI can analyze code changes (e.g., Git diffs) and identify which parts of the application have been affected. It then dynamically selects and runs only the relevant subset of tests, significantly reducing pipeline execution time. For example, a change in the user authentication module might trigger only security and login-related tests, not the entire e-commerce checkout flow.

- Risk-Based Prioritization: Based on historical data (e.g., areas with frequent bugs, critical features), AI can prioritize which tests to run first, ensuring that high-risk areas are validated quickly.

- Flaky Test Management: AI can identify consistently flaky tests, quarantine them, or suggest improvements, preventing them from blocking the pipeline.
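Change-based selection and flaky test quarantine can both be sketched with simple set and list operations. The dependency map below is hypothetical (in practice it might come from per-test coverage data), the flakiness measure is a basic flip rate, and the 0.3 threshold is arbitrary. The flip rate also assumes the run history was collected against unchanged code.

```python
# Hypothetical map from each test to the source files it exercises,
# for example derived from per-test coverage data.
TEST_DEPENDENCIES = {
    "test_login": {"auth/login.py", "auth/session.py"},
    "test_password_reset": {"auth/login.py", "mail/sender.py"},
    "test_checkout": {"shop/cart.py", "shop/payment.py"},
}


def select_tests(changed_files, dependencies=TEST_DEPENDENCIES):
    """Return the tests whose dependencies intersect the changed files."""
    changed = set(changed_files)
    return sorted(name for name, files in dependencies.items() if files & changed)


def flip_rate(history):
    """Fraction of consecutive runs whose pass/fail outcome differs."""
    if len(history) < 2:
        return 0.0
    flips = sum(a != b for a, b in zip(history, history[1:]))
    return flips / (len(history) - 1)


def quarantine(histories, threshold=0.3):
    """Return tests whose outcome flips often enough to be treated as flaky."""
    return sorted(name for name, runs in histories.items() if flip_rate(runs) >= threshold)


selected = select_tests(["auth/login.py"])
flaky = quarantine({
    "test_login": [True, True, True, True],
    "test_checkout": [True, False, True, False],
})
print(selected, flaky)
```

An AI-based selector would go further by predicting impact for files that have no recorded dependency, which is where a static map like this one falls short.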

Intelligent Feedback Loops

AI-powered insights are most valuable when they are delivered promptly and actionably to developers.

- Real-time Notifications: Integrating with communication platforms like Slack or Microsoft Teams, AI can send immediate notifications about critical test failures, including potential root causes and suggested fixes.

- Automated Issue Creation: For confirmed bugs, AI can automatically create detailed tickets in issue tracking systems like Jira, pre-populating them with logs, screenshots, and relevant context.

- Dashboard and Reporting: AI can generate intelligent dashboards that highlight trends in test quality, identify areas of concern, and provide insights into the overall health of the application.

Monitoring Test Health

AI doesn't just test the application; it also monitors the health and effectiveness of the test suite itself.

- Identifying Redundant Tests: AI can detect tests that consistently pass without providing new coverage or finding bugs, suggesting they might be redundant and can be removed or optimized.

- Performance Monitoring: AI can track the execution time of tests, identifying slow tests that might be bottlenecks in the CI/CD pipeline.

- Coverage Gaps: By analyzing code coverage data and application usage patterns, AI can highlight areas of the application that are under-tested, prompting the generation of new tests.
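A rough way to surface redundancy candidates is to flag tests whose covered lines are already covered by other tests. The sketch below does a single greedy pass over hypothetical per-test coverage data. Identical coverage does not prove two tests check the same behavior, since their assertions may differ, so the output is best read as a list for human review.

```python
def find_redundant(coverage: dict[str, set[str]]) -> list[str]:
    """Flag tests that add no new covered lines beyond the tests already kept."""
    covered: set[str] = set()
    redundant = []
    # Visit the broadest tests first so narrower duplicates are the ones flagged.
    for name in sorted(coverage, key=lambda n: (-len(coverage[n]), n)):
        if coverage[name] <= covered:
            redundant.append(name)
        else:
            covered |= coverage[name]
    return redundant


# Hypothetical per-test coverage, expressed as "file:line" entries.
COVERAGE = {
    "test_checkout": {"cart.py:10", "cart.py:11", "pay.py:5"},
    "test_cart_total": {"cart.py:10", "cart.py:11"},
    "test_login": {"auth.py:3"},
}

redundant = find_redundant(COVERAGE)
print(redundant)
```

The same coverage data, compared against the full set of executable lines, would also point to the under-tested areas mentioned in the last bullet.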

Practical Applications & Transformative Benefits

The adoption of AI in QA is not merely an academic exercise; it offers tangible, transformative benefits for organizations:

- Drastically Reduced Manual Effort: Imagine a world where testers spend less time writing boilerplate test cases and more time on complex exploratory testing, performance analysis, and security audits. AI automates the mundane, freeing up human creativity.

- Accelerated Release Cycles: By minimizing test maintenance overhead and intelligently orchestrating test execution, AI-powered systems enable true continuous testing, allowing faster feedback loops and quicker time-to-market.

- Superior Test Coverage: AI can explore application states and generate test cases that human testers might overlook, especially for complex systems or AI-driven features, leading to higher quality and fewer production bugs.

- Enhanced Test Reliability and Trust: Self-healing capabilities significantly reduce false positives, so a failing test is far more likely to point to a genuine issue, which helps restore trust in the automation suite.

- Early and Cost-Effective Bug Detection: Issues identified earlier in the development cycle are typically far cheaper to fix than those found in production. AI's ability to generate broad test coverage and provide rapid feedback helps catch bugs before they escalate.

- Robustness for AI Systems: For MLOps, AI-powered testing is indispensable. It can generate adversarial examples to test model robustness, create diverse datasets to check for bias, and simulate real-world scenarios to validate model performance, ensuring the reliability and fairness of AI applications.

Challenges and the Road Ahead

While the promise is immense, several challenges need to be addressed for widespread adoption:

- Explainability and Trust: If an AI generates a test or performs a self-healing action, why did it do that? Understanding the AI's reasoning is crucial for debugging and building trust in the system.

- Over-Generation and Relevance: AI can generate a vast number of tests. The challenge is to ensure these tests are meaningful, non-redundant, and truly critical, avoiding "test bloat."

- Deep Contextual Understanding: For AI to generate truly insightful tests, it needs to understand not just the syntax but also the business logic and user intent behind the application. This requires sophisticated models and integration with business requirements.

- Integration Complexity: Integrating these advanced AI capabilities into existing, often diverse, testing frameworks and CI/CD pipelines can be complex. Standards and robust APIs are needed.

- Ethical Considerations: Especially when testing AI systems with AI, there's a risk of introducing or overlooking biases. Ensuring AI-generated tests are fair, comprehensive, and cover critical safety scenarios is paramount.

- Real-world Scalability: Moving from proof-of-concept to enterprise-grade solutions that can handle the scale and complexity of large organizations requires significant engineering effort.

Conclusion

The convergence of AI, DevOps, and MLOps is ushering in a new era for quality assurance. AI-powered test generation and self-healing test suites are not futuristic concepts; they are becoming essential tools for organizations striving for continuous innovation and uncompromising quality. By embracing these intelligent automation capabilities, we can transform QA from a bottleneck into an accelerator, ensuring that the software and AI systems we build are not only delivered faster but are also more reliable, robust, and trustworthy than ever before. The future of quality is intelligent, adaptive, and continuously evolving.