Foundation Models in Robotics: Bridging AI and the Physical World

Explore how the integration of Foundation Models (FMs) with Robotics and Embodied AI is creating a paradigm shift. This convergence pairs cutting-edge research with progress on long-standing limitations in robotics.

In the rapidly evolving landscape of artificial intelligence, a new frontier is emerging that promises to redefine the capabilities of autonomous systems: the integration of Foundation Models (FMs) with Robotics and Embodied AI. This convergence is not merely an incremental step but a paradigm shift, bridging the gap between the abstract intelligence of large-scale models and the tangible reality of the physical world. For AI practitioners and enthusiasts alike, this field offers a thrilling blend of cutting-edge research, profound technical challenges, and immense practical potential.

Historically, robotics has grappled with fundamental limitations in generalization, common sense reasoning, and natural language understanding. Each new task often required extensive, task-specific data collection and meticulous programming. Enter foundation models – pre-trained on vast, diverse datasets – which bring a wealth of world knowledge, reasoning capabilities, and multi-modal understanding. By infusing robots with the capabilities of large language models (LLMs) and large vision models (LVMs), we are moving towards a future where robots can understand complex instructions, adapt to novel situations, and interact with humans in a far more intuitive and capable manner.

The Dawn of Embodied Intelligence: Why Now?

The timing for this convergence couldn't be more opportune. Several factors are fueling this exciting development:

- Breakthroughs in Foundation Models: The past few years have witnessed an explosion in the capabilities of FMs. LLMs like GPT-3/4, PaLM, and LLaMA demonstrate remarkable language understanding, generation, and reasoning. Vision-language models (VLMs) such as CLIP and GPT-4V connect visual information with textual descriptions, while text-to-image models such as DALL-E generate images from text. These models, trained on internet-scale data, possess a form of "common sense" and world knowledge previously unattainable by robotic systems.

- The "Embodied AI" Imperative: While FMs excel in virtual environments, the ultimate test of intelligence lies in navigating and interacting with the physical world. Embodied AI, which focuses on agents (robots, virtual avatars) that perceive, act, and learn within an environment, provides the real-world constraints and complexities necessary to push AI to its limits. This interaction grounds abstract knowledge in physical reality.

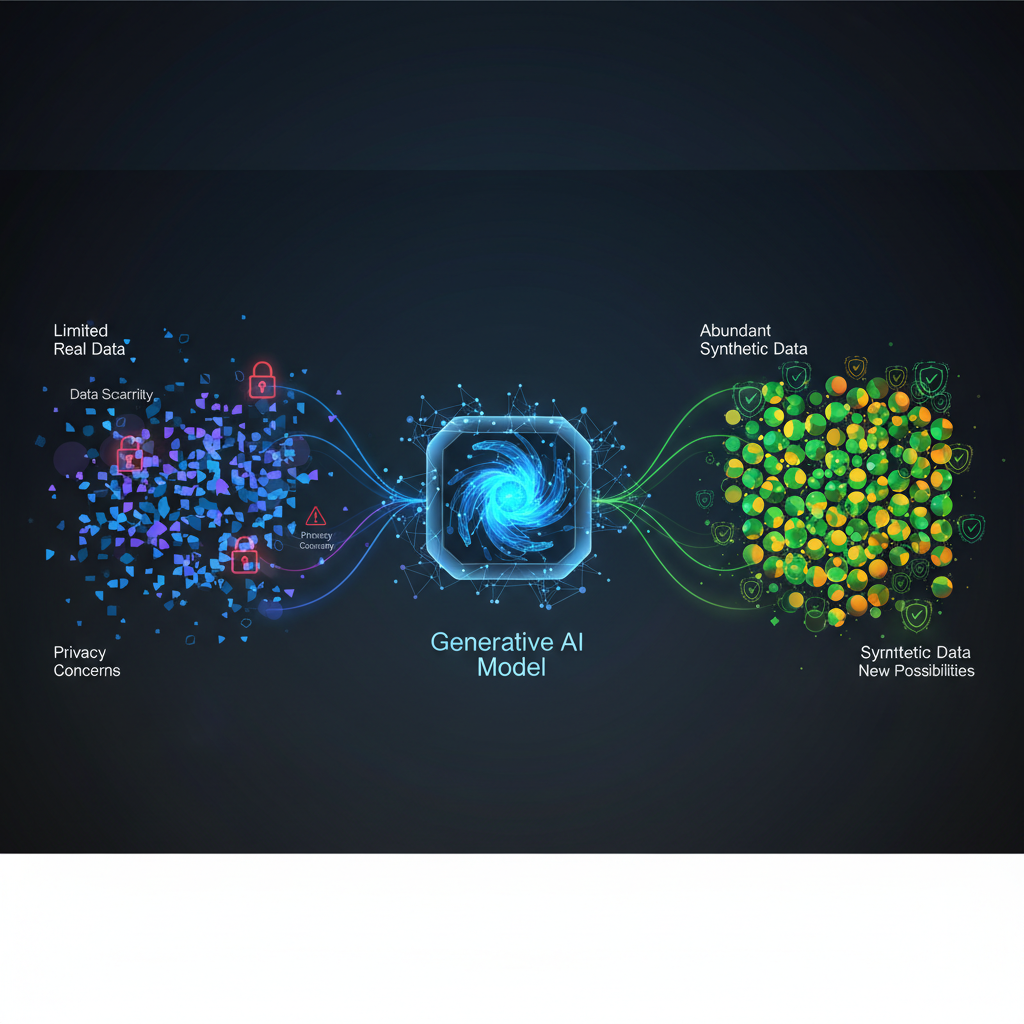

- Data Efficiency and Generalization: One of robotics' biggest hurdles is its insatiable appetite for task-specific data. FMs, with their pre-trained knowledge, offer a pathway to significantly reduce this requirement. They can be fine-tuned or adapted with much less robot-specific data, leading to faster deployment and broader applicability.

- Multi-Modal Integration: The physical world is inherently multi-modal. Robots need to see, hear, touch, and understand language. The development of multi-modal foundation models that can process and integrate text, vision, and even proprioceptive or tactile data is crucial for robust embodied intelligence.

This confluence of advancements is leading to a new era where robots are not just automated machines but intelligent agents capable of understanding, reasoning, and adapting.

Key Concepts and Technologies Powering Embodied FMs

Integrating foundation models into robotic systems involves a sophisticated interplay of various AI paradigms. Here are some of the core technologies and concepts at play:

Large Language Models (LLMs) for High-Level Reasoning

LLMs are becoming the "brains" for high-level planning and instruction interpretation. They can:

- Parse Natural Language Instructions: Translate human commands like "Please clean up the kitchen counter" into a sequence of actionable steps.

- Perform Task Decomposition: Break down complex goals into sub-goals (e.g., "clean kitchen counter" -> "identify dirty items," "pick up items," "wipe surface," "put items away").

- Leverage Common Sense Reasoning: Use their vast knowledge base to infer unstated details or handle ambiguities (e.g., if asked to "get a drink," an LLM might infer "from the fridge" or "a glass of water").

- Generate Explanations and Ask Clarifying Questions: Enhance human-robot interaction by explaining their actions or seeking clarification when uncertain.

Example: An LLM might receive the instruction "Make me coffee." It could then generate a plan: [Go to coffee machine, get mug, add coffee grounds, add water, press brew button, bring coffee to user].
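The coffee example can be sketched in code. The snippet below is a minimal illustration, not a real integration: `fake_llm` is a hypothetical stand-in that returns a canned plan instead of calling a model, and the skill names in `SKILLS` are invented for this example. What it shows is the step that usually sits between the LLM and the robot: parsing the generated text into structured steps and rejecting any step the robot has no skill for.

```python
from typing import List, Tuple

# Hypothetical skill library: primitives we assume the robot can already execute.
SKILLS = {"go_to", "pick_up", "add", "press", "bring"}

Step = Tuple[str, List[str]]


def fake_llm(instruction: str) -> str:
    """Stand-in for a real LLM call; always returns the same numbered plan."""
    return (
        "1. go_to(coffee_machine)\n"
        "2. pick_up(mug)\n"
        "3. add(coffee_grounds)\n"
        "4. add(water)\n"
        "5. press(brew_button)\n"
        "6. bring(coffee, user)\n"
    )


def parse_plan(text: str) -> List[Step]:
    """Turn lines like '2. pick_up(mug)' into ('pick_up', ['mug'])."""
    steps: List[Step] = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        _, _, call = line.partition(". ")      # drop the "2. " prefix
        name, _, rest = call.partition("(")    # split skill name from arguments
        args = [a.strip() for a in rest.rstrip(")").split(",") if a.strip()]
        steps.append((name, args))
    return steps


def make_plan(instruction: str) -> List[Step]:
    """Ask the (fake) LLM for a plan and keep it only if every skill is known."""
    steps = parse_plan(fake_llm(instruction))
    unknown = [name for name, _ in steps if name not in SKILLS]
    if unknown:
        raise ValueError(f"plan uses skills the robot lacks: {unknown}")
    return steps


plan = make_plan("Make me coffee.")
for name, args in plan:
    print(name, args)
```

Validating against a fixed skill set is one simple way to keep a language model from proposing actions the robot cannot perform. Real systems typically add feasibility checks against the current scene as well.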

Vision-Language Models (VLMs) for Perception and Grounding

VLMs are critical for connecting linguistic instructions with visual perceptions of the environment. They give robots capabilities such as:

- Object Recognition and Localization: Identify objects specified in natural language (e.g., "the red mug," "the book on the table").

- Scene Understanding: Comprehend the spatial relationships and context of objects in a scene.

- Grounding Language to Visual Inputs: Associate abstract concepts or commands with concrete visual features. For instance, if told "put the apple next to the banana," a VLM helps the robot identify both fruits visually.

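Example: A VLM like CLIP can embed images and text into a shared latent space. CLIP scores whole images rather than localizing objects by itself, so given an image of a kitchen and the text "find the sugar," a robot can embed candidate regions (for example, crops proposed by an object detector) and select the region whose embedding is most similar to the embedding of "sugar."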
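To make the shared-latent-space idea concrete, here is a small sketch of the matching step only. The three-dimensional vectors are made up for illustration; a real system would obtain them from a model's image and text encoders, which typically produce vectors with hundreds of dimensions. The region names and the `best_region` helper are likewise assumptions of this example.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings for three image crops of a kitchen scene.
region_embeddings: Dict[str, List[float]] = {
    "crop_kettle": [0.9, 0.1, 0.0],
    "crop_sugar_jar": [0.1, 0.9, 0.2],
    "crop_sink": [0.0, 0.2, 0.9],
}

# Made-up embedding for the text query "find the sugar".
text_embedding = [0.2, 0.8, 0.1]


def best_region(text_vec: List[float], regions: Dict[str, List[float]]) -> str:
    """Return the region whose embedding is closest to the text embedding."""
    return max(regions, key=lambda name: cosine(text_vec, regions[name]))


print(best_region(text_embedding, region_embeddings))
```

Because both modalities live in the same space, grounding reduces to a nearest-neighbor lookup, which is why no sugar-specific classifier has to be trained.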

Reinforcement Learning (RL) and Imitation Learning for Control

While FMs provide high-level plans, executing these plans in the physical world often requires precise, real-time control.

- Reinforcement Learning (RL): Often used to fine-tune FM-generated policies or to learn low-level motor skills. An FM might propose a high-level action, and an RL agent learns the optimal sequence of joint movements to execute that action in a dynamic environment.

- Imitation Learning (IL) / Behavior Cloning: Training robots by observing human demonstrations. FMs can enhance this by providing richer representations of states and actions, or by suggesting diverse demonstrations based on their understanding of the task.

Example: An LLM might decide the robot needs to "pick up the cup." An RL policy, potentially guided by the FM, would then learn the exact trajectory and grip force required to successfully grasp the cup without dropping it.
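As a rough illustration of learning the grip force by trial and error, the sketch below uses the simplest reinforcement learning setting, an epsilon-greedy bandit over a handful of candidate forces. Everything here is assumed for the example: the force values, the toy `simulate_grasp` environment (the cup slips below 4 N and cracks above 8 N), and the reward shape. A real policy would also learn the trajectory and would face noisy, continuous dynamics.

```python
import random
from typing import List, Tuple

# Candidate grip forces in newtons (made-up values).
FORCES = [2.0, 4.0, 6.0, 8.0, 10.0]


def simulate_grasp(force: float) -> float:
    """Toy environment: reward 0 if the cup slips or cracks, else a reward
    that is slightly lower the harder the gripper squeezes."""
    if force < 4.0 or force > 8.0:
        return 0.0
    return 1.0 - 0.05 * force


def learn_grip_force(
    episodes: int = 200, epsilon: float = 0.2, seed: int = 0
) -> Tuple[float, List[float]]:
    """Estimate the value of each force with an epsilon-greedy bandit."""
    rng = random.Random(seed)
    q = [0.0] * len(FORCES)      # running average reward per force
    counts = [0] * len(FORCES)   # how often each force was tried
    for t in range(episodes):
        if t < len(FORCES):
            arm = t                                   # try every force once
        elif rng.random() < epsilon:
            arm = rng.randrange(len(FORCES))          # explore
        else:
            arm = max(range(len(FORCES)), key=lambda i: q[i])  # exploit
        reward = simulate_grasp(FORCES[arm])
        counts[arm] += 1
        q[arm] += (reward - q[arm]) / counts[arm]     # incremental mean
    best = max(range(len(FORCES)), key=lambda i: q[i])
    return FORCES[best], q


best_force, values = learn_grip_force()
print(best_force, values)
```

In this toy setup the learner settles on the gentlest force that still holds the cup. The high-level decision ("pick up the cup") stays with the LLM, while the numeric detail is learned from interaction.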

Prompting Techniques for Robotics

Just as LLMs can be "prompted" for various tasks, researchers are exploring how to prompt robots:

- Zero-shot/Few-shot Prompting: Enabling robots to perform novel tasks with minimal or no explicit training, leveraging the FM's pre-trained knowledge.

- Chain-of-Thought Prompting: Encouraging the FM to generate intermediate reasoning steps, which can lead to more robust and explainable robotic behaviors.

- Multi-modal Prompting: Using a combination of text, images, and even demonstrations as prompts to guide robot actions.
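The few-shot and chain-of-thought ideas above can be combined in a single text prompt. The sketch below only assembles the prompt string; it does not call any model. The example task, the skill names, and the `build_prompt` helper are all hypothetical and chosen for illustration.

```python
from typing import Dict, List

# One worked example shown to the model before the new task (few-shot).
FEW_SHOT_EXAMPLES: List[Dict[str, object]] = [
    {
        "instruction": "Throw away the empty can.",
        "reasoning": "The can is on the table. I must hold it before I can "
                     "carry it, and the bin is where trash goes.",
        "plan": ["pick_up(can)", "go_to(bin)", "place(can, bin)"],
    },
]


def build_prompt(
    instruction: str, skills: List[str], examples: List[Dict[str, object]]
) -> str:
    """Assemble a few-shot prompt that asks for reasoning before the plan."""
    lines = [
        "You control a robot. Use only these skills: " + ", ".join(skills) + ".",
        "",
    ]
    for ex in examples:
        lines.append(f"Instruction: {ex['instruction']}")
        lines.append(f"Reasoning: {ex['reasoning']}")
        lines.append("Plan: " + "; ".join(ex["plan"]))
        lines.append("")
    # The new task ends with an open reasoning cue (chain-of-thought).
    lines.append(f"Instruction: {instruction}")
    lines.append("Reasoning: Let's think step by step.")
    return "\n".join(lines)


prompt = build_prompt(
    "Put the apple next to the banana.",
    ["pick_up", "go_to", "place"],
    FEW_SHOT_EXAMPLES,
)
print(prompt)
```

Ending the prompt on the reasoning cue nudges the model to write out intermediate steps before committing to a plan, which also gives a human operator something to inspect.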

Robot Learning Architectures

The integration of FMs into robotic systems requires novel architectures. Common approaches include:

- Hierarchical Architectures: FMs act as high-level planners, generating symbolic plans or skill sequences, while lower-level controllers (e.g., RL policies) execute these plans.

- End-to-End Architectures: Some approaches attempt to train FMs to directly output robot actions, though this is often more challenging due to the complexity of real-world physics.

- FM as Reward Functions: FMs can be used to provide dense, semantic rewards for RL agents, guiding them towards desired behaviors based on high-level goals.
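A hierarchical architecture can be sketched as a planner that emits skill names and a table of low-level controllers that execute them. In the snippet below, `high_level_planner` is a hypothetical stand-in for a foundation model and returns a hard-coded sequence, and each controller is a plain function over a dictionary state rather than a learned policy. The names and the block-stacking task are assumptions made for this example.

```python
from typing import Callable, Dict, List, Optional

State = Dict[str, Optional[str]]


def high_level_planner(goal: str) -> List[str]:
    """Stand-in for an FM planner: maps a goal to a sequence of skill names."""
    plans = {"stack red on blue": ["grasp_red", "move_above_blue", "release"]}
    return plans.get(goal, [])


def grasp_red(state: State) -> State:
    return {**state, "holding": "red"}


def move_above_blue(state: State) -> State:
    return {**state, "gripper_over": "blue"}


def release(state: State) -> State:
    new_state = dict(state)
    # The block lands on blue only if the gripper carried it there.
    if state["holding"] == "red" and state["gripper_over"] == "blue":
        new_state["on_blue"] = "red"
    new_state["holding"] = None
    return new_state


# Low-level controllers; in practice these could be RL or imitation policies.
CONTROLLERS: Dict[str, Callable[[State], State]] = {
    "grasp_red": grasp_red,
    "move_above_blue": move_above_blue,
    "release": release,
}


def run(goal: str, state: State) -> State:
    """Execute the planner's skill sequence with the matching controllers."""
    for skill in high_level_planner(goal):
        if skill not in CONTROLLERS:
            raise KeyError(f"no controller for skill: {skill}")
        state = CONTROLLERS[skill](state)
    return state


final_state = run(
    "stack red on blue",
    {"holding": None, "gripper_over": None, "on_blue": None},
)
print(final_state)
```

The split keeps the slow, general model out of the control loop: it is consulted once per goal, while the controllers handle the step-by-step execution.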

Sim-to-Real Transfer

Training robots directly in the physical world is time-consuming and expensive. Simulators offer a safe and scalable alternative, but policies learned in simulation often degrade on real hardware because simulated physics and sensing differ from reality. FMs, with representations pre-trained on broad real-world data, are being explored as a way to narrow this "sim-to-real" gap so that policies learned in simulation transfer more effectively to real robots.

Practical Applications and Transformative Value

The implications of foundation models for robotics are far-reaching, promising to revolutionize various sectors:

- Enhanced Human-Robot Interaction (HRI): Imagine a robot assistant that understands nuanced verbal commands, asks clarifying questions, and explains its actions in natural language. This will make robots far more accessible and useful in homes, healthcare, and service industries.

- Accelerated Robot Deployment: The ability to "program" a robot by simply telling it what to do, rather than writing complex code or performing extensive teleoperation, could drastically lower the barrier to entry for robotics in manufacturing, logistics, and agriculture.

- Robots in Unstructured Environments: FMs offer the potential for robots to operate effectively in dynamic, unpredictable environments like disaster zones, construction sites, or even our homes, where pre-programmed solutions are insufficient. They can adapt to unforeseen obstacles and novel situations.

- Personalized Robotics: Robots could quickly adapt their behavior based on individual user preferences or novel situations, leading to truly personalized robotic assistants that learn and grow with their users.

- Skill Transfer and Compositionality: FMs can enable robots to learn new skills more rapidly by leveraging existing knowledge and composing simpler actions into complex behaviors. A robot that knows how to "grasp" and "move" can quickly learn to "stack" or "sort."

Pioneering Examples in the Field

The research community is already demonstrating impressive progress:

- RT-X (Robotics Transformer X): A family of models from Google DeepMind and a large group of partner labs, trained on the Open X-Embodiment dataset, which pools robot data from many institutions. The models show improved generalization across robots and tasks, with a single policy controlling several different robot embodiments.

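- Google's "SayCan": This work, from Robotics at Google and Everyday Robots, uses an LLM to score how useful each available skill would be for a natural language instruction, and combines that score with learned value functions that estimate each skill's "affordance" (can the robot do it from the current state?). Choosing skills that are both useful and feasible lets the robot carry out long-horizon instructions it was not explicitly trained on.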

- PaLM-E: An embodied multi-modal model from Google that integrates visual and linguistic inputs to perform complex robotic tasks. It can reason over visual scenes and language instructions to generate action plans.

- Voyager (NVIDIA and academic collaborators): An LLM-powered embodied agent designed for open-ended learning in Minecraft. Voyager continuously explores, acquires new skills, and curates an ever-growing skill library, demonstrating long-term autonomy and self-improvement.

- VIMA (VisuoMotor Attention): A generalist robot manipulation model that can execute a wide range of tasks specified by multi-modal prompts that interleave text with images or video frames. It learns a general-purpose motor policy that can be prompted to perform new tasks without task-specific training.

These examples highlight the nascent but rapidly accelerating capabilities of FM-driven robots, moving beyond rigid programming to more flexible, intelligent, and adaptable systems.
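SayCan-style skill selection is simple enough to sketch. The idea is to multiply two numbers per skill: a language model's score for how much the skill helps with the instruction, and a separately learned estimate of how likely the skill is to succeed from the current state. The scores below are invented for illustration, and `select_skill` is a hypothetical helper, not code from the SayCan release.

```python
from typing import Dict, Tuple


def select_skill(
    llm_scores: Dict[str, float], affordances: Dict[str, float]
) -> Tuple[str, Dict[str, float]]:
    """Pick the skill with the highest usefulness * feasibility product."""
    combined = {
        skill: llm_scores[skill] * affordances.get(skill, 0.0)
        for skill in llm_scores
    }
    return max(combined, key=combined.get), combined


# Instruction: "I spilled my drink, can you help?" (made-up scores)
# How relevant the LLM thinks each skill is as the next step.
llm_scores = {
    "pick up sponge": 0.6,
    "pick up apple": 0.1,
    "go to counter": 0.3,
}
# How likely each skill is to succeed right now; the robot is far from the
# counter, so picking anything up is unlikely to work yet.
affordances = {
    "pick up sponge": 0.1,
    "pick up apple": 0.2,
    "go to counter": 0.9,
}

chosen, combined_scores = select_skill(llm_scores, affordances)
print(chosen, combined_scores)
```

With these numbers the robot first goes to the counter, even though the language model alone would have preferred picking up the sponge. That is the point of the product: it filters out steps that make sense in language but are not feasible in the current state.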

The Road Ahead: Challenges and Opportunities

While the promise is immense, significant challenges remain:

- Grounding: Effectively grounding abstract language commands into concrete physical actions and perceptions is still a complex problem. How do we ensure the robot's understanding aligns with human intent in the physical world?

- Safety and Alignment: Given the emergent properties of large models, ensuring that FM-driven robots operate safely and align with human intentions, especially in critical applications, is paramount. Robust safety protocols and interpretable models are crucial.

- Real-time Performance: Adapting large, computationally intensive FMs for real-time robot control, particularly on resource-constrained hardware, requires innovative optimization techniques.

- Embodied Common Sense: While FMs have vast textual common sense, imbuing robots with practical, physical common sense (e.g., understanding object stability, material properties, or social norms in physical interaction) beyond what's learned from text/image data is a deep research area.

- Data Collection for Embodied FMs: Developing efficient, scalable, and diverse methods for collecting high-quality interaction data from robots and humans is essential for training even more capable embodied FMs.

- Evaluation Metrics: New benchmarks and evaluation metrics are needed to accurately assess the performance and generalization capabilities of FM-powered embodied agents in complex, open-ended tasks.

Conclusion

The fusion of foundation models with robotics and embodied AI marks a pivotal moment in the history of artificial intelligence. It promises to unlock a new generation of intelligent, adaptable, and intuitive robots that can seamlessly integrate into our lives and workplaces. From transforming human-robot interaction to enabling robots to thrive in unstructured environments, the potential impact is revolutionary.

For practitioners, this field offers fertile ground for innovation, demanding expertise across machine learning, robotics, computer vision, and natural language processing. For enthusiasts, it provides a fascinating glimpse into a future where the line between intelligent software and physical agents blurs, paving the way for machines that learn, reason, and act in our world. The journey is just beginning, but the destination, a world populated by intelligent and helpful robots, is coming into view.