Generative AI for Synthetic Data: Revolutionizing AI Training and Privacy

Explore how Generative AI is transforming data scarcity, privacy, and bias challenges in AI development. Learn why synthetic data is becoming crucial for training, testing, and deploying robust AI systems.

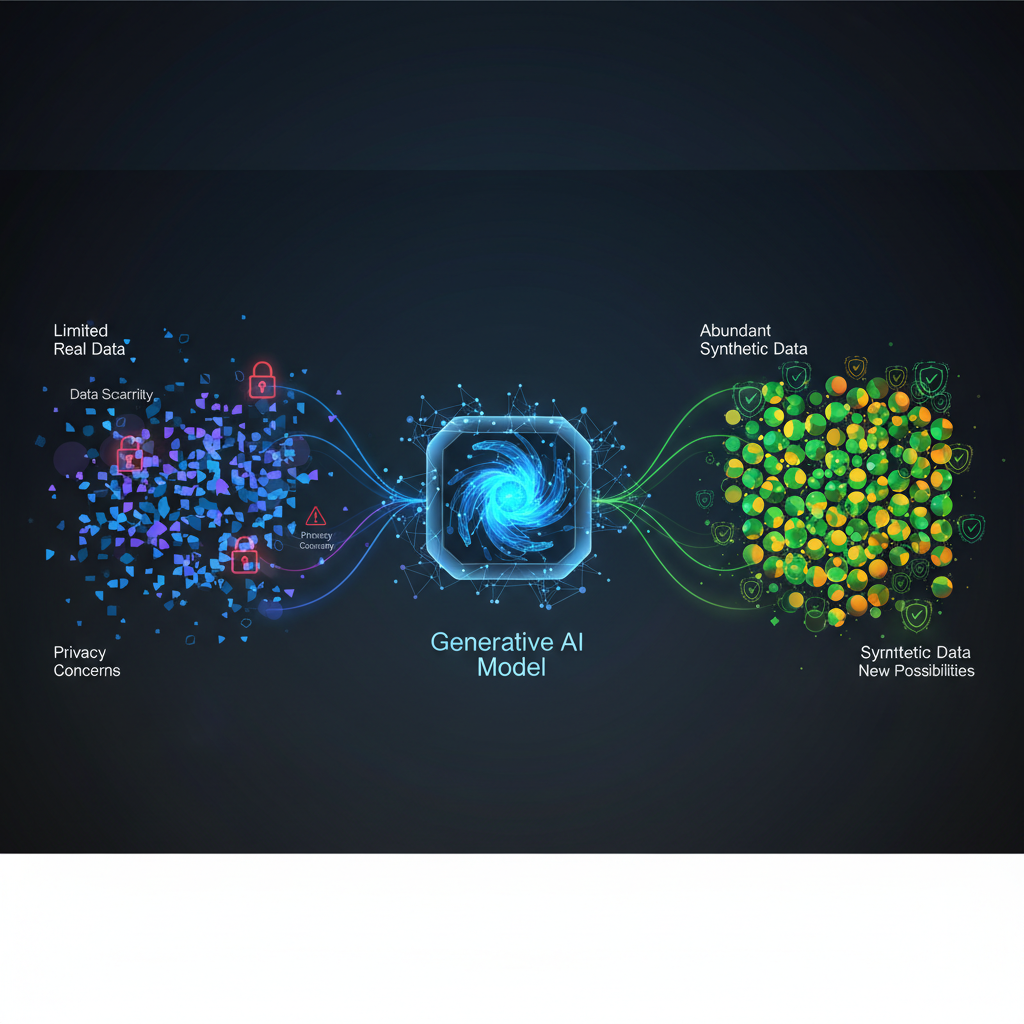

The insatiable appetite of Artificial Intelligence for data is well-known. From the earliest neural networks to today's colossal foundation models, data has been the lifeblood of progress. Yet, this dependency comes with significant challenges: data is often scarce, expensive to acquire, riddled with privacy concerns, or inherently biased. Enter Generative AI for Synthetic Data Generation – a rapidly evolving field poised to revolutionize how we train, test, and deploy AI systems.

This isn't merely a theoretical concept; it's a practical solution addressing some of the most pressing bottlenecks in AI development today. By creating artificial data that mimics the statistical properties and relationships of real-world data, synthetic data offers a powerful pathway to overcome data limitations, enhance privacy, and even mitigate bias.

The Growing Imperative for Synthetic Data

Why is synthetic data gaining such prominence now? Several converging factors highlight its critical importance:

- Data Scarcity & Cost: For many niche applications or emerging markets, collecting sufficient high-quality, labeled real-world data is a monumental task, often prohibitively expensive or simply impossible. Think about rare medical conditions, specific industrial defects, or low-resource languages.

- Privacy Regulations: The global landscape of data privacy (GDPR, HIPAA, CCPA, etc.) has made sharing and utilizing sensitive real-world data increasingly complex and risky. Synthetic data provides a privacy-preserving alternative, allowing development without exposing confidential information.

- Ethical AI & Bias Mitigation: Real-world datasets often reflect societal biases, leading to AI models that perpetuate or even amplify these issues. Synthetic data offers a proactive way to balance datasets, augment underrepresented classes, and create fairer, more equitable training environments.

- Simulation & Edge Cases: In domains like autonomous vehicles, robotics, or cybersecurity, testing AI in real-world scenarios can be dangerous, costly, or simply infeasible for rare "edge cases." Synthetic environments and data allow for robust testing and training without real-world consequences.

- Foundation Model Enablement: The rise of massive foundation models, particularly in multimodal domains, requires vast and diverse datasets. Synthetic data can help bridge gaps and augment these foundational training sets.

- "Data-Centric AI" Movement: The shift towards prioritizing data quality and quantity, rather than focusing solely on model architecture improvements, aligns naturally with the synthetic data paradigm. Improving the data itself often yields better models.

The Generative AI Revolution: Powering Synthetic Data

The true catalyst for the current synthetic data boom is the breathtaking progress in Generative AI. While earlier methods existed, the advent of sophisticated generative models has dramatically improved the fidelity, diversity, and utility of synthetic data.

Let's explore the key players:

1. Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow et al. in 2014, revolutionized generative modeling. They consist of two neural networks, a Generator and a Discriminator, locked in a zero-sum game:

- The Generator creates synthetic data samples (e.g., images, tabular rows) from random noise.

- The Discriminator tries to distinguish between real data samples and the synthetic samples produced by the generator.

The generator's goal is to fool the discriminator, while the discriminator's goal is to correctly identify real vs. fake. This adversarial process drives both networks to improve, resulting in increasingly realistic synthetic data.
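The adversarial game described above is usually written as the minimax objective from the original GAN formulation. In this notation, $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the sample produced from noise $z$, and $p_{\text{data}}$ and $p_z$ are the data and noise distributions:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

In practice, the generator is often trained to maximize $\log D(G(z))$ instead of minimizing $\log(1 - D(G(z)))$, because that variant gives stronger gradients early in training, when the discriminator easily rejects generated samples.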

Example Use Case (Computer Vision): Imagine you need to train an object detection model for a specific type of industrial defect, but real images are scarce. A Conditional GAN (cGAN) could be trained on a small set of real defect images. You could then condition the generator to produce images of defects under varying lighting, angles, and backgrounds, significantly augmenting your training set.

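    # Simplified GAN training loop (PyTorch; toy 2-D data stands in for images)
    import torch
    from torch import nn

    latent_dim, data_dim, batch_size, num_epochs = 8, 2, 64, 200
    generator = nn.Sequential(nn.Linear(latent_dim, 32), nn.ReLU(), nn.Linear(32, data_dim))
    discriminator = nn.Sequential(nn.Linear(data_dim, 32), nn.ReLU(), nn.Linear(32, 1))
    g_opt = torch.optim.Adam(generator.parameters(), lr=1e-3)
    d_opt = torch.optim.Adam(discriminator.parameters(), lr=1e-3)
    bce = nn.BCEWithLogitsLoss()
    ones, zeros = torch.ones(batch_size, 1), torch.zeros(batch_size, 1)

    for epoch in range(num_epochs):
        # Train Discriminator: real samples labeled 1, generated samples labeled 0
        real_images = torch.randn(batch_size, data_dim) * 0.5 + 2.0  # placeholder for a real batch
        fake_images = generator(torch.randn(batch_size, latent_dim)).detach()  # keep G fixed here
        d_loss = bce(discriminator(real_images), ones) + bce(discriminator(fake_images), zeros)
        d_opt.zero_grad()
        d_loss.backward()
        d_opt.step()

        # Train Generator: update only G so that D scores its samples as real
        fake_images = generator(torch.randn(batch_size, latent_dim))
        g_loss = bce(discriminator(fake_images), ones)
        g_opt.zero_grad()
        g_loss.backward()
        g_opt.step()

        # Log losses (saving samples is omitted in this sketch)
        if epoch % 50 == 0:
            print(f"epoch {epoch}: d_loss={d_loss.item():.3f}, g_loss={g_loss.item():.3f}")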


Challenges with GANs: GANs are powerful but notoriously difficult to train, often suffering from mode collapse (where the generator produces a limited variety of samples) and training instability.

2. Variational Autoencoders (VAEs)

VAEs are generative models that learn a compressed, latent representation of the input data. They consist of an Encoder that maps each input to a distribution over a latent space (typically a Gaussian) and a Decoder that reconstructs data from samples drawn from that distribution.

Unlike GANs, which are trained through a two-player minimax game, VAEs minimize a single objective (a reconstruction loss plus a KL divergence term that keeps the latent distribution close to a prior), which makes them more stable to train. They are well suited to generating diverse samples and interpolating between data points in the latent space.
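To make the two loss terms concrete, here is a minimal NumPy sketch. It assumes a diagonal Gaussian posterior, a standard normal prior, and squared error as the reconstruction loss; the function names and toy shapes are illustrative choices, not part of any particular library.

```python
import numpy as np

def vae_loss(x, x_recon, mu, log_var):
    """Batch VAE loss: squared reconstruction error plus KL divergence.

    Assumes a diagonal Gaussian posterior N(mu, exp(log_var)) and a
    standard normal prior N(0, I).
    """
    # Reconstruction term: squared error summed over features, averaged over the batch
    recon = np.mean(np.sum((x - x_recon) ** 2, axis=1))
    # Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions
    kl = np.mean(-0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=1))
    return recon + kl, recon, kl

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6))   # toy batch: 4 records, 6 features
mu = np.zeros((4, 2))             # posterior equal to the prior ...
log_var = np.zeros((4, 2))        # ... so the KL term is zero
total, recon, kl = vae_loss(x, x, mu, log_var)
z = sample_latent(mu, log_var, rng)
print(total, recon, kl, z.shape)
```

In a real VAE, `mu` and `log_var` come from the encoder and `x_recon` from the decoder applied to `z`; generating new records after training amounts to drawing `z` from the prior and decoding it.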

Example Use Case (Healthcare): To share patient data for research while limiting privacy exposure, a VAE could be trained on electronic health records (EHRs). The encoder learns the underlying structure of patient profiles. New synthetic patient records can then be generated by sampling from the learned latent distribution and passing these samples through the decoder. The aim is to preserve statistical similarity while producing new records that do not correspond to any real patient, although the privacy risk still has to be measured, since a generative model can memorize individual training records.

3. Diffusion Models

The current darlings of generative AI, Diffusion Models (the approach behind tools such as DALL-E 2, Stable Diffusion, and Midjourney), have achieved remarkable realism and diversity, particularly in image generation. Training has two parts: a fixed "forward diffusion process" gradually adds noise to an image until it becomes pure noise, and a neural network learns the "reverse diffusion process," removing that noise step by step so that new images can be generated starting from noise.
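The forward process is simple enough to sketch in a few lines of NumPy. The example below assumes the linear noise schedule commonly used in DDPM-style models (1,000 steps, with beta rising from 1e-4 to 0.02); the function names and the toy 8x8 "image" are illustrative.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule; returns the cumulative products alpha_bar_t."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t directly from x_0: sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps  # eps is the target a denoising network learns to predict

rng = np.random.default_rng(0)
alpha_bar = make_schedule()
x0 = np.ones((8, 8))  # toy "image"
for t in (0, 499, 999):
    x_t, _ = forward_diffuse(x0, t, alpha_bar, rng)
    print(t, round(float(alpha_bar[t]), 4), round(float(x_t.mean()), 3))
```

As `t` grows, `alpha_bar[t]` shrinks toward zero, so the original signal fades and `x_t` approaches pure Gaussian noise. The reverse process (not shown) trains a network to predict `eps` from `x_t` and `t`.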

Why they excel for synthetic data:

- High Fidelity: Produce incredibly realistic and high-resolution outputs.

- Diversity: Less prone to mode collapse than GANs, generating a wide variety of samples.

- Conditional Generation: Easily adapted for conditional generation (e.g., "generate a cat wearing a hat") by incorporating text embeddings or other conditioning information.

Example Use Case (Robotics/Autonomous Systems): For training autonomous vehicles, generating diverse driving scenarios is crucial. A diffusion model could be trained on real-world driving footage. Then, by conditioning the model with parameters like "heavy rain," "nighttime," "dense traffic," or "pedestrian crossing," it can generate synthetic video sequences or 3D environments that simulate these complex and often rare conditions, allowing for robust testing and training of perception and planning algorithms.

4. Large Language Models (LLMs)

LLMs, like GPT-3/4, Llama, and others, are not just for chatbots. Their ability to understand context, generate coherent text, and follow instructions makes them powerful tools for generating synthetic textual and even structured tabular data.

Example Use Case (NLP): For low-resource languages or specialized domains (e.g., legal, medical), training data for NLP tasks like sentiment analysis or named entity recognition is scarce. An LLM can be prompted to generate synthetic sentences, paragraphs, or even entire documents that adhere to specific linguistic styles or domain knowledge.

    # Conceptual LLM prompt for synthetic data.
    # `llm` stands for whatever client you use; `generate` is a placeholder
    # method name, not a specific library's API.
    prompt = """
    Generate 10 diverse customer reviews for a new smart coffee maker.
    Each review should include:
    - A rating out of 5 stars.
    - Specific feedback on ease of use, coffee quality, and smart features.
    - Varying sentiment (positive, neutral, negative).
    """

    # A single call is enough, since the prompt already asks for 10 reviews
    synthetic_reviews = llm.generate(prompt)

    # Example output:
    # "Rating: 4/5. The smart features are amazing, but the coffee could be hotter."
    # "Rating: 2/5. Too complicated to set up, and the coffee tastes bland."


LLMs can also generate synthetic tabular data by understanding column relationships and data types, filling in missing values, or creating entirely new rows that maintain statistical properties.
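LLM-generated rows are free text until they are checked, so a validation step is usually worth adding. The sketch below is one possible approach using only the standard library. The schema, column names, and sample output are hypothetical, and the `model_output` string stands in for whatever text a model returns when asked for CSV rows.

```python
import csv
import io

def is_non_negative_float(value):
    try:
        return float(value) >= 0.0
    except ValueError:
        return False

# Hypothetical schema for illustration: column name -> validator
SCHEMA = {
    "age": lambda v: v.isdigit() and 18 <= int(v) <= 100,
    "plan": lambda v: v in {"free", "pro", "enterprise"},
    "monthly_spend": is_non_negative_float,
}

def parse_and_validate(csv_text):
    """Split model output into rows that satisfy the schema and rows that do not."""
    reader = csv.DictReader(io.StringIO(csv_text.strip()))
    valid, rejected = [], []
    for row in reader:
        ok = set(row) == set(SCHEMA) and all(
            row[col] is not None and check(row[col].strip())
            for col, check in SCHEMA.items()
        )
        (valid if ok else rejected).append(row)
    return valid, rejected

# Stand-in for text returned by an LLM asked to emit CSV rows
model_output = """
age,plan,monthly_spend
34,pro,49.99
17,free,0
52,platinum,120.00
41,enterprise,310.5
"""

valid, rejected = parse_and_validate(model_output)
print(len(valid), "valid rows;", len(rejected), "rejected")
```

Schema checks like these catch malformed or out-of-range values, but they say nothing about whether the rows preserve the statistical properties of the real data; that needs a separate comparison against the real dataset.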

Practical Applications Across Industries

The implications of high-quality synthetic data are vast and touch almost every sector leveraging AI.

Computer Vision

- Object Detection & Segmentation: Augmenting datasets for rare objects (e.g., specific manufacturing defects, unusual wildlife) or challenging conditions (e.g., extreme weather, occlusions) in autonomous driving, industrial inspection, or medical imaging.

- Facial Recognition & Biometrics: Generating diverse synthetic faces to train models that are more robust to variations in ethnicity, age, and gender, promoting fairness and reducing bias while lowering reliance on real, privacy-sensitive human images.

- Synthetic Environments: Creating entire virtual worlds for training and testing robotics, drones, and autonomous agents, allowing for rapid iteration and safe exploration of dangerous scenarios.

Natural Language Processing (NLP)

- Low-Resource Languages: Generating synthetic text for languages with limited digital footprints, enabling the development of translation tools, chatbots, and sentiment analysis for underserved communities.

- Data Augmentation: Creating paraphrases, rephrased sentences, or synthetic dialogues to improve the robustness and generalization of NLP models, especially for tasks like intent classification or question answering.

- Chatbot Training: Generating diverse conversational turns, user queries, and system responses to train more natural, comprehensive, and resilient chatbots and virtual assistants.

- Sensitive Text Generation: Creating synthetic medical notes or legal documents for training specialized NLP models without exposing real, confidential information.

Tabular Data

- Financial Fraud Detection: Generating synthetic fraudulent transactions to train models, as real fraud data is inherently scarce and highly sensitive. This helps models learn patterns of anomaly without waiting for real-world events.

- Healthcare & Pharma: Creating synthetic patient records, clinical trial data, or drug discovery datasets for research, model development, and regulatory testing, ensuring patient privacy while enabling innovation.

- Customer Behavior Simulation: Generating synthetic customer profiles, purchase histories, and interaction data for market analysis, recommendation systems, and A/B testing, allowing businesses to experiment without impacting real customers.

- Software Testing: Generating realistic test data for databases and applications, covering edge cases and ensuring robust software performance.

Robotics & Simulation

- Reinforcement Learning: Training robotic agents in highly realistic synthetic environments (simulators) before deploying them in the physical world. This reduces wear and tear on hardware, speeds up learning, and allows for exploration of dangerous states.

- Autonomous Systems: Generating diverse and challenging driving scenarios (e.g., sudden obstacles, complex intersections, varied weather conditions) to rigorously test and train self-driving car algorithms, improving safety and reliability.

Privacy-Preserving ML

- Data Sharing: Enabling organizations to share synthetic versions of their sensitive datasets with partners, researchers, or regulatory bodies without exposing raw, identifiable information, fostering collaboration and innovation.

- Federated Learning Augmentation: Providing synthetic data to local clients in a federated learning setup to improve model training, especially when local real data is scarce, while minimizing the exposure of real data.

Technical Depth & Navigating the Challenges

While the promise of synthetic data is immense, its effective implementation comes with its own set of technical challenges that practitioners must navigate.

1. Model Selection & Training Complexity

Choosing the right generative model (GAN, VAE, Diffusion, LLM) depends heavily on the data type, desired fidelity, diversity requirements, and available computational resources. Each model type has its nuances:

- GANs: Difficult to train, prone to mode collapse, but can achieve high realism.

- VAEs: More stable, good for diverse sampling and interpolation, but can sometimes produce blurrier outputs.

- Diffusion Models: State-of-the-art for realism and diversity, but computationally intensive for training and inference.

- LLMs: Excellent for text and structured data, but generation quality depends heavily on prompt engineering and model size.

Effective training often requires deep expertise in hyperparameter tuning, architectural choices, and understanding potential failure modes.

2. Fidelity, Diversity, and Privacy: The Trilemma

This is arguably the most critical and challenging aspect.

- Fidelity: How statistically similar is the synthetic data to the real data? Does it preserve key relationships, distributions, and outliers?

- Diversity: Does the synthetic data cover the full range of variations present in the real data, avoiding mode collapse?

- Privacy: Does the synthetic data guarantee that no sensitive information from individual real data points can be reconstructed or inferred?

Achieving all three simultaneously is a complex balancing act. For instance, generating highly faithful synthetic data might inadvertently increase the risk of privacy leakage if the model "memorizes" specific real data points. Techniques like Differential Privacy are being integrated into generative models to provide provable privacy guarantees, but often at the cost of some fidelity or utility.
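One common way differential privacy is brought into generative model training is DP-SGD, which clips each example's gradient and adds Gaussian noise before the update. The NumPy sketch below shows only that clip-and-noise step under assumed toy values; it does not compute the resulting privacy budget (epsilon, delta), which requires a separate privacy accountant.

```python
import numpy as np

def dp_average_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each example's gradient to `clip_norm`, add Gaussian noise, then average."""
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    # Scale down only the gradients whose norm exceeds the clipping threshold
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * scale
    # Noise standard deviation is proportional to the clipping norm (the sensitivity)
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=per_example_grads.shape[1])
    return (clipped.sum(axis=0) + noise) / len(per_example_grads)

rng = np.random.default_rng(0)
grads = rng.standard_normal((32, 10)) * 5.0  # toy per-example gradients
noisy_grad = dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(noisy_grad.shape)
```

Clipping bounds how much any single record can influence the update, and the noise hides what influence remains. Both also distort the gradient, which is where the loss of fidelity or utility mentioned above comes from.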

3. Evaluation Metrics: Proving Utility and Safety

Unlike traditional ML, where a single metric such as accuracy or F1-score often suffices, evaluating synthetic data is multifaceted. Robust evaluation requires:

- Statistical Similarity Metrics: Comparing distributions (e.g., Kolmogorov-Smirnov test), correlations, and descriptive statistics between real and synthetic datasets.

- Machine Learning Utility Metrics: Training a downstream ML model on synthetic data and evaluating its performance on real test data. This is often the most practical measure of "goodness."

- Privacy Metrics: Quantifying the risk of membership inference attacks or attribute inference attacks on the synthetic data.

- Diversity Metrics: Assessing the coverage of the synthetic data in the feature space.

- Human Evaluation: For modalities like images or text, human perception studies are crucial to assess realism and coherence.

Developing standardized and universally accepted evaluation frameworks remains an active area of research.
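Two of the checks listed above are easy to prototype: a per-feature Kolmogorov-Smirnov statistic for statistical similarity, and a "train on synthetic, test on real" comparison for ML utility. The sketch below uses NumPy only. The toy data, the `shift` parameter that mimics an imperfect generator, and the nearest-centroid classifier are all stand-ins chosen to keep the example self-contained.

```python
import numpy as np

def ks_statistic(real_col, synth_col):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    real_sorted, synth_sorted = np.sort(real_col), np.sort(synth_col)
    grid = np.concatenate([real_sorted, synth_sorted])
    cdf_real = np.searchsorted(real_sorted, grid, side="right") / len(real_sorted)
    cdf_synth = np.searchsorted(synth_sorted, grid, side="right") / len(synth_sorted)
    return float(np.max(np.abs(cdf_real - cdf_synth)))

def fit_centroids(X, y):
    """Nearest-centroid classifier: one mean vector per class."""
    classes = np.unique(y)
    return classes, np.stack([X[y == c].mean(axis=0) for c in classes])

def predict(model, X):
    classes, centroids = model
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[np.argmin(dists, axis=1)]

def make_data(rng, n, shift=0.0):
    """Toy two-class data; `shift` mimics an imperfect generator."""
    y = rng.integers(0, 2, size=n)
    X = rng.standard_normal((n, 2)) + y[:, None] * 3.0 + shift
    return X, y

rng = np.random.default_rng(0)
X_real_train, y_real_train = make_data(rng, 500)
X_real_test, y_real_test = make_data(rng, 500)
X_synth, y_synth = make_data(rng, 500, shift=0.2)  # stand-in for generated data

# Statistical similarity: one KS statistic per feature (0 = identical, 1 = disjoint)
ks_per_feature = [ks_statistic(X_real_train[:, j], X_synth[:, j]) for j in range(2)]

# ML utility: compare a model trained on real data with one trained on synthetic data,
# both evaluated on the same held-out real test set
acc_real = np.mean(predict(fit_centroids(X_real_train, y_real_train), X_real_test) == y_real_test)
acc_synth = np.mean(predict(fit_centroids(X_synth, y_synth), X_real_test) == y_real_test)

print("KS per feature:", np.round(ks_per_feature, 3))
print("train-real accuracy:", acc_real, "train-synthetic accuracy:", acc_synth)
```

A small gap between the two accuracies suggests the synthetic data is useful for this task. Per-feature KS statistics only compare marginal distributions, so correlations between features need their own check.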

4. Bias Transfer and Mitigation

Generative models learn from the data they are trained on. If the real data contains biases (e.g., underrepresentation of certain demographics, historical inequities), the generative model will likely replicate or even amplify these biases in the synthetic data.

Mitigation strategies include:

- Bias Detection: Employing fairness metrics to identify biases in both real and synthetic data.

- Conditional Generation: Explicitly conditioning the generative process to balance specific attributes (e.g., "generate more images of women in STEM roles").

- Re-weighting/Sampling: Adjusting the training process to give more weight to underrepresented groups.

- Post-processing: Applying techniques to synthetic data to reduce detected biases.
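The re-weighting and rebalancing strategies above can be sketched with the standard library alone. The group labels and counts below are hypothetical, and the two helper functions are illustrative rather than taken from any fairness toolkit.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Weight each record so that every group carries the same total weight."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

def synthetic_quota(groups):
    """How many synthetic records each group needs to match the largest group."""
    counts = Counter(groups)
    target = max(counts.values())
    return {g: target - c for g, c in counts.items()}

groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5  # hypothetical group labels
weights = inverse_frequency_weights(groups)
print(synthetic_quota(groups))  # {'A': 0, 'B': 65, 'C': 75}
```

The weights can be passed to a training loss as per-sample weights, while the quota tells a conditional generator how many records to produce per group. Neither step removes bias that is encoded in the features themselves, so fairness metrics should be rechecked afterwards.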

5. Scalability and Efficiency

Generating large volumes of high-quality synthetic data, especially for complex modalities like high-resolution video, 3D environments, or massive tabular datasets, can be computationally intensive and time-consuming. Research is ongoing into more efficient generative architectures and distributed training methods.

6. Interpretability and Controllability

Understanding why a generative model produces a certain output and having fine-grained control over the generation process (e.g., modifying specific attributes of an image or a tabular record) is crucial for many applications. This area is less mature than interpretability research for discriminative models.

The Future is Synthetic

Generative AI for Synthetic Data Generation is not just a passing trend; it's a fundamental shift in how we approach data in AI. As models become more sophisticated and evaluation metrics more robust, synthetic data will increasingly become an indispensable tool for:

- Democratizing AI: Lowering the barrier to entry for smaller teams and researchers by providing access to high-quality data without the immense cost and effort of real-world collection.

- Accelerating Research & Development: Enabling faster iteration and experimentation in AI development by providing an endless supply of diverse training data.

- Building Fairer AI: Proactively addressing biases and promoting ethical AI by allowing for the creation of balanced and representative datasets.

- Ensuring Privacy: Protecting sensitive information while still deriving valuable insights and building powerful AI solutions.

For AI practitioners and enthusiasts, understanding the principles, capabilities, and challenges of synthetic data generation is no longer optional – it's becoming a core competency. The ability to leverage these powerful generative models to create, evaluate, and deploy synthetic data will define the next generation of AI innovation. The future of AI is data-driven, and increasingly, that data will be synthetic.