Generative AI for Synthetic Data: Unlocking New Frontiers in AI Development

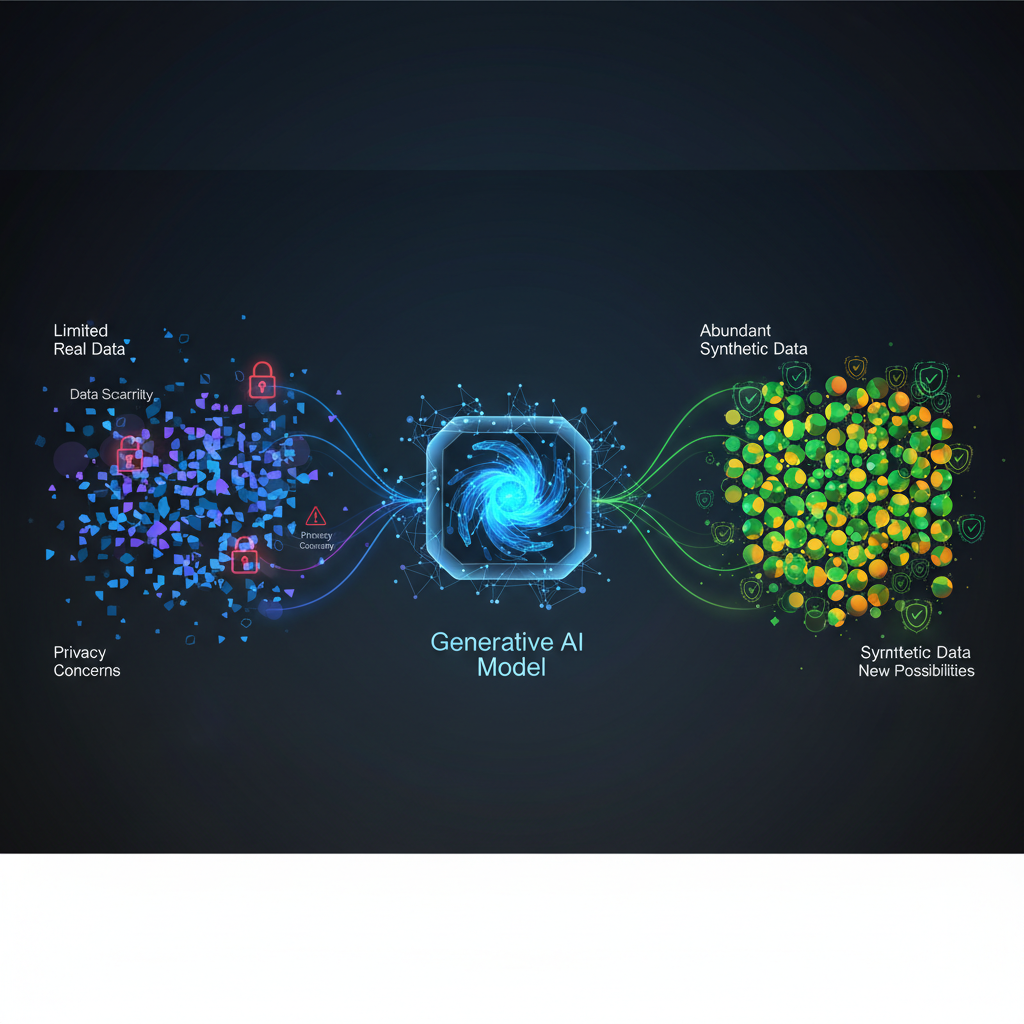

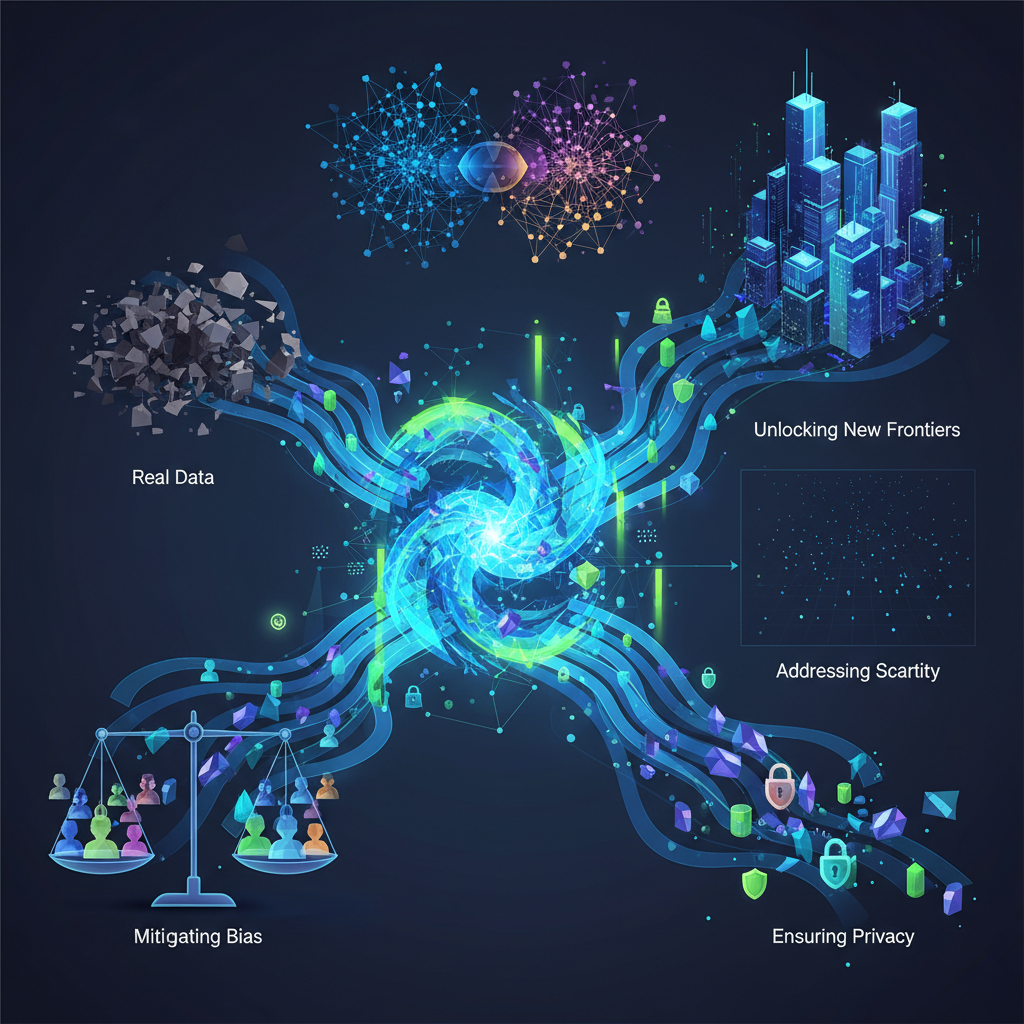

Explore how Generative AI is revolutionizing data creation, addressing scarcity, bias, and privacy challenges. This rapidly evolving field, powered by models like GANs and Diffusion Models, fundamentally changes how we approach data in AI.

The lifeblood of artificial intelligence is data. Yet, in our pursuit of ever more powerful and accurate models, we constantly bump up against the limitations of real-world data: it's scarce, expensive to collect and label, often contains biases, and is increasingly burdened by privacy regulations. Enter Generative AI for Synthetic Data Generation – a rapidly evolving field that promises to unlock new frontiers in AI development by creating artificial data that mirrors the properties of real data.

Once a niche research area, synthetic data generation has exploded into prominence, driven by the remarkable advancements in generative models like Diffusion Models, GANs, and large autoregressive transformers. This isn't just about creating pretty pictures; it's about fundamentally changing how we approach data in AI, offering solutions to some of the most persistent challenges in machine learning.

The Data Dilemma: Why Synthetic Data Matters Now More Than Ever

The AI revolution has, paradoxically, highlighted the "data problem." While model architectures become increasingly sophisticated, the quality and quantity of data often remain the bottleneck. Here's why synthetic data is not just a luxury, but a necessity:

- Data Scarcity & Cold Start Problems: Many critical applications, from rare disease diagnosis to fraud detection, suffer from a lack of sufficient real-world examples for specific events. New products or services also face a "cold start" problem, lacking user data to train initial models.

- Privacy & Regulatory Compliance: Strict regulations like GDPR and CCPA make sharing and using sensitive real data a legal and ethical minefield. Synthetic data can offer a privacy-preserving alternative, although, as discussed below, it is not automatically private.

- Bias & Fairness: Real-world datasets often reflect societal biases, leading to unfair or discriminatory AI models. Correcting these biases requires careful data manipulation or augmentation.

- Cost & Efficiency: Collecting, cleaning, and labeling vast amounts of real data is incredibly expensive and time-consuming. Imagine the cost of labeling millions of images for autonomous vehicles or medical scans.

- Robustness & Edge Cases: Models trained on typical data can fail spectacularly when encountering unusual or "edge" cases. Generating synthetic data for these scenarios can significantly improve model robustness.

These challenges have fueled the demand for intelligent data generation techniques, positioning synthetic data as a cornerstone of the emerging "data-centric AI" paradigm.

The Generative AI Toolkit: How We Create Synthetic Data

The magic behind synthetic data lies in the power of modern generative AI models. These models learn the underlying distribution of real data and then generate new samples that adhere to that learned distribution, effectively creating "new" data points that never existed in the original dataset.
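To make "learn the distribution, then sample from it" concrete, here is a deliberately tiny sketch. It assumes two-column numeric (tabular) data and uses a single multivariate Gaussian as the "generative model". GANs, VAEs, and diffusion models replace this fitted Gaussian with a neural network, but the fit-then-sample workflow is the same. The data and function names are hypothetical.

```python
import math
import random
import statistics


def fit_gaussian(rows):
    """Learn the 'distribution': mean vector and covariance of 2-column rows."""
    xs = [r[0] for r in rows]
    ys = [r[1] for r in rows]
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    n = len(rows)
    vx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    vy = sum((y - my) ** 2 for y in ys) / (n - 1)
    cxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    return (mx, my), (vx, cxy, vy)


def sample_gaussian(params, n, rng):
    """Generate n new rows from the fitted Gaussian using a 2x2 Cholesky factor."""
    (mx, my), (vx, cxy, vy) = params
    a = math.sqrt(vx)
    b = cxy / a
    c = math.sqrt(max(vy - b * b, 0.0))
    rows = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        rows.append((mx + a * z1, my + b * z1 + c * z2))
    return rows


rng = random.Random(0)
# Hypothetical "real" data: column y is strongly correlated with column x.
real = []
for _ in range(2000):
    x = rng.gauss(5.0, 2.0)
    real.append((x, 2.0 * x + rng.gauss(0.0, 1.0)))

params = fit_gaussian(real)
synthetic = sample_gaussian(params, 2000, rng)  # brand-new rows, none copied from `real`
print("real mean:", params[0], "synthetic mean x:", statistics.fmean(x for x, _ in synthetic))
```

The synthetic rows reproduce the means and the x/y correlation of the real rows without reusing any of them. That is the core promise of synthetic data, and also its limit: the output is only as good as the learned distribution.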

Let's explore the key players in this generative toolkit:

1. Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, revolutionized generative modeling. They operate on an adversarial principle, pitting two neural networks against each other:

- Generator (G): Takes random noise as input and tries to produce synthetic data that looks real.

- Discriminator (D): Receives both real data and synthetic data from the generator, and its job is to distinguish between the two.

How it works: The generator constantly tries to fool the discriminator, while the discriminator constantly tries to get better at identifying fakes. This adversarial game drives both networks to improve. Eventually, if trained successfully, the generator becomes adept at producing highly realistic data that even the discriminator can't reliably tell apart from real data.
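Following the original 2014 GAN paper, this adversarial game is usually written as a minimax objective over the discriminator D and the generator G. Here x is drawn from the real data distribution and z from the noise prior, and D outputs the probability that its input is real:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

In practice, the generator is commonly trained with the "non-saturating" variant. It maximizes $\log D(G(z))$ instead of minimizing $\log(1 - D(G(z)))$, which gives stronger gradients early in training. Labeling fake samples as "real" in the generator step of the training loop below amounts to exactly this.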

Strengths: Can produce incredibly realistic samples, especially for images (e.g., StyleGAN for human faces).

Challenges: Notoriously difficult to train because of training instability and a phenomenon called "mode collapse" (where the generator produces only a limited variety of samples).

Example Application: Generating synthetic images of faces for privacy-preserving research, or creating synthetic medical images for data augmentation.

```python
# Conceptual (simplified) GAN training loop
import torch
import torch.nn as nn
import torch.optim as optim

# Assumes Generator (G) and Discriminator (D) are nn.Modules defined elsewhere:
# - G has a `latent_dim` attribute and maps noise of shape (batch, latent_dim) to samples
# - D returns one raw logit per sample, shape (batch, 1), hence BCEWithLogitsLoss
# Assumes real_data_loader yields tensors of real samples
# (unpack (data, label) tuples first if your dataset returns them).


def train_gan(G, D, real_data_loader, epochs=100, device="cpu"):
    G.to(device)
    D.to(device)
    criterion = nn.BCEWithLogitsLoss()
    # betas=(0.5, 0.999) is a common choice for GANs (popularized by DCGAN)
    optimizer_G = optim.Adam(G.parameters(), lr=0.0002, betas=(0.5, 0.999))
    optimizer_D = optim.Adam(D.parameters(), lr=0.0002, betas=(0.5, 0.999))

    for epoch in range(epochs):
        for i, real_images in enumerate(real_data_loader):
            real_images = real_images.to(device)
            batch_size = real_images.size(0)
            labels_real = torch.ones(batch_size, 1, device=device)
            labels_fake = torch.zeros(batch_size, 1, device=device)

            # Train Discriminator: push D(real) toward 1 and D(fake) toward 0
            optimizer_D.zero_grad()
            loss_D_real = criterion(D(real_images), labels_real)
            noise = torch.randn(batch_size, G.latent_dim, device=device)
            fake_images = G(noise).detach()  # Detach to stop gradients from flowing to G
            loss_D_fake = criterion(D(fake_images), labels_fake)
            loss_D = loss_D_real + loss_D_fake
            loss_D.backward()
            optimizer_D.step()

            # Train Generator: the generator wants D to think fakes are real
            optimizer_G.zero_grad()
            noise = torch.randn(batch_size, G.latent_dim, device=device)
            fake_images = G(noise)
            loss_G = criterion(D(fake_images), labels_real)
            loss_G.backward()
            optimizer_G.step()

            if i % 100 == 0:
                print(f"Epoch [{epoch}/{epochs}], Step [{i}/{len(real_data_loader)}], "
                      f"Loss D: {loss_D.item():.4f}, Loss G: {loss_G.item():.4f}")
```


2. Variational Autoencoders (VAEs)

VAEs offer a more stable and interpretable approach to generative modeling compared to GANs. They are built upon the concept of autoencoders but with a probabilistic twist:

- Encoder: Maps the input data to a latent space, but instead of a single point, it outputs parameters (mean and variance) of a probability distribution (typically Gaussian).

- Decoder: Takes a latent vector sampled from this distribution (in practice via the "reparameterization trick") and maps it back to data space, reconstructing the original input.

How it works: The VAE is trained to minimize two objectives: the reconstruction loss (how well the decoder reconstructs the input) and a regularization loss (Kullback-Leibler divergence) that forces the latent space to conform to a prior distribution (e.g., a standard normal distribution). This regularization ensures that the latent space is continuous and well-structured, allowing for smooth interpolation and meaningful sampling.
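The two ingredients that make this work can be sketched in a few lines of plain Python, assuming a diagonal Gaussian encoder that outputs a mean `mu` and a log-variance `logvar` per latent dimension. The first is the reparameterization trick used to sample the latent vector. The second is the closed-form KL divergence to a standard normal prior. The encoder outputs and function names are hypothetical, and squared error stands in for the reconstruction term as one common choice.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Draw z ~ N(mu, sigma^2) as z = mu + sigma * eps with eps ~ N(0, 1).

    Written this way, z is a differentiable function of mu and logvar,
    which is what lets the encoder be trained by backpropagation.
    """
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(x, x_recon, mu, logvar):
    """Reconstruction loss (squared error here) plus the KL regularizer."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    return recon + kl_to_standard_normal(mu, logvar)


rng = random.Random(0)
mu, logvar = [0.5, -0.2], [0.0, -1.0]  # hypothetical encoder outputs for one input
z = reparameterize(mu, logvar, rng)    # latent vector that would be fed to the decoder
print(z, kl_to_standard_normal(mu, logvar))
```

The KL term is zero only when `mu` is 0 and `logvar` is 0 (a standard normal). This pull toward the prior keeps the latent space smooth enough that decoding fresh samples from N(0, I) yields plausible new data.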

Strengths: More stable to train than GANs, with a well-structured latent space for interpolation and sampling; well suited to structured data such as tabular data.

Challenges: Generated samples can appear blurrier or less sharp than those from GANs, especially for complex images.

Example Application: Generating synthetic tabular data (e.g., customer demographics, financial transactions) while preserving statistical properties.

3. Diffusion Models

Diffusion models have emerged as the state of the art for high-fidelity image and audio generation. They are inspired by non-equilibrium thermodynamics and operate in two phases:

- Forward Diffusion Process: Gradually adds Gaussian noise to the data over many steps (often hundreds to a thousand), slowly transforming it into pure noise. This process is fixed and requires no learning.

- Reverse Diffusion Process: A neural network learns to reverse this noise-adding process step by step, effectively denoising the data back to its original form. By starting with pure noise and applying the learned reverse steps, the model can generate new, realistic data.

How it works: The neural network is trained to predict the noise that was added at each step of the forward process. During generation, it starts with random noise and iteratively removes the predicted noise, gradually revealing a coherent image or audio sample.
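The forward process has a convenient closed form. Given a noise schedule beta_1..beta_T, let alpha_bar_t be the running product of (1 - beta_t). A noisy x_t can then be drawn directly from x_0 in a single step, and training reduces to regressing the noise that was added. The sketch below assumes the linear schedule from the original DDPM paper (beta from 1e-4 to 0.02 over T = 1000 steps) and uses plain Python lists in place of tensors. The all-zeros "prediction" is a hypothetical stand-in for a trained denoising network.

```python
import math
import random


def linear_alpha_bars(T=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linearly increasing beta schedule."""
    alpha_bars, prod = [], 1.0
    for t in range(T):
        beta = beta_start + (beta_end - beta_start) * t / (T - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Jump straight to step t of the forward process:
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps, with eps ~ N(0, I)."""
    ab = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(ab) * x + math.sqrt(1.0 - ab) * e for x, e in zip(x0, eps)]
    return xt, eps


def noise_prediction_loss(predicted_eps, true_eps):
    """Mean squared error between the predicted noise and the noise actually added."""
    return sum((p - e) ** 2 for p, e in zip(predicted_eps, true_eps)) / len(true_eps)


rng = random.Random(0)
alpha_bars = linear_alpha_bars()
x0 = [0.8, -0.3, 0.1]               # a hypothetical, already-normalized data point
t = rng.randrange(len(alpha_bars))  # each training example uses a random timestep
xt, eps = q_sample(x0, t, alpha_bars, rng)
# A real model would compute predicted_eps = network(xt, t); zeros stand in here.
loss = noise_prediction_loss([0.0] * len(eps), eps)
print(t, loss)
```

Because alpha_bar_t shrinks toward zero as t grows, x_T is essentially pure Gaussian noise. Generation therefore starts from random noise and applies the learned denoising steps in reverse.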

Strengths: Produce incredibly high-quality and diverse samples, often surpassing GANs in realism and mode coverage. Training is also more stable than GAN training.

Challenges: Sampling can be computationally intensive because it requires many sequential denoising steps, though faster sampling methods (e.g., DDIM) are rapidly improving this.

Example Application: Generating ultra-realistic synthetic images for computer vision datasets, creating diverse synthetic audio clips, or even generating synthetic medical scans.

4. Autoregressive Models (e.g., Transformers)

Autoregressive models predict the next element in a sequence based on all preceding elements. While not traditionally thought of as "image generators," they are powerful for sequential data like text, code, and time series. Large language models (LLMs) like the GPT series are prime examples.

How it works: These models learn the probability distribution of sequences. When generating, they sample one token (word, character, number) at a time, conditioned on all previously generated tokens.
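The "one token at a time" loop can be illustrated with a toy character-level model. This sketch swaps in a bigram model, which conditions each character only on the previous one rather than on the full history as a Transformer does. Treat it as an illustration of the autoregressive sampling loop, not of LLM internals. The corpus and function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def fit_bigram(corpus):
    """Count how often each character follows each previous character."""
    counts = defaultdict(Counter)
    for text in corpus:
        padded = "^" + text + "$"  # "^" marks the start of a sequence, "$" the end
        for prev, nxt in zip(padded, padded[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, rng, max_len=20):
    """Sample one character at a time, each conditioned on the one before it."""
    out, prev = [], "^"
    while len(out) < max_len:
        options = counts[prev]
        nxt = rng.choices(list(options), weights=list(options.values()))[0]
        if nxt == "$":  # the model chose to end the sequence
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)


corpus = ["anna", "hannah", "nan", "ann"]  # hypothetical training sequences
model = fit_bigram(corpus)
rng = random.Random(0)
samples = [generate(model, rng) for _ in range(5)]
print(samples)
```

Each sampled sequence follows the character-transition statistics of the corpus but need not match any training string. An LLM does the same thing with a far richer conditional distribution over tokens.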

Strengths: Excellent for sequential data, capable of generating highly coherent and contextually relevant data.

Challenges: Generation can be slow for very long sequences because tokens are produced one at a time, and these models sometimes struggle to generate truly novel or diverse content beyond their training distribution.

Example Application: Generating synthetic medical records, financial transaction histories, customer reviews, or even synthetic code snippets for testing.

```python
# Conceptual example: Generating synthetic text with a pre-trained LLM
from transformers import pipeline, set_seed

# Load a text generation pipeline (GPT-2 is used here because it is small and public)
generator = pipeline('text-generation', model='gpt2')
set_seed(42)  # make the sampled outputs reproducible

# Generate a synthetic customer review based on a prompt.
# do_sample=True samples tokens instead of decoding greedily, giving more varied outputs.
prompt = "The new AI-powered gadget is"
synthetic_review = generator(prompt, max_length=50, num_return_sequences=1,
                             do_sample=True, truncation=True)[0]['generated_text']
print(f"Synthetic Review: {synthetic_review}")

# Generate a synthetic medical note.
# A general-purpose model like GPT-2 only imitates clinical style; the content is not medically accurate.
medical_prompt = "Patient presented with symptoms of"
synthetic_note = generator(medical_prompt, max_length=100, num_return_sequences=1,
                           do_sample=True, truncation=True)[0]['generated_text']
print(f"Synthetic Medical Note: {synthetic_note}")
```


Practical Applications and Use Cases

The theoretical power of these models translates into immense practical value across various industries:

Healthcare:

- Privacy-Preserving Research: Generate synthetic patient data for medical research without exposing sensitive patient information, which can help with HIPAA compliance.

- Rare Disease Detection: Create synthetic images of rare conditions to train diagnostic models, overcoming data scarcity.

- Medical Imaging Augmentation: Generate diverse synthetic MRI or X-ray images to improve model robustness.

Finance:

- Fraud Detection: Synthesize examples of rare fraud patterns to improve the detection capabilities of models.

- Risk Modeling: Generate synthetic financial transaction data to test new risk models or stress-test existing ones.

- Data Sharing: Financial institutions can share synthetic market data with partners for collaborative analysis without revealing proprietary information.

Autonomous Driving & Robotics:

- Simulation & Digital Twins: Generate vast amounts of synthetic sensor data (LiDAR, camera, radar) from simulations, which is far safer and cheaper than real-world collection.

- Edge Case Generation: Create synthetic scenarios for dangerous or rare events (e.g., unusual weather, unexpected obstacles) to train robust autonomous systems.

- Data Labeling Automation: Synthetic data from simulations often comes with perfect, automatically generated labels.

E-commerce & Retail:

- Personalization: Generate synthetic user behavior data to test new recommendation algorithms without impacting real users.

- Inventory Optimization: Simulate various demand scenarios using synthetic sales data to optimize inventory management.

- A/B Testing: Create synthetic customer profiles and interactions to pre-test marketing campaigns.

Bias Mitigation & Fairness:

- Debiasing Datasets: Analyze biases in real data (e.g., underrepresentation of certain demographics) and generate synthetic samples to balance the dataset, leading to fairer models.

- Augmenting Underrepresented Groups: Create synthetic data for minority classes or demographic groups to ensure models perform equitably across all populations, preventing algorithmic discrimination.

Software Development & Testing:

- Test Data Generation: Automatically generate realistic test data for databases, APIs, and user interfaces, significantly speeding up development cycles.

- Security Testing: Create synthetic adversarial examples to test the robustness of machine learning models against attacks.
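For the rare-event use cases above (fraud patterns, underrepresented groups), a common non-neural baseline is SMOTE-style oversampling. It is swapped in here as a simpler stand-in rather than a generative AI model. It creates new minority-class rows by interpolating between an existing minority row and one of its nearest minority neighbours. The feature values and function names below are hypothetical.

```python
import random


def smote_like(minority, n_new, k, rng):
    """Create n_new synthetic minority rows by interpolating between a random
    minority row and one of its k nearest minority neighbours (SMOTE-style)."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    new_rows = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [r for j, r in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda r: dist2(base, r))[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # random position on the segment from base to other
        new_rows.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return new_rows


rng = random.Random(0)
# Hypothetical rare-class rows: (transaction amount, hour of day)
fraud = [(900.0, 2.0), (950.0, 3.0), (1000.0, 1.0), (870.0, 4.0)]
synthetic_fraud = smote_like(fraud, n_new=8, k=2, rng=rng)
print(synthetic_fraud)
```

Interpolation can only produce points between existing minority examples. This is one reason learned generators are used when the rare class needs richer, more varied samples.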

Practical Considerations and Challenges

While the promise of synthetic data is vast, its implementation comes with its own set of challenges:

Fidelity vs. Diversity vs. Privacy:

- Fidelity: How realistic is the synthetic data? Does it accurately reflect the statistical properties of the real data?

- Diversity: Does the synthetic data cover the full range of variations present in the real data, including rare events?

- Privacy: Does the synthetic data truly protect the privacy of individuals in the original dataset? Can sensitive information be reverse-engineered?

Balancing these three, often conflicting, goals is a core challenge.
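Fidelity and diversity can be checked column by column. The sketch below handles one categorical column and assumes two simple measures. The first is coverage: the share of real categories that appear at all in the synthetic data. The second is total variation distance between the two category distributions. A generator suffering from mode collapse would show low coverage, as in this hypothetical example where the rarer payment types vanish.

```python
from collections import Counter


def category_report(real, synthetic):
    """Compare one categorical column between real and synthetic data.

    coverage: share of real categories that appear at least once in the synthetic data
    tvd: total variation distance between the two category distributions
         (0 = identical frequencies, 1 = no categories in common)
    """
    real_c, syn_c = Counter(real), Counter(synthetic)
    n_real, n_syn = len(real), len(synthetic)
    coverage = sum(1 for c in real_c if c in syn_c) / len(real_c)
    cats = set(real_c) | set(syn_c)
    tvd = 0.5 * sum(abs(real_c[c] / n_real - syn_c[c] / n_syn) for c in cats)
    return coverage, tvd


# Hypothetical payment-type column
real = ["card", "card", "transfer", "cash", "card", "crypto"]
synthetic = ["card", "card", "card", "transfer", "card", "card"]
coverage, tvd = category_report(real, synthetic)
print(coverage, tvd)
```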

Utility Assessment: The ultimate test of synthetic data is its "utility" – how well models trained on it perform on real-world tasks. This requires robust metrics beyond visual inspection. Common approaches include:

- Training a downstream ML model on synthetic data and comparing its performance to a model trained on real data.

- Statistical comparisons (e.g., comparing distributions, correlations, or specific statistical tests).

- Privacy metrics (e.g., formal differential privacy guarantees, or empirical membership inference attacks that test whether a record's presence in the training data can be detected).
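For the statistical-comparison approach, one widely used check for a single numeric column is the two-sample Kolmogorov-Smirnov (KS) statistic. It is the largest vertical gap between the empirical CDFs of the real and synthetic values. The pure-Python sketch below is illustrative, and the column values are hypothetical.

```python
import bisect


def ks_statistic(a, b):
    """Two-sample KS statistic: max |F_a(v) - F_b(v)| over all pooled values v
    (0 = identical empirical distributions, 1 = no overlap at all)."""
    a, b = sorted(a), sorted(b)
    d = 0.0
    for v in a + b:  # the supremum is reached at one of the sample points
        cdf_a = bisect.bisect_right(a, v) / len(a)
        cdf_b = bisect.bisect_right(b, v) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


real_amounts = [12.0, 15.5, 9.9, 30.0, 22.1]        # hypothetical real column
synthetic_amounts = [11.0, 16.0, 10.5, 28.0, 80.0]  # hypothetical synthetic column
print(ks_statistic(real_amounts, synthetic_amounts))
```

In practice, `scipy.stats.ks_2samp` returns the same statistic together with a p-value. Per-column checks like this should be complemented by tests of correlations and downstream model performance.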

Computational Resources: Training state-of-the-art generative models, especially Diffusion Models and large Transformers, requires significant computational power (GPUs, TPUs) and time.

Ethical Implications: While synthetic data can mitigate bias, it can also inadvertently amplify existing biases or introduce new ones if the generative model itself learns and propagates them. For instance, a model trained on biased real data may generate synthetic data that reinforces those biases. Careful monitoring and ethical review are therefore paramount.

Data Leakage: Even with synthetic data, there's a risk of "data leakage" where specific real data points might be memorized and reproduced by the generator, especially if the training dataset is small or the model is overfitted. Techniques like differential privacy can help mitigate this.
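A simple first check for memorization is the "distance to closest record" (DCR). For each synthetic row, it measures how far the row lies from its nearest training row, and exact or near-zero distances flag likely copies. This sketch assumes numeric rows and Euclidean distance. The threshold and data are hypothetical, and features on very different scales (as here) would normally be standardized first.

```python
import math


def distance_to_closest_record(synthetic, training):
    """For each synthetic row, the Euclidean distance to its nearest training row."""
    return [min(math.dist(s, t) for t in training) for s in synthetic]


def flag_possible_copies(synthetic, training, threshold=1e-6):
    """Indices of synthetic rows that (nearly) duplicate some training row."""
    dcr = distance_to_closest_record(synthetic, training)
    return [i for i, d in enumerate(dcr) if d <= threshold]


training = [(34.0, 52000.0), (45.0, 61000.0), (29.0, 48000.0)]   # hypothetical (age, income)
synthetic = [(33.0, 51500.0), (45.0, 61000.0), (50.0, 70000.0)]  # row 1 copies a training row
print(flag_possible_copies(synthetic, training))
```

A low DCR is a warning sign rather than proof of a privacy breach. A common refinement compares the synthetic rows' distances against those of a held-out set of real rows, but only a formal method such as differential privacy gives guarantees.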

The Future is Synthetic

Generative AI for synthetic data generation is more than just a trend; it's a fundamental shift in how we approach data in the age of AI. As generative models continue to advance, becoming more efficient, controllable, and capable of handling diverse data modalities, synthetic data will become an indispensable tool for:

- Democratizing AI: Lowering the barrier to entry for AI development by reducing reliance on expensive and scarce real data.

- Accelerating Innovation: Enabling faster experimentation and iteration in model development.

- Building Fairer and More Robust AI: By providing controlled environments to address bias and improve generalization.

- Ensuring Privacy: Offering a powerful mechanism to develop and deploy AI solutions while respecting individual privacy rights.

The journey from raw data to actionable intelligence is complex. Synthetic data generation, powered by cutting-edge generative AI, offers a compelling pathway to navigate this complexity, promising a future where data limitations are no longer an insurmountable barrier, but an opportunity for intelligent creation.