Generative AI: Revolutionizing Scientific Discovery and Material Design

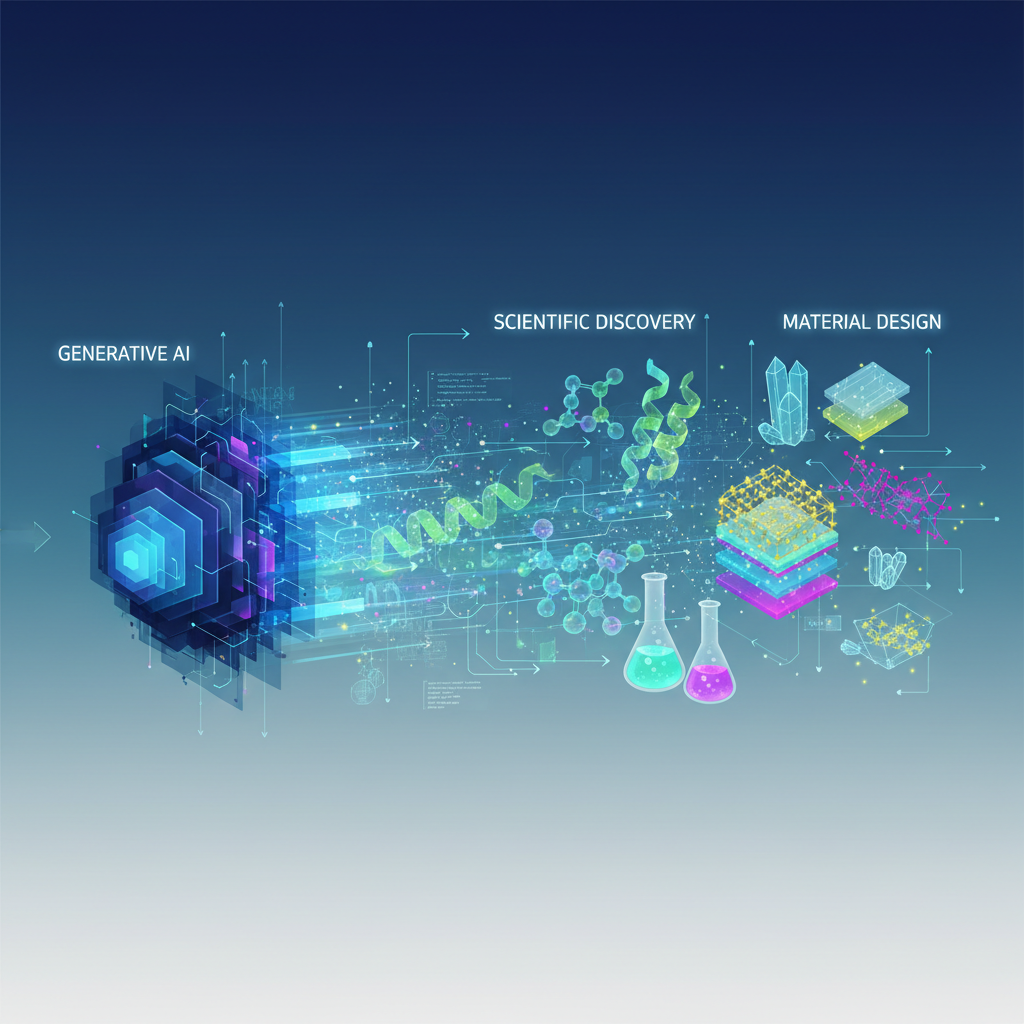

Generative AI is transforming scientific discovery, moving beyond text and image generation to actively design novel molecules, proteins, and materials. This revolutionary shift promises to accelerate solutions for critical global challenges, from healthcare to sustainability.

The relentless march of scientific discovery has always been a testament to human ingenuity, but it has also been a journey fraught with immense challenges. From the painstaking synthesis of new materials to the decades-long odyssey of drug development, progress often feels like navigating a vast, uncharted ocean with only a compass and a small map. Enter Generative AI – a revolutionary paradigm shift that is transforming this arduous voyage into an intelligent, accelerated exploration.

No longer confined to generating compelling images or coherent text, Generative AI is now actively designing the very building blocks of life and matter. It's crafting novel molecules, engineering bespoke proteins, and proposing groundbreaking materials, promising to unlock solutions to some of humanity's most pressing problems, from combating disease to fostering sustainability. This isn't just about automation; it's about intelligent creation, pushing the boundaries of what's possible and fundamentally reshaping the landscape of scientific innovation.

The Dawn of Generative Science: Why Now?

The convergence of several powerful trends has propelled Generative AI into the scientific spotlight:

- Explosive Growth of Generative AI: The past few years have witnessed an unprecedented surge in the capabilities of generative models. Large Language Models (LLMs) like GPT-4, diffusion models like DALL-E and Stable Diffusion, and advanced Variational Autoencoders (VAEs) have demonstrated an astonishing ability to generate novel, coherent, and complex data across various modalities. The underlying principles of these models – learning latent representations and generating new samples from them – are now being skillfully adapted to the intricate world of molecules, proteins, and materials.

- Urgent Need for Acceleration: Traditional scientific discovery and drug development are notoriously slow and expensive, and often rely on trial and error. Bringing a new drug to market is commonly estimated to cost over $2 billion and to take more than a decade. Generative AI offers the potential to dramatically compress these timelines and costs by intelligently exploring vast chemical, biological, and material spaces, identifying promising candidates in silico before costly experimental validation.

- Interdisciplinary Convergence: This field thrives at the exciting intersection of AI, chemistry, biology, materials science, and medicine. This cross-pollination of ideas and methodologies is fostering novel research directions and driving collaborative innovation, creating a vibrant ecosystem for groundbreaking discoveries.

- Data Availability & Computational Power: The increasing availability of large, high-quality scientific datasets (genomic, proteomic, chemical, structural, reaction databases) provides the fuel for these data-hungry models. Simultaneously, advancements in high-performance computing (GPUs, TPUs, cloud infrastructure) make it feasible to train and deploy these sophisticated models on complex scientific problems.

Core Generative AI Paradigms in Scientific Discovery

At its heart, generative AI aims to learn the underlying distribution of a given dataset and then generate new samples that resemble this distribution but are novel. In scientific contexts, this means learning the "rules" of molecular structure, protein folding, or material properties, and then inventing new instances that adhere to these rules while optimizing for desired characteristics.
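
The "learn a distribution, then sample from it" idea can be illustrated with a deliberately tiny stand-in: a character-level bigram model fitted to a handful of SMILES strings. This is a sketch, not one of the architectures discussed in this article. The training list and function names are made up for illustration, and the sampled strings are not guaranteed to be valid SMILES.

```python
import random
from collections import defaultdict

# Toy training set of SMILES strings (illustrative only, not a real dataset).
TRAINING_SMILES = ["CCO", "CCN", "CCCO", "CC(C)O", "CCOC", "CC(=O)O"]

START, END = "^", "$"  # sentinel characters marking string boundaries

def fit_bigram_counts(smiles_list):
    """Count how often each character follows another across the training set."""
    counts = defaultdict(lambda: defaultdict(int))
    for smiles in smiles_list:
        padded = START + smiles + END
        for prev, nxt in zip(padded, padded[1:]):
            counts[prev][nxt] += 1
    return counts

def sample_string(counts, rng, max_len=20):
    """Sample one string by walking the learned transition distribution."""
    out, prev = [], START
    for _ in range(max_len):
        choices = list(counts[prev].keys())
        weights = [counts[prev][c] for c in choices]
        nxt = rng.choices(choices, weights=weights, k=1)[0]
        if nxt == END:
            break
        out.append(nxt)
        prev = nxt
    return "".join(out)

counts = fit_bigram_counts(TRAINING_SMILES)
rng = random.Random(0)
samples = [sample_string(counts, rng) for _ in range(5)]
print(samples)
```

Real generative models replace the bigram table with a neural network and add validity and property checks, but the two phases (fit to data, then sample) are the same.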

Several key generative model architectures are being leveraged:

1. Variational Autoencoders (VAEs)

VAEs are neural networks that learn a compressed, latent representation of input data. They consist of an encoder that maps input data (e.g., a molecular graph or SMILES string) to a lower-dimensional latent space, and a decoder that reconstructs the original data from this latent representation. By sampling points from this learned latent space and passing them through the decoder, VAEs can generate novel molecules, although decoded outputs are not guaranteed to be chemically valid and are typically checked after generation.

Example: Molecular Generation with VAEs

Imagine you want to generate new drug-like molecules.

- Encoder: Takes a SMILES string (e.g., CCO) or a molecular graph as input and outputs a mean and variance vector defining a distribution over points in the latent space.

- Latent Space: A continuous, often multi-dimensional space where similar molecules are clustered together.

- Decoder: Takes a sampled point from the latent space and generates a new SMILES string or molecular graph.
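
The step between encoder and decoder, drawing a latent point from the encoder's mean and variance, is usually implemented with the reparameterization trick. The sketch below shows that step and the standard KL regularization term in plain Python. The encoder outputs are made-up numbers, and no actual encoder or decoder network is included.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, diag(sigma^2)) to N(0, I), summed over dimensions."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv
                     for m, lv in zip(mu, log_var))

# Hypothetical encoder output for one molecule (values are made up).
mu = [0.5, -1.0, 0.0]
log_var = [0.0, -2.0, 0.0]

rng = random.Random(42)
z = sample_latent(mu, log_var, rng)
print("sampled z:", z)
print("KL term:", kl_to_standard_normal(mu, log_var))
```

Working with the log-variance rather than the variance is a common convention because it keeps the standard deviation positive without extra constraints.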

A key advantage of VAEs is their ability to perform interpolation in the latent space. By taking two known molecules, finding their latent representations, and then sampling points along the path between them, VAEs can generate "hybrid" molecules that combine features of the original two. This offers a degree of control over molecular properties. Furthermore, the training objective encourages a continuous and smooth latent space, enabling efficient search for molecules with desired properties using techniques like Bayesian optimization.
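
Latent-space interpolation itself is simple arithmetic. As a minimal sketch, assuming two latent vectors have already been produced by an encoder (the values below are hypothetical), the points along the straight line between them can be computed as follows. Each point would then be passed to the decoder, which is not shown here.

```python
def interpolate(z_a, z_b, steps):
    """Return `steps` evenly spaced points on the line segment from z_a to z_b."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # t runs from 0.0 (z_a) to 1.0 (z_b)
        path.append([(1 - t) * a + t * b for a, b in zip(z_a, z_b)])
    return path

# Hypothetical latent vectors for two known molecules.
z_first = [0.0, 1.0]
z_second = [2.0, -1.0]

for z in interpolate(z_first, z_second, 5):
    print(z)
```

Linear interpolation is the simplest choice; some work uses spherical interpolation instead when latent vectors are drawn from a high-dimensional Gaussian.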

2. Generative Adversarial Networks (GANs)

GANs consist of two competing neural networks: a generator and a discriminator.

- Generator: Tries to create synthetic data (e.g., molecular structures) that are indistinguishable from real data.

- Discriminator: Tries to distinguish between real data and data generated by the generator.

Through this adversarial training process, both networks improve. The generator learns to produce increasingly realistic samples, while the discriminator becomes better at identifying fakes. When applied to molecules, GANs can generate novel chemical structures.

Example: Drug-like Molecule Generation with GANs

- Generator: Takes random noise as input and outputs a molecular representation (e.g., a molecular fingerprint or a graph).

- Discriminator: Takes either a real molecule from a database or a molecule generated by the generator and outputs a probability that it's "real."

- Training: The generator is rewarded for fooling the discriminator, and the discriminator is rewarded for correctly identifying real vs. fake.
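
The reward structure described above is usually written as a pair of loss functions. The sketch below computes them from discriminator outputs, using binary cross-entropy for the discriminator and the widely used non-saturating variant for the generator. The probabilities are made-up numbers, and no networks or gradient updates are included.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples labeled 1, generated samples labeled 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: low when the discriminator scores fakes near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that each molecule is "real".
d_real = [0.9, 0.8]   # scores on molecules from the database
d_fake = [0.1, 0.2]   # scores on generated molecules

print("discriminator loss:", discriminator_loss(d_real, d_fake))
print("generator loss:", generator_loss(d_fake))
```

In this example the discriminator is winning: its loss is low while the generator's is high. Training alternates updates so that each network pushes its own loss down.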

GANs have shown promise in generating diverse molecular structures, but can sometimes suffer from mode collapse (where the generator produces a limited variety of outputs) and challenges in ensuring chemical validity and synthesizability.

3. Diffusion Models

Diffusion models are a newer class of generative models that have achieved state-of-the-art results in image generation and are now being rapidly adopted for scientific data. They work by progressively adding noise to data until it becomes pure noise (the "forward diffusion process"), and then learning to reverse this process, gradually removing noise to generate new data from random noise (the "reverse diffusion process").

Example: Protein Structure Generation with Diffusion Models

- Forward Process: A known protein structure (e.g., represented as a point cloud of atoms or a 3D grid) is slowly corrupted by adding Gaussian noise over many steps.

- Reverse Process (Training): A neural network is trained to predict the noise that was added at each step, effectively learning how to "denoise" the structure.

- Generation: To generate a new protein, start with pure random noise and iteratively apply the learned denoising steps until a coherent protein structure emerges.
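
The forward process described above has a convenient closed form: a structure can be noised to any step t directly, without simulating the intermediate steps. The sketch below assumes a DDPM-style linear noise schedule and applies it to a few made-up 3D atom coordinates. The schedule values and function names are illustrative assumptions, and the denoising network is not shown.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, increasing linearly."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_coordinates(coords, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps."""
    signal, sigma = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    noised, eps_all = [], []
    for atom in coords:
        eps = [rng.gauss(0.0, 1.0) for _ in atom]
        noised.append([signal * x + sigma * e for x, e in zip(atom, eps)])
        eps_all.append(eps)
    return noised, eps_all  # eps_all is what a denoiser is trained to predict

# Made-up coordinates for three atoms (not a real protein).
coords = [[0.0, 0.0, 0.0], [1.5, 0.0, 0.0], [2.0, 1.4, 0.0]]
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)
for t in (0, 499, 999):
    noised, _ = noise_coordinates(coords, alpha_bars[t], rng)
    print(t + 1, round(alpha_bars[t], 4), noised[1])
```

As t grows, alpha_bar shrinks toward zero, so the original coordinates contribute less and the sample approaches pure Gaussian noise.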

Diffusion models excel at generating high-fidelity and diverse samples. Their step-by-step refinement process makes them particularly well-suited for complex structured data like proteins and materials, where small changes can have large impacts.

4. Graph Neural Networks (GNNs) with Generative Models

Molecules and crystal structures are inherently graph-like (atoms as nodes, bonds as edges). Graph Neural Networks (GNNs) are uniquely suited to process this type of data. When combined with generative architectures like VAEs, GANs, or Diffusion Models, GNNs enable the direct generation of molecular graphs, which makes it easier to enforce chemical validity (for example, through valence constraints) and to capture complex structural relationships.

Example: GNN-based Molecular Generation

A GNN-based VAE might encode a molecular graph into a latent vector. The decoder, also a GNN, would then sequentially add atoms and bonds, conditioned on the latent vector, to construct a new molecular graph. This approach directly operates on the fundamental representation of a molecule.
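
To make the "atoms as nodes, bonds as edges" representation concrete, the sketch below encodes ethanol (SMILES: CCO) as a heavy-atom graph and runs one round of message passing, the core operation inside a GNN. It is a simplification: there are no learned weights, hydrogens and bond types are ignored, and the three-symbol vocabulary is an assumption for illustration.

```python
# Ethanol (SMILES: CCO) as a heavy-atom graph: nodes are atoms, edges are bonds.
atoms = ["C", "C", "O"]
bonds = [(0, 1), (1, 2)]

def build_neighbors(num_atoms, bonds):
    """Adjacency list for an undirected molecular graph."""
    neighbors = {i: [] for i in range(num_atoms)}
    for a, b in bonds:
        neighbors[a].append(b)
        neighbors[b].append(a)
    return neighbors

def one_hot(symbol, vocabulary=("C", "N", "O")):
    """Initial node feature: one-hot encoding of the element symbol."""
    return [1.0 if symbol == v else 0.0 for v in vocabulary]

def message_passing_step(features, neighbors):
    """Each atom adds the sum of its neighbors' feature vectors to its own."""
    updated = []
    for i, h in enumerate(features):
        agg = list(h)
        for j in neighbors[i]:
            agg = [x + y for x, y in zip(agg, features[j])]
        updated.append(agg)
    return updated

neighbors = build_neighbors(len(atoms), bonds)
features = [one_hot(a) for a in atoms]
features = message_passing_step(features, neighbors)
print(features)
```

After one step, each atom's vector already reflects its local environment: the middle carbon "sees" both a carbon and an oxygen neighbor. A trained GNN applies learned transformations at each step and stacks several such rounds.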

Cutting-Edge Applications and Use Cases

The theoretical underpinnings of these models are translating into tangible breakthroughs across various scientific disciplines.

1. De Novo Molecular Design for Drug Discovery

This is perhaps the most prominent application. Generative AI is being used to invent entirely new molecules with desired pharmacological properties.

- Lead Compound Identification: Instead of screening millions of compounds, AI can generate a focused library of novel molecules predicted to bind to a specific disease target (e.g., a protein implicated in cancer or infection) with high affinity.

- Example: Researchers at Insilico Medicine used a deep generative model (GENTRL, which combines a variational autoencoder with reinforcement learning) to design novel small-molecule inhibitors of DDR1, a kinase implicated in fibrosis. Within 46 days, they identified a potent lead candidate that was subsequently validated in vitro and in vivo, demonstrating a significant acceleration compared to traditional methods.

- Optimization of Drug Candidates: Once a lead compound is identified, generative models can fine-tune its properties – improving solubility, reducing toxicity, enhancing selectivity, and optimizing metabolic stability – to transform it into a viable drug candidate.

- Antibiotic Discovery: With the rise of antimicrobial resistance, generative AI is crucial for discovering new classes of antibiotics. Models can learn the features of existing antibiotics and then generate novel scaffolds that evade resistance mechanisms.

- Example: Researchers at MIT used a deep learning model to identify halicin, a compound with potent antibacterial activity, by screening a large library of existing molecules. While not strictly a generative model, it demonstrates the power of AI to explore chemical space for novel therapeutics. Generative models take this a step further by designing the molecules.

- Personalized Medicine: In the future, generative AI could design drugs tailored to an individual's genetic makeup or specific disease profile, leading to highly effective and less toxic treatments.

2. Protein Design and Engineering

Proteins are the workhorses of biology, performing myriad functions from catalysis to structural support. Generative AI is revolutionizing their design.

- Generative Models for Protein Sequences and Structures: Inspired by the success of AlphaFold in predicting protein structures, generative models are now designing proteins from scratch. Diffusion models, in particular, are showing great promise in generating novel protein backbones that fold into stable, functional structures.

- Example: Research groups are using diffusion models to generate protein backbone structures and then designing sequences that fold into these structures, creating entirely novel proteins with desired shapes.

- Functional Protein Generation: Beyond just structure, the ultimate goal is to generate proteins with desired functions. This includes designing enzymes with enhanced catalytic activity or specificity for industrial processes (e.g., biofuel production, waste degradation) or creating highly effective therapeutic antibodies for various diseases.

- Example: Researchers are using LLM-like architectures, trained on vast protein sequence databases, to generate novel protein sequences that exhibit specific binding affinities or enzymatic activities. These "protein language models" can learn the grammar of protein function.

- Vaccine Design: Generative AI can propose novel antigens for vaccine development, potentially leading to more effective and broadly protective vaccines against rapidly evolving pathogens.

3. Materials Science and Discovery

The principles of molecular design extend to the macroscopic world of materials, where generative AI is accelerating the search for novel substances with extraordinary properties.

- Generative Models for Crystal Structures and Materials: Similar to molecular design, generative AI is being used to propose novel crystal structures or material compositions with specific electronic, mechanical, or thermal properties.

- Example: Models can generate new perovskite structures for solar cells, identify novel battery materials with higher energy density and faster charging capabilities, or even propose candidate superconductors with higher critical temperatures.

- Catalyst Design: Engineering catalysts for sustainable chemical reactions is critical for reducing energy consumption and waste. Generative AI can design catalysts with improved efficiency and selectivity.

- Superconductors & Semiconductors: Accelerating the search for materials with advanced electronic properties is crucial for next-generation computing and energy technologies. Generative models can explore vast compositional spaces to find promising candidates.

4. Retrosynthesis and Reaction Prediction

Generative AI is also transforming the synthetic chemistry pipeline itself.

- LLMs for Chemical Synthesis Planning: Large Language Models, fine-tuned on vast datasets of chemical reactions (e.g., patent databases, reaction databases like Reaxys or SciFinder), are being used to predict reaction outcomes or plan multi-step synthesis routes (retrosynthesis) more efficiently than traditional rule-based systems.

- Example: Models can take a target molecule and propose a sequence of known reactions that could lead to its synthesis from readily available starting materials, significantly reducing the time and expertise required for synthesis planning.

- Discovery of Novel Reactions: Beyond known reactions, generative models might even suggest entirely new chemical transformations that have not yet been discovered by humans, opening up new avenues for chemical synthesis.
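
The synthesis-planning idea above, working backward from a target until every precursor is readily available, can be sketched as a recursive search. In the toy below, a hand-written lookup table stands in for the learned model that would propose precursors in a real system. All molecule names are placeholders, not real chemistry.

```python
# Hypothetical one-step "reverse reactions": product -> list of precursor sets.
REVERSE_REACTIONS = {
    "target": [("intermediate_A", "reagent_X")],
    "intermediate_A": [("building_block_1", "building_block_2")],
}

# Hypothetical catalog of readily available starting materials.
AVAILABLE = {"reagent_X", "building_block_1", "building_block_2"}

def plan_route(molecule, max_depth=5):
    """Return a list of (product, precursors) steps, or None if no route is found."""
    if molecule in AVAILABLE:
        return []  # nothing to make; it can be purchased
    if max_depth == 0:
        return None
    for precursors in REVERSE_REACTIONS.get(molecule, []):
        route = []
        for p in precursors:
            sub = plan_route(p, max_depth - 1)
            if sub is None:
                break  # this disconnection fails; try the next one
            route.extend(sub)
        else:
            # Every precursor is reachable: append the step that makes `molecule`.
            return route + [(molecule, precursors)]
    return None

for product, precursors in plan_route("target"):
    print(" + ".join(precursors), "->", product)
```

The returned steps are ordered so that each intermediate is made before it is used. Practical planners add scoring, so that among many possible routes the search prefers shorter or higher-confidence ones.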

5. Integration with Robotics and Automation (AI-driven Labs)

The ultimate vision is a closed-loop "design-build-test" cycle. Generative AI designs a molecule or material, which is then automatically synthesized by robotic platforms, characterized by automated analytical instruments, and the experimental results are fed back to retrain and improve the generative model. This creates an autonomous scientific discovery engine.
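
The closed loop can be sketched in a few lines if every component is replaced by a stand-in. Below, a one-dimensional "design" is a single number, the "lab" is a hidden scoring function, and the "generative model" simply samples around the best design found so far. All of these are assumptions for illustration; a real system would retrain a model on the accumulated results rather than keep only the best point.

```python
import random

def hidden_assay(x):
    """Stand-in for a lab measurement; the loop treats it as a black box."""
    return -(x - 3.0) ** 2

def propose(center, spread, n, rng):
    """Stand-in 'generative model': sample candidates around the current best."""
    return [rng.gauss(center, spread) for _ in range(n)]

def run_closed_loop(rounds=10, batch=8, seed=0):
    rng = random.Random(seed)
    best_x, best_score = 0.0, hidden_assay(0.0)
    history = [best_score]
    for _ in range(rounds):
        candidates = propose(best_x, 1.0, batch, rng)          # design
        results = [(hidden_assay(x), x) for x in candidates]   # build + test
        score, x = max(results)
        if score > best_score:                                 # learn
            best_x, best_score = x, score
        history.append(best_score)
    return best_x, history

best_x, history = run_closed_loop()
print("best design:", best_x)
print("best score per round:", history)
```

The key property is that the best score never gets worse from round to round, because each round's experimental results feed directly into the next round's proposals.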

Key Challenges and Future Directions

While the promise is immense, significant hurdles remain:

- Validation & Experimental Verification: Generating novel designs in silico is one thing; experimentally validating their properties, efficacy, and synthesizability in vitro and in vivo is another. The gap between computational prediction and real-world behavior remains a major challenge. Bridging this gap requires tighter integration with high-throughput experimental platforms.

- Interpretability and Explainability (XAI): Understanding why a generative model proposes a particular molecule, protein, or material can provide invaluable scientific insights. Current models often operate as "black boxes," making it difficult to extract mechanistic understanding. Developing XAI techniques specific to scientific data is crucial.

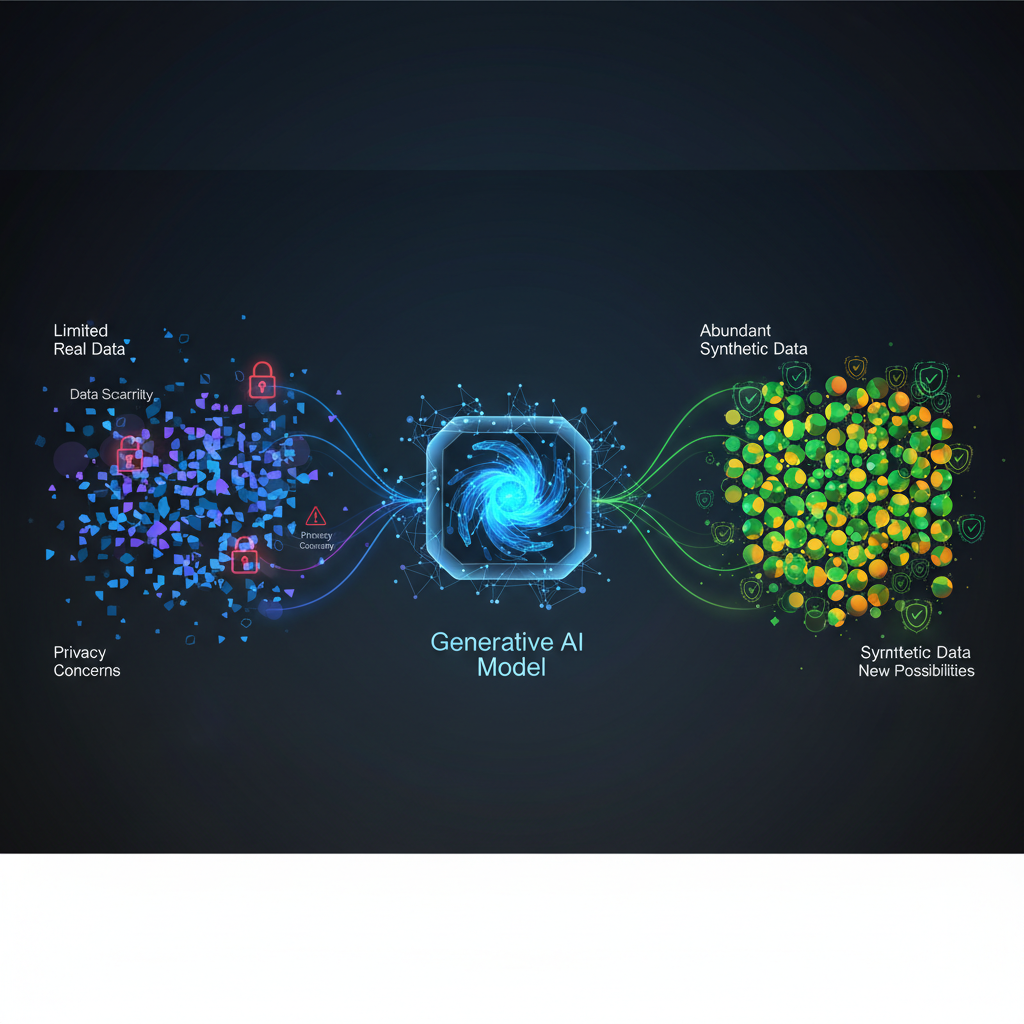

- Data Scarcity and Quality: High-quality, diverse, and sufficiently large datasets are crucial for training robust generative models, especially for complex biological systems where experimental data can be sparse and expensive to obtain. Data standardization and sharing initiatives are vital.

- Computational Cost: Training and running these sophisticated generative models, especially for large molecules or complex materials, can be computationally intensive, requiring significant hardware and energy resources.

- Ensuring Novelty and Diversity: While generative models can create novel compounds, ensuring they are truly diverse and not just minor variations of existing ones is important to avoid rediscovering known compounds. Techniques to encourage diversity in generation are an active area of research.

- Ethical and Safety Concerns: The ability to design novel molecules also raises ethical questions. How do we ensure that generated compounds are safe, effective, and not misused (e.g., for creating harmful substances or biological weapons)? Robust ethical guidelines and regulatory frameworks are essential.

- Synthesizability and Manufacturability: A generated molecule might have ideal properties in silico, but if it's impossible or prohibitively expensive to synthesize in the lab, its practical value is limited. Integrating synthesizability predictions into the generative process is a critical area of development.
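
Of the challenges above, novelty and diversity are the easiest to quantify. A common approach compares molecular fingerprints using Tanimoto similarity. The sketch below represents each fingerprint as a set of "on" bit indices. The fingerprints are made up, and the two metric definitions are one reasonable choice among several.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity between two sets of 'on' fingerprint bits."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def novelty(candidate, known):
    """1 minus the similarity to the nearest known compound."""
    return 1.0 - max(tanimoto(candidate, k) for k in known)

def mean_pairwise_distance(fingerprints):
    """Average Tanimoto distance over all unordered pairs in a generated batch."""
    n = len(fingerprints)
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    if not pairs:
        return 0.0
    total = sum(1.0 - tanimoto(fingerprints[i], fingerprints[j]) for i, j in pairs)
    return total / len(pairs)

# Hypothetical fingerprints (sets of bit indices), not derived from real molecules.
known = [{1, 2, 3, 4}, {2, 3, 5}]
generated = [{1, 2, 3, 4}, {1, 2, 3, 6}, {7, 8, 9}]

for fp in generated:
    print(sorted(fp), "novelty:", novelty(fp, known))
print("batch diversity:", mean_pairwise_distance(generated))
```

Here the first generated fingerprint is a rediscovery (novelty 0), the second is a close analog, and the third shares nothing with the known set. Filtering on a novelty threshold is a simple way to discard near-duplicates before experimental follow-up.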

Conclusion: A New Era of Discovery

Generative AI for scientific discovery and drug design is not merely an incremental improvement; it represents a paradigm shift in how we approach fundamental scientific challenges. By moving beyond passive data analysis to active, intelligent creation, these models are empowering researchers to explore previously inaccessible chemical and biological spaces, accelerating the pace of innovation across chemistry, biology, and materials science.

For AI practitioners, this field offers a rich landscape for applying cutting-edge machine learning techniques to real-world problems with profound impact. For enthusiasts, it highlights the transformative power of AI beyond consumer applications, showcasing its potential to address humanity's most pressing health, environmental, and technological needs.

As we continue to refine these models and integrate them more deeply into the experimental pipeline, we are on the cusp of a new era of discovery – one where intelligent machines work hand-in-hand with human scientists, unlocking unprecedented insights and ushering in a future defined by rapid, targeted, and impactful scientific breakthroughs. The journey has just begun, and the potential is truly limitless.