Multimodal Large Language Models: The Next Frontier in AI

Explore the revolutionary shift from text-only LLMs to Multimodal Large Language Models (MLLMs). Discover how MLLMs integrate images, audio, and more to mimic human cognition and redefine AI's capabilities.

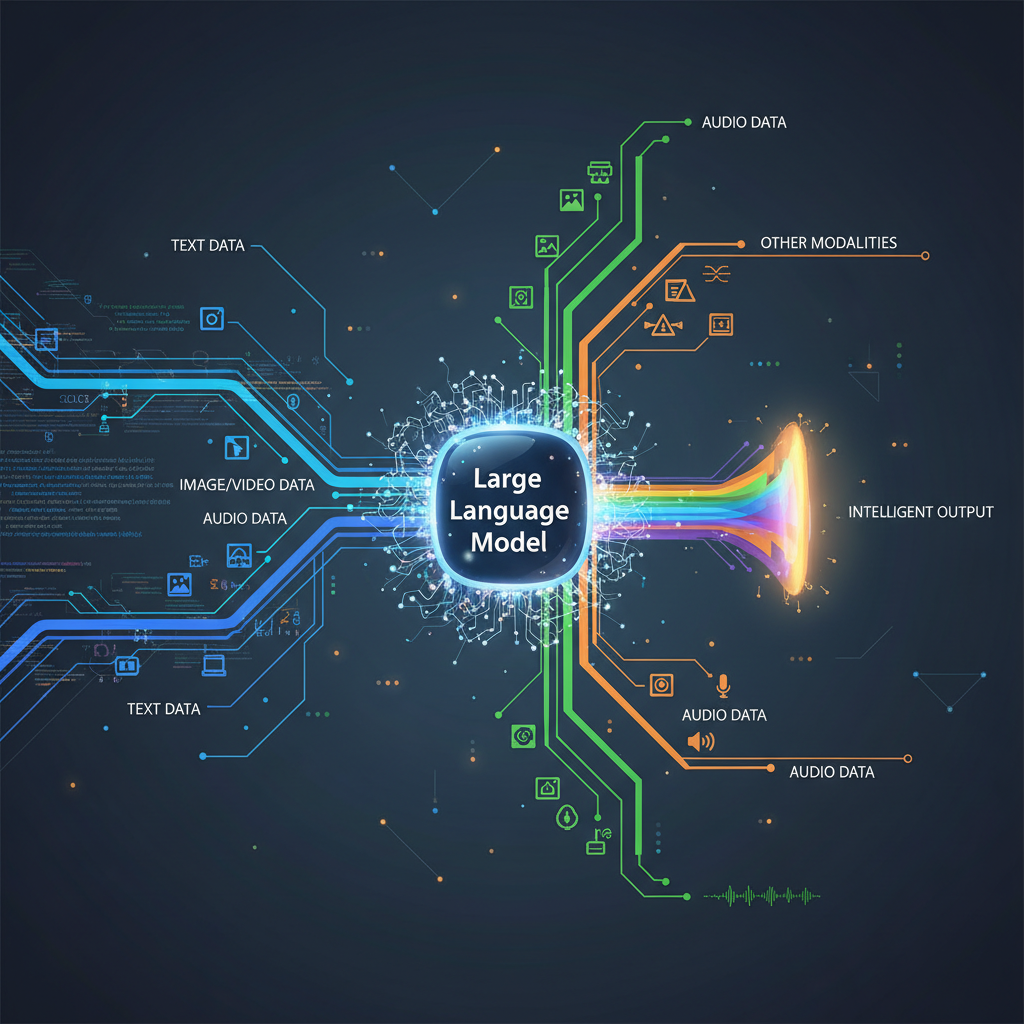

The world of Artificial Intelligence is in a constant state of exhilarating evolution. For the past few years, Large Language Models (LLMs) have captivated our imagination, demonstrating unprecedented capabilities in understanding, generating, and interacting with human language. From crafting compelling narratives to assisting with complex code, LLMs have redefined what's possible with text. However, human intelligence isn't confined to a single sense; we perceive and interact with the world through a rich tapestry of sights, sounds, and experiences. This inherent multimodality of human cognition has spurred the next great frontier in AI: Multimodal Large Language Models (MLLMs).

MLLMs represent a paradigm shift, moving beyond text-only processing to integrate and reason across diverse data types like images, audio, video, and even 3D data. This isn't merely about concatenating different inputs; it's about building AI systems that can truly "see," "hear," and "understand" the world in a more holistic, context-aware, and human-like manner. The recent emergence of models like GPT-4o, Gemini, LLaVA, and Fuyu-8B showcases this rapid advancement, pushing the boundaries of what AI can achieve and unlocking a universe of previously unimaginable applications.

The Dawn of Multimodal Intelligence: Why Now?

The surge in MLLM development isn't accidental; it's the convergence of several critical factors:

- Bridging the Human-AI Gap: Humans are inherently multimodal. We don't just read a recipe; we look at the ingredients, hear the sizzle, and smell the aroma. MLLMs aim to imbue AI with a similar, richer understanding of context and interaction, moving closer to artificial general intelligence.

- Data Abundance and Diversity: The digital age has flooded us with multimodal data – billions of images, videos, audio clips, and text documents. Advanced computational power and sophisticated data curation techniques now allow us to leverage this wealth of information for training increasingly complex models.

- Architectural Innovations: The transformer architecture, which underpins modern LLMs, has proven remarkably adaptable. Researchers have found ingenious ways to extend its attention mechanisms to process and fuse information from disparate modalities.

- Explosion of Practical Applications: The ability to process and generate across modalities opens up entirely new categories of applications, from enhanced accessibility tools to more intuitive human-robot interaction.

- Open-Source Momentum: The open-source community plays a vital role, with projects like LLaVA making powerful MLLMs accessible to a broader audience, accelerating research and development.

This confluence of factors positions MLLMs as one of the most exciting and impactful areas in AI today.

Architectural Evolution: From Fusion to Integration

The journey towards truly multimodal AI has seen significant architectural innovations, moving from loosely coupled systems to deeply integrated designs.

Early Approaches: Encoder-Decoder Fusion

Initial MLLMs often adopted an encoder-decoder architecture, where each modality had its dedicated encoder. For instance, an image might be processed by a Vision Transformer (ViT) and text by a traditional text encoder. The outputs of these encoders would then be concatenated or fused in some manner before being fed into a language model decoder.

- Example: Flamingo (DeepMind): Flamingo used a frozen, pre-trained vision encoder (an NFNet in the original paper) to extract visual features. These features were passed through a "Perceiver Resampler" module, which converted a variable number of visual features into a fixed number of visual tokens. Rather than being appended to the text sequence, these visual tokens were consumed by gated cross-attention layers inserted between the layers of a frozen language model. This allowed the LLM to condition its text generation on images interleaved with the text prompt.

- Example: BLIP-2 (Salesforce AI Research): BLIP-2 introduced the concept of a "Querying Transformer" (Q-Former) to bridge the gap between a frozen image encoder and a frozen LLM. The Q-Former learned to extract relevant visual features from the image encoder's output, which were then fed as soft prompts to the LLM. This approach was highly parameter-efficient, leveraging powerful pre-trained unimodal models without extensive retraining.
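Both the Perceiver Resampler and the Q-Former rest on the same basic idea: a fixed set of query vectors cross-attends over however many visual features the encoder produces, so the language model always receives the same number of visual tokens. The following is a minimal sketch of that idea only, not either paper's implementation. It uses plain Python lists to stay dependency-free, random numbers stand in for learned queries, and it omits the learned projections, multiple heads, and feed-forward layers a real module would have. The names `resample`, `DIM`, and `N_LATENTS` are illustrative assumptions.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def resample(visual_features, latent_queries):
    """Cross-attend a fixed set of queries over a variable number of features.

    visual_features: n_features x dim (n_features varies per image)
    latent_queries:  n_latents x dim  (fixed; learned in a real model)
    returns:         n_latents x dim
    """
    dim = len(latent_queries[0])
    keys_t = [list(col) for col in zip(*visual_features)]  # dim x n_features
    scores = matmul(latent_queries, keys_t)                # n_latents x n_features
    weights = [softmax([s / math.sqrt(dim) for s in row]) for row in scores]
    return matmul(weights, visual_features)                # n_latents x dim

def random_matrix(rows, cols, rng):
    """Gaussian noise used here as a stand-in for real features or parameters."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
DIM, N_LATENTS = 8, 4
queries = random_matrix(N_LATENTS, DIM, rng)  # stand-in for learned queries

small_image = random_matrix(16, DIM, rng)  # e.g. 16 patch features
large_image = random_matrix(49, DIM, rng)  # e.g. 49 patch features

out_small = resample(small_image, queries)
out_large = resample(large_image, queries)
print(len(out_small), len(out_large))  # both equal N_LATENTS
```

Whatever the input resolution, the output length is set by the number of queries, which is what keeps the cost on the language-model side predictable.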

These early models demonstrated impressive capabilities in tasks like visual question answering (VQA) and image captioning, but they often treated modalities somewhat separately before a final fusion step.

Current Trends: Deeply Integrated Architectures

The latest generation of MLLMs, exemplified by models like GPT-4o and Gemini, is moving towards more deeply integrated architectures. The goal is to treat all modalities as "first-class citizens" throughout the processing pipeline, allowing for richer, more nuanced interactions and reasoning.

- Shared Representations and Cross-Attention: Instead of separate encoders followed by simple concatenation, these models might employ more sophisticated cross-attention mechanisms at multiple layers. This allows information from different modalities to influence each other's representations much earlier and more frequently during processing.

- Unified Tokenization: A key challenge is representing diverse modalities (pixels, audio waveforms, 3D points) as tokens that can be processed uniformly by a transformer alongside text tokens. This involves developing effective "tokenizers" for non-textual data. For images, patches can be flattened into sequences of vectors; for audio, spectrograms can be treated similarly. The crucial aspect is embedding these diverse tokens into a shared latent space where the transformer can operate on them interchangeably.

- "Native" Multimodality: The aspiration is for models where the core transformer architecture itself is inherently multimodal, rather than being a language model with tacked-on visual or audio inputs. This could involve a single, large transformer that processes a stream of interleaved tokens from different modalities, learning to attend to and integrate information across them dynamically. GPT-4o, for instance, is described as a "natively multimodal" model, meaning it's trained across text, vision, and audio, and can output in all these modalities.
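To make the unified tokenization idea concrete, here is a small sketch of how image patches and text tokens can end up in one sequence with one embedding width. It is an illustration under simplifying assumptions, not the tokenizer of any model named above: the image is a toy 4x4 grayscale grid, the projection and embedding tables are random stand-ins for learned parameters, and `patchify`, `project`, `EMBED_DIM`, `PATCH`, and `VOCAB` are hypothetical names.

```python
import random

def patchify(image, patch):
    """Split an H x W grayscale image (list of rows) into flattened patches."""
    height, width = len(image), len(image[0])
    assert height % patch == 0 and width % patch == 0, "image must tile evenly"
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            flat = [image[top + r][left + c] for r in range(patch) for c in range(patch)]
            patches.append(flat)
    return patches

def project(vectors, weight):
    """Linear projection: each input vector times an (in_dim x out_dim) matrix."""
    return [[sum(v * w for v, w in zip(vec, col)) for col in zip(*weight)] for vec in vectors]

rng = random.Random(0)
EMBED_DIM, PATCH, VOCAB = 6, 2, 10

# Hypothetical parameters; a trained model would learn both of these.
patch_projection = [[rng.gauss(0, 1) for _ in range(EMBED_DIM)] for _ in range(PATCH * PATCH)]
text_embedding = [[rng.gauss(0, 1) for _ in range(EMBED_DIM)] for _ in range(VOCAB)]

image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # toy 4x4 image
text_ids = [3, 1, 7]                                              # toy token ids

image_tokens = project(patchify(image, PATCH), patch_projection)  # 4 tokens
text_tokens = [text_embedding[i] for i in text_ids]               # 3 tokens

# One sequence, one embedding width: the transformer sees both modalities alike.
sequence = text_tokens + image_tokens
print(len(sequence), len(sequence[0]))  # 7 tokens, each EMBED_DIM wide
```

Real systems also add positional information and usually modality markers, which this sketch leaves out.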

The shift towards deeper integration promises more coherent reasoning, better contextual understanding, and ultimately, more human-like interaction.

Beyond Description: Instruction Following and Multimodal Generation

The capabilities of MLLMs extend far beyond simple descriptive tasks. They are increasingly adept at complex instruction following and sophisticated multimodal generation.

Conversational MLLMs and Instruction Following

Early multimodal models excelled at tasks like image captioning ("A cat sitting on a keyboard") or visual question answering ("What color is the cat?" -> "Orange"). Modern MLLMs, however, can engage in multi-turn conversations that require reasoning across modalities and following complex instructions.

- Example Scenario:

  - User: (Uploads an image of a cluttered desk with a coffee mug, laptop, and books) "What's on this desk?"

  - MLLM: "I see a laptop, several books, a coffee mug, and some papers."

  - User: "Can you describe the coffee mug in more detail?"

  - MLLM: "It appears to be a white ceramic mug with a blue stripe around the rim, and it's about half full of a dark liquid, likely coffee."

  - User: "Suggest three ways to organize this desk, considering the items."

  - MLLM: "1. Use a laptop stand to free up space. 2. Stack the books neatly or use a bookend. 3. Consider a small tray for the mug and other small items to keep them contained."

  - User: "Now, imagine a small, whimsical creature is hiding somewhere on this desk. Generate a short story about it discovering the coffee."

  - MLLM: (Generates a story incorporating elements from the image and the new instruction).

This demonstrates the ability to maintain context, reason about spatial relationships, and creatively generate content based on multimodal input and instructions.
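One way to picture how context is maintained is to look at what a multi-turn multimodal conversation might look like as data. The sketch below is an assumption about a reasonable representation, not the message format of any particular model or API; `TextPart`, `ImagePart`, `Message`, `Conversation`, and the file name `desk.jpg` are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Union

@dataclass
class TextPart:
    text: str

@dataclass
class ImagePart:
    path: str  # hypothetical local file reference

@dataclass
class Message:
    role: str  # "user" or "assistant"
    parts: List[Union[TextPart, ImagePart]]

@dataclass
class Conversation:
    messages: List[Message] = field(default_factory=list)

    def add(self, role, *parts):
        """Append one turn made of any mix of text and image parts."""
        self.messages.append(Message(role, list(parts)))

    def context(self):
        """Everything the model would need to see for the next turn."""
        return [part for message in self.messages for part in message.parts]

chat = Conversation()
chat.add("user", ImagePart("desk.jpg"), TextPart("What's on this desk?"))
chat.add("assistant", TextPart("I see a laptop, several books, a coffee mug, and some papers."))
chat.add("user", TextPart("Can you describe the coffee mug in more detail?"))

# The image from turn one is still part of the context at turn three.
has_image = any(isinstance(p, ImagePart) for p in chat.context())
print(len(chat.messages), has_image)  # 3 True
```

Because the image stays in the history, a follow-up such as the mug question can be answered without the user uploading it again.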

Multimodal Generation: Any-to-Any

The holy grail of multimodal generation is "any-to-any": any combination of input modalities can lead to any combination of output modalities. Although this is still an active research area, significant progress has been made.

- Conditional Generation: MLLMs can generate new content conditioned on multimodal inputs.

  - Text + Image to Image: "Generate an image of a cat wearing a tiny hat, in the style of this painting." (User provides text and an image of a painting style).

  - Image + Text to Text: "Describe this scene and write a poem about the mood it evokes." (User provides an image).

  - Audio + Text to Image: "Generate an image of a bustling city street based on this audio clip and the prompt 'futuristic neon lights'."

- Image-to-Text-to-Image (and beyond): A common pattern involves an MLLM generating a rich description from an image, which can then be used as a prompt for a separate text-to-image model, allowing for style transfer, content variation, or generating new images "inspired" by the original.

- Video Summarization and Editing: MLLMs can analyze video content (visuals, audio, speech) to generate concise text summaries, identify key events, or even suggest edits based on semantic understanding.

- 3D Generation: Emerging MLLMs are beginning to process and generate 3D data, opening doors for architectural design, gaming, and virtual reality content creation.
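The image-to-text-to-image pattern mentioned in the list above is essentially function composition: one model describes, another renders. A minimal sketch of that wiring follows, assuming the two models are available as plain callables. The function `reimagine` and the stubs `fake_describe` and `fake_render` are hypothetical placeholders so that the example runs without any real model.

```python
from typing import Callable

Image = bytes  # placeholder type for encoded image data

def reimagine(
    source: Image,
    describe: Callable[[Image], str],
    render: Callable[[str], Image],
    style_hint: str = "",
) -> Image:
    """Caption an image, optionally extend the caption, then render a new image."""
    caption = describe(source)
    prompt = f"{caption}, {style_hint}" if style_hint else caption
    return render(prompt)

# Stub models so the sketch runs without any real MLLM or image generator.
def fake_describe(image: Image) -> str:
    return "a cluttered desk with a laptop and a coffee mug"

def fake_render(prompt: str) -> Image:
    return prompt.encode("utf-8")  # echoes the prompt instead of drawing

result = reimagine(b"raw-bytes", fake_describe, fake_render, style_hint="watercolor style")
print(result.decode("utf-8"))
# a cluttered desk with a laptop and a coffee mug, watercolor style
```

Keeping the two stages behind simple callables makes it easy to swap either model, at the cost of losing any visual detail the caption fails to mention.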

The ability to generate across modalities is transformative, moving AI from mere analysis to active creation and interaction with the digital and physical world.

Practical Applications: Transforming Industries and Experiences

The theoretical advancements in MLLMs are rapidly translating into tangible, impactful applications across various sectors.

1. Enhanced Customer Service and Support

- Visual Troubleshooting: Imagine a customer struggling with a broken appliance. Instead of trying to describe the problem over the phone, they can upload a video or image. An MLLM can instantly analyze the visual cues, diagnose the issue, provide step-by-step repair instructions (perhaps with annotated diagrams), or even connect them with the right specialist.

- Multimodal FAQs: Companies can create dynamic FAQs where users can ask questions by pointing their camera at a product, diagram, or error message, and the MLLM provides relevant information from manuals or support documents.

2. Creative Content Generation and Editing

- Personalized Marketing: MLLMs can analyze a user's past purchases (text), browsing behavior (text/visuals), and even social media activity (multimodal) to generate highly personalized ad copy, product images, and short video clips that resonate with their specific preferences.

- Storyboarding and Concept Art: Artists and designers can input rough sketches, mood board images, and textual descriptions. The MLLM can then generate detailed character designs, environment concepts, or even initial scene renders, dramatically accelerating the creative process.

- Dynamic Video Production: MLLMs can summarize long video footage, identify emotional peaks, or even suggest alternative cuts and transitions based on the visual and auditory content, making video editing more efficient and intelligent.

3. Accessibility and Assistive Technologies

- Real-time Visual Descriptions: For visually impaired individuals, MLLMs can provide real-time audio descriptions of their surroundings, identifying objects, reading signs, interpreting complex charts, or even describing facial expressions of people they are interacting with.

- Sign Language Interpretation: Advanced MLLMs could potentially translate sign language videos into spoken or written text, and vice-versa, fostering more inclusive communication.

- Multimodal Learning Tools: Educational platforms can leverage MLLMs to create interactive content that adapts to different learning styles. Students could ask questions about diagrams, listen to explanations of complex concepts, or even generate visual aids based on textual input.

4. Robotics and Embodied AI

- Enhanced Scene Understanding: Robots equipped with MLLMs can interpret complex commands like "pick up the red mug next to the book on the top shelf." They can understand not just the objects but their spatial relationships and the context of the instruction.

- Natural Human-Robot Interaction: MLLMs enable robots to understand not only spoken commands but also gestures, facial expressions, and vocal tone, leading to more intuitive and empathetic interactions. This is crucial for collaborative robotics in manufacturing or service industries.

5. Healthcare and Diagnostics

- Medical Image Analysis: MLLMs can assist radiologists and pathologists by combining visual analysis of X-rays, MRIs, CT scans, or pathology slides with patient history (textual data) to provide more comprehensive diagnostic insights, identify subtle anomalies, and even predict disease progression.

- Patient Monitoring: Analyzing video feeds for fall detection, audio for cough analysis or changes in breathing patterns, and integrating this with sensor data and electronic health records can provide a holistic view of a patient's health, enabling proactive intervention.

6. Education

- Interactive Tutors: An MLLM-powered tutor could understand a student's handwritten notes, verbal questions, and even infer their confusion from their facial expressions or tone of voice, tailoring explanations and examples to their individual needs and learning pace.

- Content Generation for Learning: Teachers can use MLLMs to generate diverse learning materials, such as explanations of scientific diagrams, historical context for images, or even interactive simulations based on textual descriptions.

Challenges and the Road Ahead

Despite their incredible promise, MLLMs face significant challenges that researchers are actively addressing:

- Data Scarcity and Alignment: Creating truly multimodal, aligned datasets at scale is incredibly difficult. It's not enough to have images and text; the two must actually correspond, with the text accurately describing what the image shows. Most current MLLMs still rely heavily on large text corpora, aligning other modalities to them.

- Computational Cost: Training and deploying state-of-the-art MLLMs require immense computational resources, making them expensive to develop and run. Techniques like parameter-efficient fine-tuning (PEFT), quantization, and distillation are crucial for broader accessibility.

- Hallucinations and Grounding: MLLMs can still "hallucinate," describing details that are not present in an image or generating text that isn't fully consistent with the visual input. Ensuring factual grounding and consistency across modalities remains a critical research area.

- Ethical Considerations: Bias present in training data can manifest in discriminatory outputs across modalities. For example, an MLLM might misinterpret certain visual cues based on racial or gender stereotypes. Privacy concerns surrounding the processing of sensitive visual or audio data are also paramount.

- Reasoning and Causality: While MLLMs are excellent at pattern recognition and correlation, true multimodal reasoning, understanding causality, and performing complex problem-solving across modalities are still active research areas. For example, a model may describe what happened in a video without being able to explain why it happened.

- Real-time Interaction: Reducing latency for real-time conversational and interactive MLLM applications is crucial for seamless user experiences, especially in scenarios like live visual assistance or human-robot interaction.
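Of the cost-reduction techniques listed above, quantization is the easiest to show in a few lines. The sketch below is a simplified illustration, assuming symmetric per-tensor int8 quantization on a toy list of weights. Production schemes are typically per-channel or per-group and more careful about outliers, and the 8-billion-parameter figure is a hypothetical model size chosen only for the arithmetic.

```python
import random

def quantize_int8(weights):
    """Symmetric per-tensor quantization to the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map int8 codes back to approximate floating-point weights."""
    return [q * scale for q in quantized]

rng = random.Random(0)
weights = [rng.gauss(0.0, 0.02) for _ in range(1000)]  # toy weight tensor

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Rounding error is bounded by half a quantization step.
assert max_error <= scale / 2 + 1e-12

# Weight-only memory estimate for a hypothetical 8-billion-parameter model.
params = 8_000_000_000
for name, bytes_per_param in [("float32", 4), ("float16", 2), ("int8", 1)]:
    print(f"{name}: {params * bytes_per_param / 1e9:.0f} GB")
```

The estimate covers weights only; activations, caches, and any vision or audio encoders add to the real footprint.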

Conclusion

The field of Multimodal Large Language Models is at a pivotal moment, rapidly transitioning from theoretical exploration to practical deployment. For AI practitioners, understanding their capabilities, architectural nuances, and the underlying challenges is crucial for building the next generation of intelligent applications that can truly perceive and interact with the world. For enthusiasts, MLLMs offer a fascinating glimpse into a future where AI systems are more intuitive, empathetic, and capable of understanding the rich, multifaceted nature of human experience. As MLLMs continue to evolve, they promise to unlock unprecedented levels of creativity, efficiency, and accessibility, fundamentally reshaping how we interact with technology and the world around us. The journey towards truly human-like AI, one that can see, hear, and understand, has only just begun.