Revolutionizing QA: AI-Powered Generative Test Case and Data Synthesis

Traditional QA struggles with complex software and rapid release cycles. This post explores how Generative AI is transforming software testing by automating and enhancing the creation of diverse, relevant test cases and data, moving beyond mere test automation.

The landscape of software development is in constant flux, marked by accelerating release cycles, increasingly complex architectures, and an ever-growing demand for flawless user experiences. At the heart of delivering high-quality software lies robust Quality Assurance (QA) and Quality Control (QC). Yet, traditional QA methodologies often struggle to keep pace. The manual creation of comprehensive test cases and realistic test data is a labor-intensive, time-consuming, and often bottleneck-inducing process. It's here that Artificial Intelligence, particularly the burgeoning field of Generative AI, is poised to revolutionize how we approach software testing.

This post delves into AI-Powered Generative Test Case and Data Synthesis, an innovative approach leveraging cutting-edge AI technologies to automate and enhance the creation of testing artifacts. This isn't just about automating existing tests; it's about intelligently generating new, diverse, and highly relevant test cases and data that can uncover hidden bugs, improve coverage, and accelerate the entire development lifecycle.

The Bottleneck: Manual Test Creation in a Rapid World

Before diving into the AI solution, let's acknowledge the problem. Modern software development, driven by Agile and DevOps principles, demands speed and agility. However, the manual creation of test cases and test data often lags behind:

- Time-Consuming: Crafting detailed test steps, expected results, and pre-conditions for every feature is a significant undertaking.

- Data Scarcity/Sensitivity: Obtaining sufficient, diverse, and realistic test data, especially for complex scenarios or sensitive information, is challenging. Using production data directly poses severe privacy and compliance risks (e.g., GDPR, HIPAA).

- Limited Coverage: Human testers, no matter how skilled, can only conceive a finite number of scenarios. Edge cases, obscure interactions, and unexpected data combinations are often missed.

- Maintenance Overhead: As applications evolve, test cases and data need constant updates, leading to significant maintenance costs.

- Scalability Issues: Scaling testing efforts to match the growth of microservices architectures and distributed systems is incredibly difficult with manual methods.

These challenges highlight a critical need for a more intelligent, automated approach to test artifact generation.

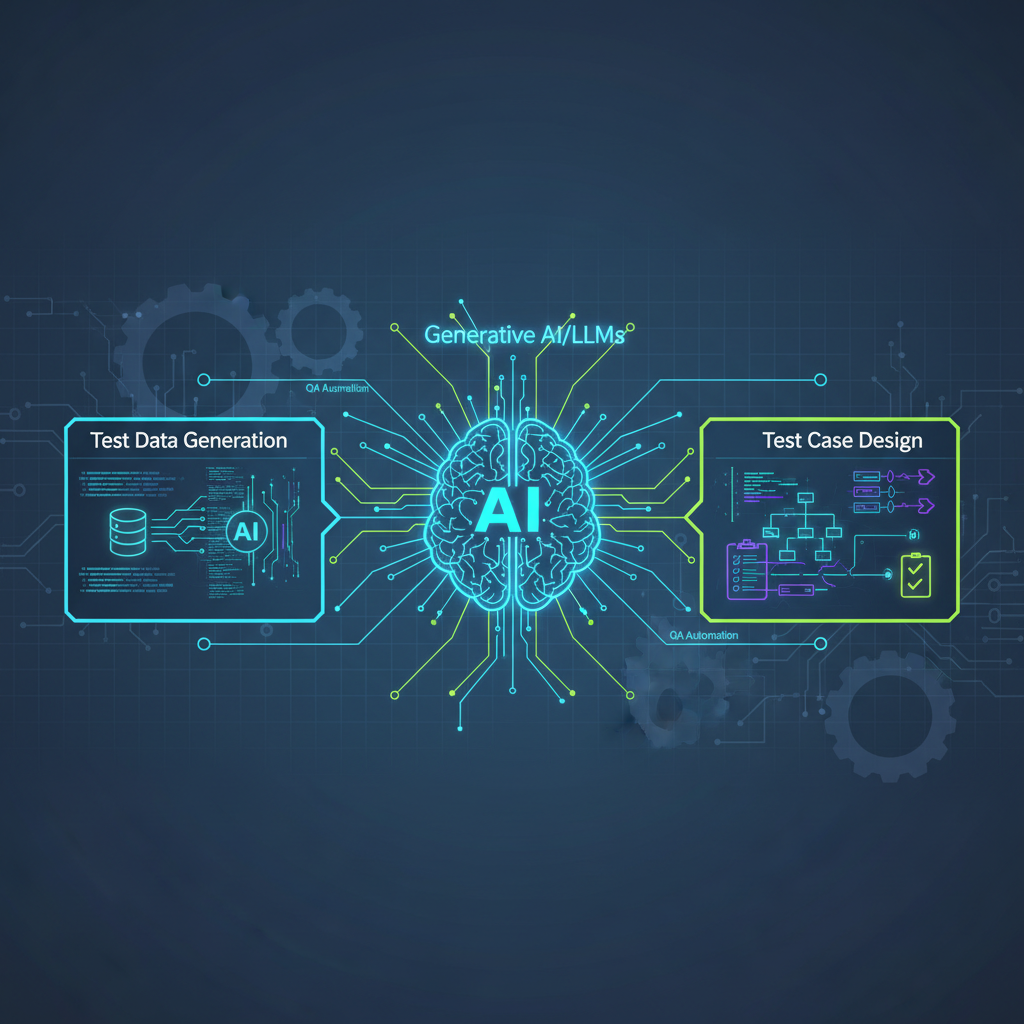

What is AI-Powered Generative Test Case and Data Synthesis?

At its core, this approach harnesses the power of various AI models to automatically produce two fundamental components of software testing:

- Test Cases: These are detailed, executable scenarios that outline specific actions, conditions, and expected outcomes. AI can generate these from high-level requirements, user stories, or even existing code, either in structured formats like Gherkin or as plain-language steps.

- Test Data: This encompasses realistic, diverse, and often synthetic datasets that mimic production data without exposing sensitive information. This can range from structured database records to unstructured text, images, audio, and complex event sequences.

The goal is to empower QA teams to shift from manual, reactive test creation to proactive, intelligent generation, allowing them to focus on higher-value activities like exploratory testing and complex scenario validation.

Why Now? The Convergence of AI and QA Needs

The timing for this technological shift couldn't be better, driven by several key factors:

- Explosion of Generative AI: The rapid advancements in Large Language Models (LLMs) like GPT-3/4, Llama, and Gemini have made text generation incredibly sophisticated. These models can understand context, generate coherent narratives, and even write code, making them ideal for crafting human-readable test scenarios and scripts.

- Data Privacy & Compliance: With stringent regulations like GDPR, CCPA, and HIPAA, generating synthetic yet realistic test data is no longer a luxury but a necessity. AI provides the means to create statistically similar data while greatly reducing the risk of exposing real user information.

- Shift-Left Testing Imperative: Catching bugs early is always cheaper. Automating test case and data generation allows testing to begin much earlier in the development cycle, even before full implementation, aligning perfectly with shift-left strategies.

- Complexity of Modern Systems: Microservices, cloud-native applications, and complex integrations make manual end-to-end testing a nightmare. AI can analyze system dependencies and generate comprehensive integration tests that humans might overlook.

- Agile & DevOps Demands: Rapid release cycles demand equally rapid test creation and execution. Manual methods simply can't keep up with continuous integration and continuous deployment (CI/CD) pipelines.

- Addressing Coverage Gaps: Automated generation can explore a far wider range of inputs and scenarios than manual test design, systematically probing edge cases, boundary conditions, and potential vulnerabilities that might otherwise be missed.

Key Technologies & Methodologies Powering Generative Testing

This isn't a single AI solution but rather a synergistic combination of various advanced techniques:

1. Large Language Models (LLMs)

- Application: LLMs are the workhorses for natural language processing tasks in generative testing. They excel at:

  - Requirements to Test Cases: Converting user stories, functional specifications, or even informal notes into structured test cases (e.g., Gherkin's Given/When/Then format).

  - API Test Payload Generation: Crafting diverse JSON or XML payloads for API endpoints, including valid, invalid, and edge-case data.

  - Test Script Generation: Generating basic UI automation scripts (e.g., Selenium, Playwright) or unit test stubs based on function signatures or UI elements.

  - Data Validation Queries: Generating SQL queries or other data manipulation language (DML) statements for validating data integrity.

- Mechanism: LLMs are pre-trained on vast amounts of text data, allowing them to understand language patterns, context, and domain-specific terminology. For generative testing, they are either fine-tuned on, or prompted with examples drawn from, existing test cases, documentation, codebases, and bug reports, so that their output follows the specific patterns and requirements of a project.

- Example: An LLM fine-tuned on a company's e-commerce platform documentation could take a prompt like "User adds item to cart and proceeds to checkout" and generate:

```gherkin
Feature: Checkout Process

  Scenario: Successful purchase of a single item
    Given a user is logged in
    And the user has an "iPhone 15" in their cart
    When the user proceeds to checkout
    And enters valid shipping address "123 Main St, Anytown, CA"
    And selects "Standard Shipping"
    And enters valid credit card details
    And confirms the order
    Then the order should be placed successfully
    And an order confirmation email should be sent to the user
    And the user's cart should be empty
```
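The requirements-to-Gherkin flow described above can be sketched as a small pipeline: build a few-shot prompt from a user story, call a model, and refuse output that is not structurally valid Gherkin. This is a minimal sketch under stated assumptions: `llm` is any callable that takes a prompt string and returns text (plug in your provider's client), and the few-shot example, prompt wording, and validation rules are hypothetical illustrations rather than a standard.

```python
import re
from typing import Callable

# Hypothetical few-shot example; in practice it would come from the project's own suite.
FEW_SHOT_EXAMPLE = """Feature: Login
  Scenario: Valid credentials
    Given a registered user
    When the user logs in with a valid password
    Then the dashboard should be displayed"""

# Step keywords; only Given/When/Then carry ordering, And/But continue the previous step.
STEP = re.compile(r"^(Given|When|Then|And|But)\b")
ORDER = {"Given": 0, "When": 1, "Then": 2}


def build_prompt(user_story: str) -> str:
    """Few-shot prompt: one worked example followed by the new story."""
    return (
        "Convert the user story into Gherkin. Output only Gherkin.\n\n"
        f"Example:\n{FEW_SHOT_EXAMPLE}\n\n"
        f"User story: {user_story}\n"
    )


def validate_gherkin(text: str) -> list[str]:
    """Return structural problems in generated Gherkin (empty list = passed)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    problems = []
    if not lines or not lines[0].startswith("Feature:"):
        problems.append("missing 'Feature:' header")
    scenarios: list[list[str]] = []  # primary step keywords, one list per scenario
    for ln in lines:
        if ln.startswith("Scenario:"):
            scenarios.append([])
        elif scenarios and (m := STEP.match(ln)) and m.group(1) in ORDER:
            scenarios[-1].append(m.group(1))
    if not scenarios:
        problems.append("no 'Scenario:' found")
    for i, kws in enumerate(scenarios, start=1):
        ranks = [ORDER[k] for k in kws]
        if ranks != sorted(ranks):
            problems.append(f"scenario {i}: steps out of Given/When/Then order")
        if "When" not in kws or "Then" not in kws:
            problems.append(f"scenario {i}: missing When or Then")
    return problems


def generate_test_cases(user_story: str, llm: Callable[[str], str]) -> str:
    """Ask the model for Gherkin and refuse output that fails the structural checks."""
    output = llm(build_prompt(user_story))
    problems = validate_gherkin(output)
    if problems:
        raise ValueError("; ".join(problems))
    return output


if __name__ == "__main__":
    # Stand-in for a real model client: always returns the few-shot example.
    fake_llm = lambda prompt: FEW_SHOT_EXAMPLE
    print(generate_test_cases("User adds item to cart and proceeds to checkout", fake_llm))
```

Passing these checks only means the output is well-formed; whether the steps match the intended business behavior still needs human review.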

2. Generative Adversarial Networks (GANs) & Variational Autoencoders (VAEs)

- Application: These models are primarily used for generating synthetic, realistic test data, especially for complex data types.

  - GANs: Excel at generating data that closely mimics the statistical properties of real data. This is particularly useful for images, time-series data (e.g., sensor readings), and complex structured datasets like customer profiles or financial transactions.

  - VAEs: Good for learning a compressed, latent representation of data, which can then be used to generate new, similar samples. They are often used for structured data generation and anomaly detection.

- Mechanism:

  - GANs: Consist of two neural networks: a Generator that creates synthetic data, and a Discriminator that tries to distinguish between real and synthetic data. They are trained in a competitive game: the Generator tries to fool the Discriminator, and the Discriminator tries to get better at identifying fakes. This adversarial process drives the Generator to produce increasingly realistic data.

  - VAEs: Learn to encode input data into a lower-dimensional latent space and then decode it back. By sampling from this latent space, new, similar data can be generated. VAEs provide a more structured way to control the characteristics of the generated data compared to GANs.

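- Example (GANs): A GAN trained on a dataset of real customer profiles (names, addresses, purchase history, demographics) can generate new, fictional customer profiles whose statistical properties closely match the real ones, which makes them well suited to testing a CRM system. Because generative models can memorize and reproduce individual training records, the synthetic output should still be checked for near-copies before it is treated as privacy-safe.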
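Whatever model produces the synthetic records, two basic checks are worth running before they reach a test environment: do the synthetic records preserve the real data's statistics, and does any synthetic record simply copy a real one? The sketch below assumes records are dicts with hashable values; the field names, the 10% tolerance, and the exact-match memorization test are illustrative choices, and production-grade evaluation would use stronger measures (distribution distances, nearest-neighbor distances, membership-inference tests).

```python
from statistics import mean, stdev


def marginal_drift(real: list[dict], synth: list[dict], field: str) -> tuple[float, float]:
    """Relative difference in mean and standard deviation of one numeric field."""
    r = [row[field] for row in real]
    s = [row[field] for row in synth]
    # Assumes the real mean and std are non-zero (otherwise compare absolute differences).
    return (abs(mean(s) - mean(r)) / abs(mean(r)),
            abs(stdev(s) - stdev(r)) / stdev(r))


def exact_copies(real: list[dict], synth: list[dict]) -> list[dict]:
    """Synthetic rows that reproduce a real row verbatim, a sign of memorization."""
    seen = {tuple(sorted(row.items())) for row in real}
    return [row for row in synth if tuple(sorted(row.items())) in seen]


def passes_checks(real: list[dict], synth: list[dict],
                  numeric_fields: list[str], tol: float = 0.10) -> bool:
    """True if every mean/std stays within `tol` and no real record is copied."""
    drift_ok = all(d <= tol
                   for f in numeric_fields
                   for d in marginal_drift(real, synth, f))
    return drift_ok and not exact_copies(real, synth)


if __name__ == "__main__":
    real = [{"age": 30, "spend": 100.0}, {"age": 40, "spend": 200.0}, {"age": 50, "spend": 300.0}]
    synth = [{"age": 32, "spend": 105.0}, {"age": 42, "spend": 205.0}, {"age": 52, "spend": 305.0}]
    print(passes_checks(real, synth, ["age", "spend"]))
```

Matching marginal statistics is a weak guarantee: it says nothing about correlations between fields, which is exactly what GANs and VAEs are meant to capture, so this is a floor rather than a full evaluation.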

3. Reinforcement Learning (RL)

- Application: RL is powerful for guiding the generation process to achieve specific testing goals, especially in dynamic environments.

  - Intelligent Fuzzing: An RL agent can learn to generate sequences of inputs that are more likely to uncover vulnerabilities or crashes in an application than random fuzzing.

  - Test Sequence Optimization: Guiding the generation of complex user interaction paths to maximize code coverage or explore specific application states.

  - Adaptive Test Generation: Learning from previous test runs to generate new tests that target areas with low coverage or high bug density.

- Mechanism: An RL agent interacts with the application under test (the "environment"). It takes actions (generates test inputs), observes the application's state, and receives a reward signal (e.g., increased code coverage, detection of a crash, reaching a specific application state). Through trial and error, the agent learns an optimal policy for generating tests that maximize its cumulative reward.

- Example: An RL agent could be trained to test a complex web application. Its actions might be clicking buttons, filling forms, or navigating pages. The reward could be the amount of new code covered or the number of unique error messages encountered. Over time, it learns to generate test sequences that effectively explore the application's functionality.
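The action/reward loop described above can be illustrated in its simplest form as an epsilon-greedy multi-armed bandit that learns which input-mutation strategy tends to uncover new branches. A bandit is the one-state special case of RL, so unlike the full agent described above it does not condition on application state. Everything here is hypothetical: `toy_program` stands in for the application under test, the four mutation strategies are the "actions", and the reward is the number of newly covered branch IDs.

```python
import random

ALL_BRANCHES = {"entry", "long", "json_like", "digits", "negative"}


def toy_program(s: str) -> set[str]:
    """Hypothetical program under test: returns the branch IDs that input s executes."""
    hit = {"entry"}
    if len(s) > 4:
        hit.add("long")
        if s.startswith("{"):
            hit.add("json_like")
    if any(c.isdigit() for c in s):
        hit.add("digits")
        if "-" in s:
            hit.add("negative")
    return hit


# The bandit's "arms": hypothetical input-mutation strategies.
STRATEGIES = {
    "append_digit": lambda s: s + random.choice("0123456789"),
    "prepend_brace": lambda s: "{" + s,
    "append_dash": lambda s: s + "-",
    "double": lambda s: s * 2,
}


def fuzz(steps: int = 500, epsilon: float = 0.1, seed: int = 0):
    random.seed(seed)                          # reproducible run
    value = {a: 0.0 for a in STRATEGIES}       # running mean reward per strategy
    count = {a: 0 for a in STRATEGIES}
    covered: set[str] = set()
    corpus = ["a"]                             # inputs that found new branches
    for _ in range(steps):
        if random.random() < epsilon:
            action = random.choice(list(STRATEGIES))   # explore
        else:
            action = max(value, key=value.get)          # exploit best estimate
        candidate = STRATEGIES[action](random.choice(corpus))
        new = toy_program(candidate) - covered
        reward = len(new)                               # reward: newly covered branches
        if new:
            covered |= new
            corpus.append(candidate)
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # incremental mean
    return covered, value


if __name__ == "__main__":
    covered, value = fuzz()
    print(sorted(covered), value)
```

Because coverage saturates, rewards shrink over time; real coverage-guided fuzzers typically add richer state (the corpus, coverage maps) and non-stationary value estimates on top of this basic explore/exploit loop.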

4. Constraint Satisfaction & Symbolic AI

- Application: While generative models create the raw output, symbolic AI ensures that the generated test cases and data adhere to strict business rules, data types, and referential integrity constraints.

- Mechanism: Rule engines, constraint solvers, and knowledge graphs are used to validate and refine AI-generated outputs. For instance, if an LLM generates a customer age, a constraint solver can ensure it's within a valid range (e.g., 18-120). If a GAN generates transaction data, symbolic AI can ensure that account balances remain consistent.

- Example: An LLM might generate a test case for a banking application where a user tries to transfer funds. A constraint satisfaction system would ensure that the source account has sufficient funds, the destination account exists, and the transfer amount is within daily limits, correcting or rejecting invalid scenarios generated by the LLM.
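The banking example can be sketched with plain Python rules standing in for a rule engine or constraint solver. Each generated scenario is checked against the constraints; if the model claimed success for a transfer that breaks a rule, the scenario is relabeled as a negative test, and if it claimed failure without breaking any rule it is rejected as ambiguous. The account data, the 5,000 daily limit, and the `Transfer` fields are hypothetical.

```python
from dataclasses import dataclass

ACCOUNTS = {"ACC-1": 1200.00, "ACC-2": 50.00}   # hypothetical balances by account ID
DAILY_LIMIT = 5000.00                            # hypothetical per-transfer daily cap


@dataclass
class Transfer:
    source: str
    destination: str
    amount: float
    expect_success: bool  # what the generated test case claims should happen


def violations(t: Transfer) -> list[str]:
    """List every business rule the scenario breaks."""
    found = []
    if t.source not in ACCOUNTS:
        found.append("source account does not exist")
    elif ACCOUNTS[t.source] < t.amount:
        found.append("insufficient funds")
    if t.destination not in ACCOUNTS:
        found.append("destination account does not exist")
    if t.amount <= 0:
        found.append("amount must be positive")
    if t.amount > DAILY_LIMIT:
        found.append("amount exceeds daily limit")
    return found


def triage(t: Transfer) -> str:
    """Decide whether to keep, correct, or reject a generated scenario."""
    broken = violations(t)
    if t.expect_success and broken:
        # The model claimed success for an impossible transfer: flip the expected
        # outcome so the scenario becomes a valid negative test.
        return "relabel_as_negative"
    if not t.expect_success and not broken:
        return "reject_unclear_negative"  # claims failure but breaks no known rule
    return "keep"


if __name__ == "__main__":
    print(triage(Transfer("ACC-2", "ACC-1", 100.0, expect_success=True)))
```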

5. Automated Program Repair (APR) / Code Generation

- Application: Beyond natural-language test cases, the code-generation ability of LLMs (the same capability that underpins automated program repair) can be extended to produce test code directly, such as unit tests, integration test stubs, and mock objects, from function signatures, API specifications, or existing code.

- Mechanism: Similar to how LLMs generate application code, they can be fine-tuned to understand testing frameworks and best practices to produce executable test scripts.
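Generating tests "from function signatures" starts with extracting that context. The sketch below uses Python's standard `inspect` module to turn a function's signature and docstring into a prompt for an LLM; the model call itself is left out. The `apply_discount` function and the prompt wording are hypothetical examples.

```python
import inspect


def apply_discount(price: float, percent: float = 10.0) -> float:
    """Return price reduced by percent; raise ValueError if percent is outside [0, 100]."""
    if not 0 <= percent <= 100:
        raise ValueError("percent out of range")
    return round(price * (1 - percent / 100), 2)


def build_test_prompt(func, framework: str = "pytest") -> str:
    """Assemble the context an LLM needs to write unit tests for `func`."""
    signature = inspect.signature(func)             # parameters, defaults, annotations
    contract = inspect.getdoc(func) or "(no docstring)"
    return (
        f"Write {framework} unit tests for `{func.__name__}{signature}`.\n"
        f"Documented contract: {contract}\n"
        "Cover typical values, boundary values, and the documented error cases.\n"
        "Return only Python code."
    )


if __name__ == "__main__":
    print(build_test_prompt(apply_discount))
```

The docstring matters as much as the signature: without a stated contract, the model can only guess at expected outputs, which is where plausible but wrong assertions tend to creep in.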

Practical Applications & Use Cases

The theoretical underpinnings translate into tangible benefits across the QA spectrum:

- Requirements to Test Case Generation: Imagine feeding your product backlog or Confluence pages directly into an AI. The AI then generates a comprehensive suite of functional test cases, complete with steps, expected results, and preconditions, in a format like Gherkin or JSON, ready for review and execution (Gherkin scenarios still need step definitions before a runner such as Cucumber can execute them).

- Synthetic Data for UI Testing: Instead of manually entering data or relying on static fixtures, generate realistic user profiles, addresses, order histories, and product catalogs to populate forms and test complex workflows in a UI. This ensures diverse data inputs without using sensitive production information.

- API Test Data Generation: Automatically create diverse JSON/XML payloads for REST or GraphQL APIs, covering valid inputs, edge cases (empty strings, nulls, boundary values), invalid data types, and error conditions. This significantly accelerates API testing.

- Performance Testing Data: Generate vast volumes of unique, realistic data to simulate heavy user loads, concurrent transactions, and diverse user behaviors, crucial for stress testing and capacity planning.

- Security Testing (AI-driven Fuzzing): AI-powered fuzzers learn application behavior and generate malformed or unexpected inputs more intelligently than traditional random fuzzers. This can uncover vulnerabilities like buffer overflows, injection flaws, or unexpected application states.

- Database Seeding: Populate development and testing databases with synthetic data that maintains referential integrity, data relationships, and realistic distributions, ensuring a robust test environment.

- Regression Test Suite Augmentation: AI can analyze existing test suites, identify areas of low coverage, and generate new tests to fill those gaps, improving the overall robustness of regression testing.

- Test Data Anonymization/Masking: While not strictly generation, AI can intelligently anonymize or mask sensitive real production data while preserving its statistical properties and relationships, making it safe for testing environments.
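The database-seeding use case above can be sketched with the standard library alone: generate parent rows first, then child rows that only reference parents that exist, so referential integrity holds by construction, and draw values from a skewed distribution rather than a flat one. The two-table schema, the value ranges, and the log-normal order totals are illustrative assumptions, and SQLite is used only because it ships with Python.

```python
import random
import sqlite3


def seed_database(conn: sqlite3.Connection, n_customers: int = 50,
                  max_orders: int = 5, seed: int = 42) -> None:
    rng = random.Random(seed)  # fixed seed: the same data on every run
    conn.executescript("""
        PRAGMA foreign_keys = ON;
        CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT UNIQUE NOT NULL);
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY,
            customer_id INTEGER NOT NULL REFERENCES customers(id),
            total REAL NOT NULL CHECK (total > 0)
        );
    """)
    customers = [(i, f"user{i}@example.test") for i in range(1, n_customers + 1)]
    conn.executemany("INSERT INTO customers VALUES (?, ?)", customers)
    orders = []
    for customer_id, _ in customers:
        # Orders only point at customers inserted above, so every
        # foreign key is valid by construction.
        for _ in range(rng.randint(0, max_orders)):
            # Log-normal totals give a right-skewed spread of basket sizes.
            total = max(0.01, round(rng.lognormvariate(3.5, 0.8), 2))
            orders.append((customer_id, total))
    conn.executemany("INSERT INTO orders (customer_id, total) VALUES (?, ?)", orders)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    seed_database(conn)
    print(conn.execute("SELECT COUNT(*), ROUND(AVG(total), 2) FROM orders").fetchone())
```

Seeding with a fixed random seed keeps test runs reproducible; changing the seed gives a fresh but equally consistent dataset.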

Challenges and Considerations

While the promise is immense, implementing AI-powered generative testing comes with its own set of challenges:

- "Hallucinations" and Accuracy: LLMs, in particular, can generate plausible but incorrect test cases or data. Human oversight, validation, and a robust feedback loop are crucial to catch these "hallucinations."

- Context Understanding: AI needs rich, accurate context (application domain, architecture, existing code, requirements) to generate truly effective tests. Integrating with existing documentation, code repositories, and domain experts is key.

- Computational Cost: Training and running advanced generative models, especially GANs and complex LLMs, can be resource-intensive, requiring significant computational power.

- Bias in Training Data: If the training data for the AI is biased, the generated tests or data may perpetuate those biases, leading to overlooked issues or skewed test coverage. Careful curation of training data is essential.

- Integration Complexity: Seamlessly integrating these AI tools into existing CI/CD pipelines, test automation frameworks, and development workflows requires careful planning and engineering effort.

- Maintaining Relevance: As applications evolve, the AI models need continuous updating and retraining to ensure the generated artifacts remain relevant and effective. Stale models will produce irrelevant tests.

- Explainability: Understanding why an AI generated a particular test case or data set can be challenging. This lack of explainability can impact trust and make debugging issues with generated tests more difficult.

The Future Outlook: A Paradigm Shift for QA

The trajectory for AI-powered generative testing is clear: it represents a fundamental shift in how we approach software quality.

- Demand for AI-Savvy QA Engineers: Demand is likely to grow for QA professionals who understand not just testing principles but also AI/ML concepts. These "AI-QA engineers" will be critical in designing, implementing, and managing generative testing solutions.

- New Tooling and Frameworks: Expect a proliferation of specialized AI-powered QA tools, both commercial and open-source, that abstract away the complexity of the underlying AI models, making them accessible to a wider audience.

- Hybrid Approaches Prevail: The most effective solutions will likely combine AI generation with human expertise and traditional test automation. AI will handle the heavy lifting of generation, while human testers will provide strategic oversight, exploratory testing, and validation.

- Ethical AI in Testing: Ensuring fairness, privacy, and accountability in AI-generated tests and data will become a critical area of research and practice. This includes mitigating bias and ensuring generated data respects privacy.

- Self-Healing Tests: The next frontier is not just generating tests but having AI adapt and fix tests automatically when application changes occur, dramatically reducing test maintenance overhead. Imagine tests that evolve alongside your code.

Conclusion

AI-Powered Generative Test Case and Data Synthesis is more than just a technological trend; it's a paradigm shift for software quality assurance. By intelligently automating the creation of test artifacts, it promises to make testing faster, more comprehensive, more cost-effective, and more resilient to the demands of modern software development. For AI practitioners and enthusiasts, this field offers an incredibly exciting and impactful area to explore, bridging the gap between cutting-edge AI research and the tangible benefits of delivering higher-quality software to the world. The era of intelligent, autonomous testing is not just on the horizon; it's rapidly becoming our present.