Synthetic Data: Unlocking AI's Potential by Solving the Data Paradox

Explore how Generative AI is revolutionizing machine learning by creating high-quality synthetic data, addressing critical challenges like scarcity and privacy, and pushing the boundaries of AI capabilities across industries.

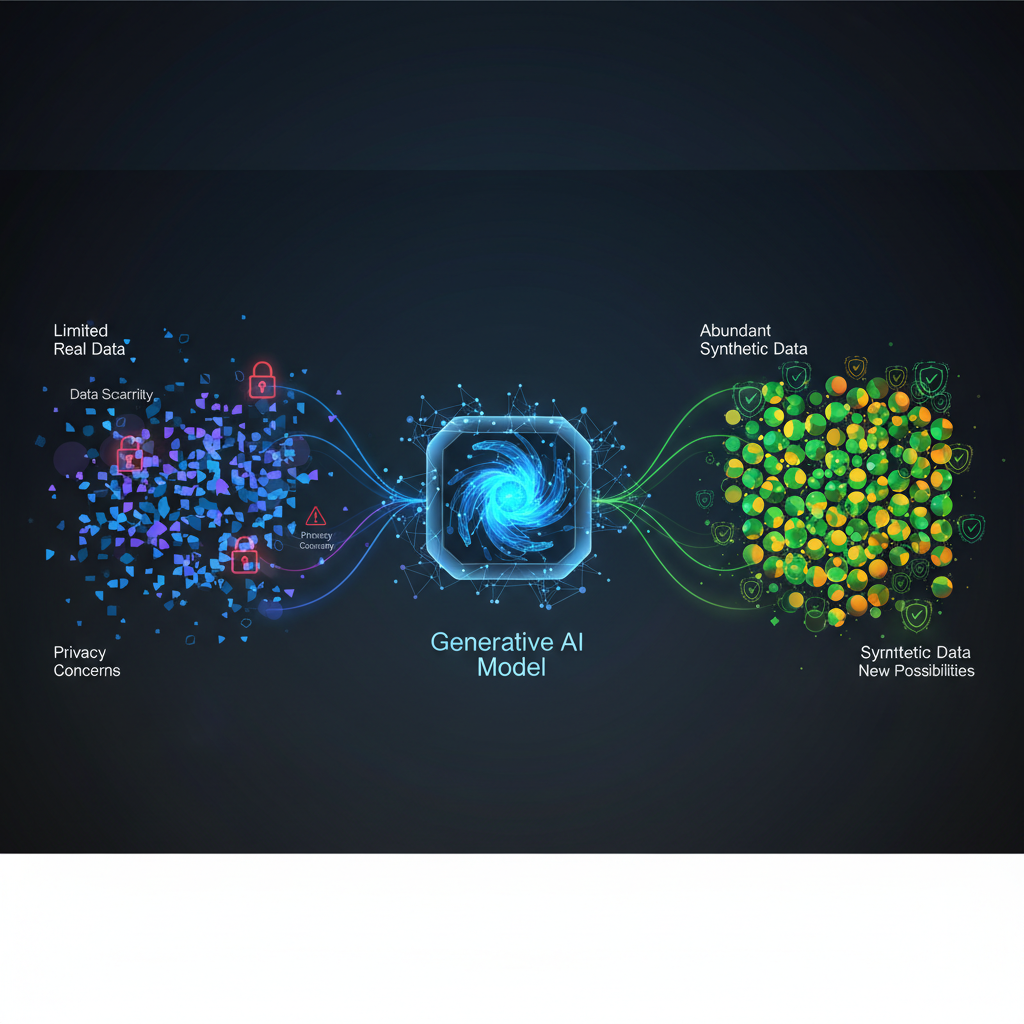

The lifeblood of artificial intelligence is data. Yet, for all its transformative power, data presents a persistent paradox: it's both abundant and scarce. We drown in oceans of information, but often lack the specific, high-quality, and ethically sourced datasets needed to train robust and unbiased machine learning models. This is where Generative AI steps in, offering a revolutionary solution: synthetic data. Far from being a mere imitation, synthetic data generated by sophisticated AI models is rapidly becoming an indispensable tool, unlocking new possibilities across industries and pushing the boundaries of what AI can achieve.

The Data Paradox: Why Synthetic Data is Essential

Before diving into the "how," let's understand the "why." The demand for synthetic data stems from several critical challenges faced by AI practitioners today:

- Data Scarcity: Imagine training a model to detect rare diseases or predict highly infrequent financial fraud events. Real-world examples are, by definition, scarce, making it incredibly difficult to gather enough data for effective model training. Similarly, in fields like autonomous driving, simulating every conceivable edge case (e.g., a deer crossing the road in dense fog at dusk) is practically impossible with real data alone.

- Data Privacy and Security: Regulations like GDPR, HIPAA, and CCPA have rightly placed stringent controls on the collection, storage, and sharing of sensitive personal information. This creates a significant hurdle for research and development, especially in healthcare, finance, and government. Synthetic data, devoid of direct links to real individuals, offers a privacy-preserving alternative that can mimic statistical properties without compromising individual privacy.

- Bias Mitigation: Real-world datasets often reflect and perpetuate societal biases present in the data collection process. If a dataset used to train a facial recognition system is predominantly composed of one demographic, it will perform poorly on others. Generative AI allows for the controlled creation of more balanced and diverse datasets, actively working to mitigate these inherent biases.

- Cost and Time Savings: Collecting, annotating, and validating real-world data is an expensive and time-consuming endeavor. Think of the millions of hours spent manually labeling images for computer vision tasks. Synthetic data can be generated on-demand, often at a fraction of the cost and time, accelerating the development lifecycle.

- Edge Case Generation: For critical applications like self-driving cars, models must be robust to extreme and unusual scenarios. Generating these "edge cases" in the real world is dangerous, costly, and often impractical. Synthetic environments and data allow for the creation of limitless variations of these challenging situations, improving model safety and reliability.

The maturation of advanced generative models, particularly Diffusion Models, has dramatically improved the fidelity and diversity of synthetic data, transforming it from a niche concept into a mainstream solution.

The Generative AI Toolkit: Algorithms Behind Synthetic Data

The magic of synthetic data generation lies in a diverse array of generative AI architectures, each with its unique strengths and mechanisms.

Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow in 2014, revolutionized generative modeling. They operate on an adversarial principle, pitting two neural networks against each other:

- Generator (G): This network takes random noise as input and tries to produce synthetic data that resembles the real data.

- Discriminator (D): This network acts as a critic, trying to distinguish between real data samples and the synthetic data generated by G.

During training, G continuously tries to fool D, while D continuously tries to get better at identifying fakes. This adversarial game drives both networks to improve, with G eventually learning to produce highly realistic data that D can no longer reliably distinguish from real data.

Strengths: GANs can produce astonishingly realistic images, audio, and tabular data. Challenges: They are notoriously difficult to train, often suffering from instability and "mode collapse" (where the generator produces only a limited variety of samples). Variants: StyleGAN (for high-fidelity image generation), Conditional GANs (cGANs, allowing generation based on specific attributes), WGAN (improving training stability), BigGAN (for large-scale, diverse image generation).

Variational Autoencoders (VAEs)

VAEs offer a different, more stable approach to generative modeling, rooted in probabilistic principles. They consist of two main parts:

- Encoder: Maps input data to a lower-dimensional "latent space," representing the data's core characteristics as a probability distribution (mean and variance).

- Decoder: Takes samples from this latent space and reconstructs the original data.

Unlike standard autoencoders, VAEs learn a distribution over the latent space, which allows for sampling new, diverse data points by drawing from this learned distribution.

Strengths: More stable to train than GANs, provide a structured and interpretable latent space, excellent for interpolation and smooth transitions between generated samples. Challenges: Generated samples can sometimes lack the sharp fidelity and crispness of GANs.

Diffusion Models (DMs)

Currently the state-of-the-art for image and audio generation, Diffusion Models have taken the AI world by storm. Their mechanism is elegantly simple yet incredibly powerful:

- Forward Diffusion (Noising) Process: Gradually adds Gaussian noise to an input image over several steps, slowly transforming it into pure noise.

- Reverse Diffusion (Denoising) Process: The model learns to reverse this process, starting from pure noise and iteratively denoising it to reconstruct a coherent image. This is done by predicting the noise added at each step.

Strengths: High fidelity, remarkable diversity, stable training, and excellent for conditional generation (e.g., text-to-image). Challenges: Can be computationally intensive for generation, though sampling speed is rapidly improving with techniques like Latent Diffusion. Variants: Denoising Diffusion Probabilistic Models (DDPMs), Latent Diffusion Models (LDMs, like Stable Diffusion, which operate in a compressed latent space for efficiency).

Autoregressive Models (e.g., Transformers)

While often associated with sequence prediction, autoregressive models, especially large language models (LLMs) based on the Transformer architecture, are powerful generators of sequential data. They predict the next element in a sequence based on all preceding elements.

Strengths: Unparalleled coherence and contextual relevance for sequential data like text, code, and time series. Applications: Generating synthetic customer reviews, medical notes, code snippets, or even tabular data by treating rows as sequences of tokens. Example: An LLM can be prompted to generate a product review:

Prompt: "Write a positive review for a new smartphone, focusing on camera quality and battery life."

Output: "I'm absolutely blown away by the new XYZ phone! The camera is simply stunning, capturing incredibly detailed and vibrant photos even in low light. And the battery life? I can easily go a full day, sometimes even two, without needing a charge. Highly recommend!"

Prompt: "Write a positive review for a new smartphone, focusing on camera quality and battery life."

Output: "I'm absolutely blown away by the new XYZ phone! The camera is simply stunning, capturing incredibly detailed and vibrant photos even in low light. And the battery life? I can easily go a full day, sometimes even two, without needing a charge. Highly recommend!"

Flow-based Models

Flow-based models learn a reversible transformation from a simple base distribution (e.g., a standard Gaussian) to the complex data distribution. This reversibility allows for both efficient sampling (generating new data) and exact likelihood estimation (measuring how well the model fits the data).

Strengths: Exact likelihood calculation, efficient inference, and stable training. Challenges: Can be complex to design and scale to very high-dimensional data.

Practical Applications and Emerging Trends

The theoretical underpinnings of these models translate into a myriad of practical applications across diverse domains.

Computer Vision

- Application: Training autonomous vehicles requires vast amounts of data covering every possible road condition, weather scenario, and unexpected event. Synthetic data can generate endless variations of these scenarios, including rare and dangerous edge cases that are difficult to capture in the real world. In medical imaging, synthetic tumor images can augment scarce real data for training diagnostic models, especially for rare cancers.

- Trend: "Synthetic-to-Real" Transfer Learning: Models trained entirely on synthetic data are increasingly demonstrating strong performance on real-world data, often with the help of domain adaptation techniques that bridge the gap between synthetic and real domains.

- Trend: Text-to-Image Generation for Data Augmentation: Models like Stable Diffusion or DALL-E are being used to generate highly specific images based on textual prompts. Need more images of "a red car driving on a snowy road at night with a deer crossing"? Just describe it, and the model can generate it, providing targeted augmentation for specific training needs.

Natural Language Processing (NLP)

- Application: Generating synthetic customer support dialogues, medical reports, legal documents, or social media posts helps train NLP models for tasks like sentiment analysis, classification, or question answering, especially for low-resource languages or sensitive topics where real data is scarce or restricted.

- Trend: LLM-powered Data Augmentation: Fine-tuned LLMs can paraphrase sentences, expand short text snippets, or create entire synthetic datasets for specific NLP tasks. For example, to improve a chatbot's ability to handle complex queries, an LLM can generate hundreds of variations of a single user intent.

- Trend: Synthetic Code Generation: Training models on synthetically generated code snippets can enhance tools for code completion, bug detection, or even program synthesis, accelerating software development.

Tabular Data

- Application: Creating synthetic financial transaction data for fraud detection, patient records for healthcare analytics, or customer demographics for marketing allows organizations to develop and test models without exposing real individuals' sensitive information.

- Trend: Privacy-Preserving Synthetic Data: Beyond just mimicking statistical properties, the focus is on generating data that guarantees differential privacy or other robust privacy metrics, ensuring re-identification is practically impossible.

- Trend: Conditional Tabular Generation: Generating synthetic rows based on specific conditions is powerful. For instance, "generate 100 synthetic customer profiles of individuals over 50 who have churned from a subscription service."

Time Series Data

- Application: Generating synthetic sensor readings for industrial IoT, stock market data for financial modeling, or physiological signals for medical research provides a controlled environment for testing algorithms without relying on volatile or sensitive real-world streams.

- Trend: Generative Models for Anomaly Detection: By training models on vast amounts of synthetic "normal" time series data, they can become highly adept at identifying subtle deviations and anomalies in real-world streams, which is crucial for predictive maintenance or fraud detection.

Robotics and Simulation

- Application: Generating diverse simulation environments and sensor data allows for the training of robotic agents in a safe, scalable, and cost-effective manner. This reduces the need for expensive and potentially dangerous real-world trials.

- Trend: Reinforcement Learning with Synthetic Environments: Training reinforcement learning agents entirely within AI-generated synthetic environments enables rapid iteration and exploration of vast state spaces, with the agents then deployed in the real world after achieving proficiency.

Challenges and Future Directions

Despite its immense promise, the field of synthetic data generation is not without its challenges, which also point to exciting avenues for future research:

- Fidelity and Diversity: The eternal quest is to ensure synthetic data is not only indistinguishable from real data but also captures the full spectrum of real-world variations, including rare events and complex correlations.

- Evaluation Metrics: Developing robust and standardized metrics to quantify the quality, utility, and privacy guarantees of synthetic data remains a critical area. Beyond visual inspection, how do we objectively measure if synthetic data is "good enough" for a specific task?

- Controllability: Improving the ability to precisely control the attributes and characteristics of generated data (e.g., specific age, lighting conditions, sentiment, specific disease markers) is crucial for targeted data augmentation and bias mitigation.

- Bias Transfer/Mitigation: Generative models can inadvertently learn and amplify biases present in their training data. Future work focuses on techniques to prevent this bias transfer or even actively use synthetic data generation to debias existing datasets.

- Computational Cost: Training and sampling from advanced generative models, especially Diffusion Models, can be resource-intensive. Research into more efficient architectures and sampling methods is ongoing.

- Ethical Concerns: The power of generative AI raises significant ethical questions. The potential for misuse, such as creating hyper-realistic "deepfakes" for misinformation or generating datasets that are technically anonymized but still re-identifiable, demands careful consideration and the development of robust ethical guidelines and safeguards.

The Future is Synthetic

Generative AI for synthetic data generation and augmentation stands at the forefront of AI innovation. It directly addresses one of the most persistent bottlenecks in machine learning: the availability of high-quality, diverse, and ethically sound data. By democratizing access to data, accelerating research and development, and offering powerful tools for privacy preservation and bias mitigation, synthetic data is poised to transform how we build, train, and deploy AI systems.

For AI practitioners, mastering these techniques offers a competitive edge, enabling the development of more robust, fair, and privacy-preserving models. For enthusiasts, it's a fascinating glimpse into the cutting edge of AI, where creativity meets rigorous engineering to solve real-world problems. As generative models continue to evolve, the distinction between "real" and "synthetic" data will blur, paving the way for an era where AI can learn and innovate with unprecedented freedom and ethical responsibility. The future of AI is, in many ways, synthetic.