Unlocking AI's Potential: The Rise of Generative AI for Synthetic Data

Explore how Generative AI, with models like Diffusion and GANs, is revolutionizing data acquisition. Discover its role in creating high-quality, privacy-preserving synthetic data to fuel AI innovation across industries, from healthcare to autonomous driving.

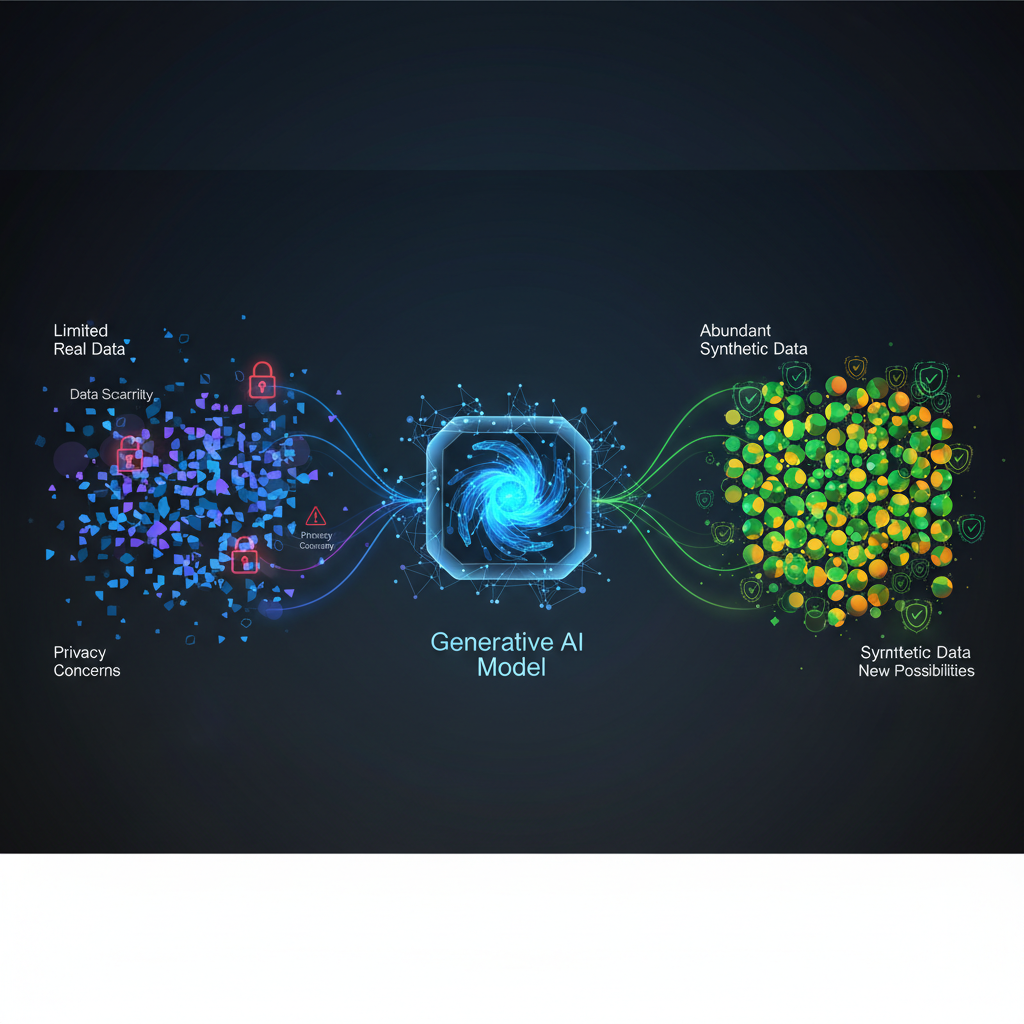

The lifeblood of artificial intelligence is data. Yet, the very fuel that powers our most sophisticated models — high-quality, labeled datasets — is often scarce, expensive to acquire, and fraught with privacy concerns. From the hyper-regulated realms of healthcare and finance to the competitive landscapes of e-commerce and autonomous driving, the quest for more, better, and safer data is relentless. Enter Generative AI for Synthetic Data Generation and Augmentation, a rapidly maturing field that promises to unlock new possibilities by creating artificial data that mimics the statistical properties and patterns of real-world information, without compromising sensitive details.

This isn't just a theoretical concept; it's becoming an indispensable tool for AI practitioners and enthusiasts alike. With the remarkable advancements in generative models like Diffusion Models and sophisticated GANs, we're moving beyond mere data augmentation to a future where synthetic data can accelerate development, enhance model robustness, and navigate complex regulatory landscapes.

The Data Dilemma: Why Synthetic Data is No Longer a Niche

The need for synthetic data stems from several fundamental challenges in the AI lifecycle:

- Data Scarcity & Cost: Acquiring and meticulously labeling real-world data is often a monumental undertaking, requiring significant time, resources, and human effort. For niche domains or rare events, real data might simply not exist in sufficient quantities.

- Privacy & Regulatory Hurdles: With increasingly stringent data privacy regulations like GDPR and CCPA, using sensitive real-world data for training, testing, or sharing becomes a legal and ethical minefield. Synthetic data, when generated responsibly, can ease these hurdles by offering a privacy-preserving alternative.

- Bias Mitigation: Real-world datasets often reflect and perpetuate societal biases present in the data collection process. Synthetic data offers a controlled environment to create more balanced and diverse datasets, helping to train fairer and more equitable ML models.

- Edge Cases & Rare Events: ML models frequently struggle with rare events or "edge cases" due to insufficient examples in their training data. Generative models can be specifically engineered to synthesize data points for these critical, yet infrequent, scenarios, which can substantially improve model robustness and reliability.

- Rapid Prototyping & Development: The ability to generate synthetic data early in a project allows developers to begin training and testing models even before real-world data collection is complete, significantly accelerating development cycles and enabling faster iteration.

- Model Explainability & Debugging: Synthetic data can be designed to isolate specific features or conditions, providing a controlled environment to probe model behavior, understand its decision-making process, and identify potential failure points.

The technological maturity of modern Generative AI models has brought this solution to the forefront. We're no longer talking about simple data perturbation; we're talking about generating incredibly realistic, diverse, and statistically representative synthetic datasets across various modalities.

The Generative AI Powerhouse: Models Driving the Revolution

The magic behind high-quality synthetic data generation lies in the sophistication of modern generative AI models. These algorithms learn the underlying probability distribution of a dataset and then sample from that learned distribution to create new, never-before-seen data points.

1. Generative Adversarial Networks (GANs)

GANs, introduced by Ian Goodfellow and colleagues in 2014, revolutionized generative modeling. They consist of two neural networks, a Generator and a Discriminator, locked in a zero-sum game:

- The Generator tries to create synthetic data that is indistinguishable from real data.

- The Discriminator tries to distinguish between real data and the Generator's synthetic data.

This adversarial process pushes both networks to improve, resulting in increasingly realistic synthetic outputs.

- How they work: The Generator takes random noise as input and transforms it into synthetic data. The Discriminator takes both real and synthetic data and outputs a probability that the input is real. The Generator's loss function is based on the Discriminator's ability to correctly identify its fakes, while the Discriminator's loss function is based on its ability to correctly classify both real and fake data.

- Variants for Synthetic Data:

  - Conditional GANs (cGANs): Allow for targeted generation by conditioning the output on specific labels or attributes (e.g., generating a "cat" image or a "fraudulent transaction").

  - StyleGANs: Known for generating highly realistic images with controllable attributes, useful for visual synthetic data.

  - CTGAN (Conditional Tabular GAN): Specifically designed for tabular data, addressing challenges like mixed data types and imbalanced distributions.
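
The two loss functions described above can be sketched in a few lines. This is a minimal NumPy illustration rather than a training loop: the function names and the example probabilities are invented for this sketch, and the generator uses the common non-saturating form of the loss, which is only one of several variants in use.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: real samples are labeled 1, generated samples 0."""
    d_real = np.clip(d_real, eps, 1 - eps)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return float(-(np.log(d_real).mean() + np.log(1 - d_fake).mean()))

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating loss: low when the Discriminator scores fakes as real."""
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return float(-np.log(d_fake).mean())

# Hypothetical Discriminator outputs (probability that the input is real).
d_real = np.array([0.90, 0.80, 0.95])
d_fake_early = np.array([0.10, 0.20, 0.05])  # early training: fakes are easy to spot
d_fake_late = np.array([0.50, 0.45, 0.55])   # later: fakes are harder to tell apart

g_early, g_late = generator_loss(d_fake_early), generator_loss(d_fake_late)
d_early = discriminator_loss(d_real, d_fake_early)
d_late = discriminator_loss(d_real, d_fake_late)
print(f"generator loss: early={g_early:.3f}, late={g_late:.3f}")
print(f"discriminator loss: early={d_early:.3f}, late={d_late:.3f}")
```

As the Generator improves, its loss falls while the Discriminator's loss rises, which is the zero-sum dynamic in miniature.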

2. Variational Autoencoders (VAEs)

VAEs are another powerful class of generative models that approach data generation from a different angle. They are probabilistic graphical models that learn a compressed, latent representation of the input data.

- How they work: An Encoder network maps each input to a distribution over a latent space, typically parameterized by a mean and variance. A latent vector is sampled from this distribution, and a Decoder network reconstructs the original input from it. The VAE is trained to minimize the reconstruction error while a regularization term (the KL divergence) keeps the latent distribution close to a prior (e.g., a standard Gaussian). New data can then be generated by sampling from the prior and decoding, which helps in producing diverse samples.

- Advantages for Synthetic Data: VAEs are often more stable to train than GANs and provide a structured latent space, which can be useful for interpolation and understanding data variations. Conditional VAEs (CVAEs) extend this to controlled generation.
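
The VAE objective can be made concrete with a short NumPy sketch. It assumes a diagonal Gaussian encoder, a standard normal prior, and a squared-error reconstruction term; the function names are illustrative, and the encoder and decoder networks themselves are left out.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps, with eps drawn from a standard normal."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Per-sample KL divergence from N(mu, diag(exp(log_var))) to N(0, I)."""
    return -0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var), axis=-1)

def vae_loss(x, x_recon, mu, log_var):
    """Squared-error reconstruction term plus KL regularizer, averaged over the batch."""
    recon = np.sum((x - x_recon) ** 2, axis=-1)
    return float(np.mean(recon + kl_to_standard_normal(mu, log_var)))

rng = np.random.default_rng(0)
mu = np.array([[0.0, 0.0], [1.0, -1.0]])       # hypothetical encoder means
log_var = np.array([[0.0, 0.0], [-1.0, 0.5]])  # hypothetical encoder log-variances
z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
print("latent sample shape:", z.shape)
print("KL per sample:", kl)
```

The KL term is zero only when the encoder output already matches the prior, which is what keeps the latent space well-behaved enough to sample from.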

3. Diffusion Models

Currently, Diffusion Models are at the cutting edge of generative AI, particularly for image and audio synthesis, and are rapidly gaining traction for tabular and time-series data.

- How they work: Diffusion models operate in two phases:

  - Forward Diffusion (Noising): Gradually adds Gaussian noise to a data sample (e.g., an image) over many small steps until it becomes indistinguishable from pure noise.

  - Reverse Diffusion (Denoising): Learns to reverse this process, starting from pure noise and iteratively removing noise to produce a clean sample. This denoising process is typically learned by a neural network (often a U-Net for images).

- Why they're powerful: They excel at capturing complex data distributions, often producing higher quality and more diverse samples than GANs. Their iterative denoising process allows for fine-grained control over the generation process.

- Impact on Synthetic Data: Models like Denoising Diffusion Probabilistic Models (DDPMs) and Latent Diffusion Models (used in Stable Diffusion) are showing immense promise for creating hyper-realistic synthetic images, and their application is expanding to tabular data (e.g., TabDDPM) and time series, with reported results that are competitive with GAN- and VAE-based approaches.
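
The forward (noising) phase has a convenient closed form in DDPM-style models: a sample at any step t can be drawn directly from the clean sample without simulating every intermediate step. The sketch below assumes a linear noise schedule with illustrative values; the function names are made up for this example, and the reverse (denoising) network is omitted.

```python
import numpy as np

def make_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear noise schedule (assumed values)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t given x_0: sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

rng = np.random.default_rng(0)
alpha_bar = make_alpha_bar()
x0 = rng.normal(size=(4, 3))  # stand-in for a batch of clean data samples

x_early, _ = q_sample(x0, 0, alpha_bar, rng)            # almost unchanged
x_late, noise_late = q_sample(x0, 999, alpha_bar, rng)  # almost pure noise
print("signal weight at first step:", np.sqrt(alpha_bar[0]))
print("signal weight at last step:", np.sqrt(alpha_bar[-1]))
```

In a typical training setup, the denoising network receives `x_t` and `t` and is trained to predict the `noise` that was added, usually with a mean-squared-error loss.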

4. Autoregressive Models & Transformers

While often associated with sequential data like text, autoregressive models and the Transformer architecture are also crucial for synthetic data generation.

- Autoregressive Models: Generate data one element at a time, predicting the next element based on all previously generated elements. This is fundamental for text generation (e.g., GPT models) and time-series data.

- Transformers: With their attention mechanisms, Transformers can model long-range dependencies, making them highly effective for complex sequential and even multi-modal data generation when integrated with other generative architectures.
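
The "one element at a time" idea can be shown with a deliberately tiny model. The sketch below fits a bigram model, which conditions only on the previous element rather than on the full history that GPT-style models use, and then samples synthetic event sequences from it. The session data and function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

def fit_bigram(sequences):
    """Count how often each token follows another, with start and end markers."""
    counts = defaultdict(Counter)
    for seq in sequences:
        tokens = ["<s>", *seq, "</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def sample_sequence(counts, rng, max_len=20):
    """Generate one sequence by repeatedly sampling the next token given the last one."""
    out, prev = [], "<s>"
    for _ in range(max_len):
        choices, weights = zip(*counts[prev].items())
        nxt = rng.choices(choices, weights=weights, k=1)[0]
        if nxt == "</s>":
            break
        out.append(nxt)
        prev = nxt
    return out

# Hypothetical "real" user sessions to learn from.
sessions = [
    ["login", "browse", "purchase", "logout"],
    ["login", "browse", "browse", "logout"],
    ["login", "search", "browse", "purchase", "logout"],
]
counts = fit_bigram(sessions)
rng = random.Random(0)
synthetic = [sample_sequence(counts, rng) for _ in range(5)]
for seq in synthetic:
    print(seq)
```

A Transformer replaces the lookup table with a learned network and the single previous token with the whole preceding context, but the sampling loop has the same shape.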

Recent Developments & Emerging Trends

The field is evolving at an incredible pace, with several key trends shaping its future:

- Conditional Generation Mastery: The ability to generate synthetic data conditioned on specific attributes is becoming incredibly sophisticated. Imagine generating "a fraudulent transaction for a 45-year-old male from New York with a specific income bracket" or "an image of a car crash in foggy conditions at night." This precision is crucial for targeted data augmentation and testing.

- Privacy-Preserving Synthetic Data (PPSD): This is a hot research area. Techniques like Differential Privacy are being integrated directly into generative models. Differentially private training adds calibrated noise during the training process (for example, by clipping each example's gradient and adding Gaussian noise, as in DP-SGD) so that the influence of any single individual's record on the model, and therefore on the synthetic output, is provably bounded.

- Multi-Modal Synthetic Data: The next frontier involves generating synthetic data that spans multiple modalities simultaneously. For example, creating an image of a scene and a corresponding textual description, or a video and associated sensor readings. This is vital for complex AI systems like autonomous vehicles or robotics.

- Synthetic Data for Foundation Models: As large foundation models become ubiquitous, synthetic data is being explored for pre-training or fine-tuning them, especially where real-world data is limited, proprietary, or too costly to acquire at scale.

- Benchmarking & Evaluation: Developing robust and standardized metrics to evaluate the utility, fidelity, and privacy of synthetic data is paramount. This includes comparing downstream model performance, statistical similarity metrics (e.g., Wasserstein distance, Maximum Mean Discrepancy), and formal privacy guarantees.
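
The two statistical similarity metrics named above are straightforward to compute on small samples. The sketch below is a NumPy-only illustration with invented function names: the Wasserstein version handles only one-dimensional, equal-sized samples, and the MMD uses a biased estimator with an RBF kernel whose bandwidth (`gamma`) is an arbitrary choice here.

```python
import numpy as np

def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-sized 1-D samples."""
    a, b = np.sort(a), np.sort(b)
    if a.shape != b.shape:
        raise ValueError("this sketch requires equal-sized samples")
    return float(np.mean(np.abs(a - b)))

def mmd_rbf(x, y, gamma=1.0):
    """Biased estimate of squared Maximum Mean Discrepancy with an RBF kernel."""
    def kernel(p, q):
        sq_dists = np.sum((p[:, None, :] - q[None, :, :]) ** 2, axis=-1)
        return np.exp(-gamma * sq_dists)
    return float(kernel(x, x).mean() + kernel(y, y).mean() - 2 * kernel(x, y).mean())

rng = np.random.default_rng(0)
real = rng.normal(0.0, 1.0, size=500)
good_synth = rng.normal(0.0, 1.0, size=500)  # same distribution as the real data
bad_synth = rng.normal(2.0, 1.0, size=500)   # shifted distribution

w_good, w_bad = wasserstein_1d(real, good_synth), wasserstein_1d(real, bad_synth)
mmd_good = mmd_rbf(real[:, None], good_synth[:, None])
mmd_bad = mmd_rbf(real[:, None], bad_synth[:, None])
print(f"Wasserstein: good={w_good:.3f}, bad={w_bad:.3f}")
print(f"MMD^2:       good={mmd_good:.4f}, bad={mmd_bad:.4f}")
```

Lower values indicate closer distributions. Neither metric says anything about privacy or downstream usefulness, so they complement rather than replace the other evaluation approaches.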

Practical Applications Across Industries

The implications of high-quality synthetic data are vast and transformative, impacting nearly every sector leveraging AI:

- Healthcare:

  - Privacy-Preserving Research: Generating synthetic patient records for drug discovery, clinical trial design, and medical imaging analysis without compromising patient privacy. This allows researchers to share and collaborate on sensitive data without legal or ethical breaches.

  - Rare Disease Modeling: Creating synthetic data for rare diseases where real patient data is extremely limited, enabling the development of diagnostic and treatment models.

- Finance:

  - Fraud Detection: Synthesizing rare fraudulent transaction patterns to train more robust fraud detection models, which often suffer from highly imbalanced real-world datasets.

  - Credit Scoring & Risk Assessment: Generating diverse financial scenarios to test and improve credit scoring models and risk assessment algorithms.

  - Algorithmic Trading: Simulating market conditions and trading behaviors for backtesting and developing new strategies.

- Autonomous Vehicles:

  - Diverse Driving Scenarios: Generating synthetic images, lidar, and radar data for various weather conditions (rain, snow, fog), lighting (day, night), and unusual events (pedestrians in unexpected places, rare traffic incidents) to train robust perception and control systems. This is safer and more scalable than collecting all such data in the real world.

  - Sensor Simulation: Creating synthetic sensor data to train models in virtual environments before deployment in physical vehicles.

- E-commerce & Retail:

  - Customer Behavior Modeling: Generating synthetic customer interaction data (browsing history, purchase patterns) for recommendation systems, personalized marketing, and inventory management, especially for new products or niche segments.

  - A/B Testing: Simulating different user interfaces or product placements with synthetic user data to predict outcomes before live deployment.

- Cybersecurity:

  - Threat Intelligence: Creating synthetic network traffic or attack patterns (e.g., DDoS, malware behavior) to train intrusion detection systems and test the resilience of security infrastructure without risking real systems.

- Robotics:

  - Sensor Data Generation: Synthesizing diverse sensor data (e.g., camera, lidar, tactile, proprioceptive) for training robot manipulation, navigation, and interaction systems in various environments and under different conditions. This is crucial for sim-to-real transfer.

- Data Augmentation:

  - Expanding Small Datasets: For tasks like image classification, object detection, or natural language processing, generative models can expand small datasets, leading to better generalization, reduced overfitting, and improved model performance.

  - Fairness & Bias Mitigation: Creating synthetic datasets with balanced representation across sensitive attributes (e.g., gender, race, age) to train fairer and less biased models, addressing historical biases in real-world data.

- Testing & Debugging:

  - Edge Case Testing: Generating specific synthetic data to rigorously test model behavior under extreme or unusual conditions, helping to uncover vulnerabilities and improve reliability.

  - Isolation of Bugs: Creating controlled synthetic scenarios to isolate and debug specific issues in complex ML systems.
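
Several of the applications above, such as fraud detection and expanding small datasets, come down to the same workflow: fit a generative model to the under-represented class, then sample from it until the dataset is balanced. The sketch below shows that workflow with a multivariate Gaussian as a deliberately simple stand-in for a real generator such as CTGAN or a diffusion model. The data, the binary-label setup, and the function name are all hypothetical.

```python
import numpy as np

def oversample_minority(X, y, minority_label, rng):
    """Fit a Gaussian to the minority class and sample until both classes are equal in size."""
    X_min = X[y == minority_label]
    n_needed = int(np.sum(y != minority_label) - len(X_min))
    if n_needed <= 0:
        return X, y
    mean = X_min.mean(axis=0)
    # A small ridge keeps the covariance matrix well-conditioned.
    cov = np.cov(X_min, rowvar=False) + 1e-6 * np.eye(X.shape[1])
    X_syn = rng.multivariate_normal(mean, cov, size=n_needed)
    y_syn = np.full(n_needed, minority_label)
    return np.vstack([X, X_syn]), np.concatenate([y, y_syn])

rng = np.random.default_rng(0)
X_legit = rng.normal(0.0, 1.0, size=(950, 2))  # hypothetical legitimate transactions
X_fraud = rng.normal(3.0, 0.5, size=(50, 2))   # hypothetical fraudulent transactions
X = np.vstack([X_legit, X_fraud])
y = np.concatenate([np.zeros(950, dtype=int), np.ones(50, dtype=int)])

X_bal, y_bal = oversample_minority(X, y, minority_label=1, rng=rng)
print("class counts before:", np.bincount(y))
print("class counts after: ", np.bincount(y_bal))
```

A Gaussian captures only the mean and covariance of the minority class, so it is best read as a placeholder for where a trained generative model would slot in.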

Challenges and Considerations

While the promise of synthetic data is immense, its successful implementation comes with its own set of challenges:

- Fidelity vs. Diversity: A critical balance must be struck. Synthetic data needs to be statistically similar to real data (high fidelity) to be useful, but also diverse enough to cover the full data distribution and prevent models from overfitting to the synthetic patterns.

- Privacy Guarantees: Ensuring that synthetic data truly protects the privacy of original individuals, especially when dealing with sensitive information, is paramount. This requires rigorous validation and often the integration of formal privacy techniques like Differential Privacy. A poorly generated synthetic dataset could inadvertently leak sensitive information.

- Evaluation Metrics: Developing robust, standardized, and universally accepted methods to evaluate the quality, utility, and privacy of synthetic data remains an active research area. Metrics must go beyond visual inspection to quantify statistical similarity, downstream model performance, and privacy leakage.

- Computational Cost: Training complex generative models, especially Diffusion Models or large GANs, can be computationally intensive, requiring significant GPU resources and time.

- "Garbage In, Garbage Out": Generative models learn from the data they are trained on. If the original data is biased, incomplete, or of poor quality, the synthetic data will likely inherit and even amplify those issues unless specific bias mitigation strategies are employed during the generation process.

- Regulatory Acceptance: While promising, the regulatory landscape for synthetic data is still evolving. Gaining full acceptance from regulatory bodies, particularly in highly sensitive domains, will require robust validation and clear standards.
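
To make the Differential Privacy technique mentioned under Privacy Guarantees more concrete, the sketch below shows the core gradient step commonly used in differentially private training (DP-SGD): clip each example's gradient to a fixed norm, sum, add Gaussian noise scaled to that norm, and average. The function name and parameter values are illustrative, and the sketch does not compute the resulting privacy budget (epsilon), which requires a separate accounting step.

```python
import numpy as np

def dp_average_gradient(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip each example's gradient to clip_norm, add Gaussian noise, and average."""
    norms = np.linalg.norm(per_example_grads, axis=1, keepdims=True)
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_example_grads * scale
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=clipped.shape[1])
    return (clipped.sum(axis=0) + noise) / len(per_example_grads)

rng = np.random.default_rng(0)
grads = rng.normal(size=(8, 4))  # hypothetical per-example gradients
grads[0] *= 100.0                # one record with an outsized gradient

plain = grads.mean(axis=0)
clipped_only = dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=0.0, rng=rng)
private = dp_average_gradient(grads, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print("norm of plain average:   ", np.linalg.norm(plain))
print("norm of clipped average: ", np.linalg.norm(clipped_only))
print("noisy private gradient:  ", private)
```

Clipping bounds how much any single record can move the model, and the noise masks what remains of that contribution; both come at some cost to the fidelity of the generated data.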

Conclusion

Generative AI for Synthetic Data Generation and Augmentation stands at the nexus of several critical ML challenges: data scarcity, privacy, and bias. It offers a powerful paradigm shift, moving beyond simply collecting more data to intelligently creating it. With the rapid advancements in models like Diffusion Models, GANs, and VAEs, we are witnessing a transition from theoretical concept to a practical, indispensable tool.

For AI practitioners and enthusiasts, understanding and leveraging synthetic data generation is no longer optional; it's becoming a core competency. It empowers us to build more robust, ethical, and performant ML systems in a world increasingly constrained by data access and privacy concerns. As the technology matures and evaluation methodologies become more standardized, synthetic data will undoubtedly play a pivotal role in accelerating AI innovation and democratizing access to powerful machine learning capabilities across industries. The future of AI is not just about big data; it's about smart data, and synthetic data is leading the charge.