Multimodal Large Language Models: Bridging AI's Sensory Gap for Grounded Intelligence

Discover how Multimodal Large Language Models (MLLMs) are revolutionizing AI by integrating diverse sensory inputs like text, images, and audio. This paradigm shift enables AI to understand and reason about the world more comprehensively, moving towards truly human-like intelligence.

The world around us is inherently multimodal. We perceive, process, and interact with information through a rich tapestry of sights, sounds, and language. For decades, artificial intelligence systems largely operated in silos, specializing in one modality – be it text, images, or audio. While these specialized systems achieved remarkable feats, they often lacked the holistic understanding that comes from integrating diverse sensory inputs. This limitation has been a significant hurdle in creating truly intelligent, human-like AI.

Enter Multimodal Large Language Models (MLLMs). These groundbreaking AI architectures are rapidly bridging the gap between abstract linguistic concepts and concrete sensory experiences, heralding a new era of "grounded AI." MLLMs are not just a minor iteration; they represent a paradigm shift, enabling AI to see, understand, and reason about the world in a more comprehensive and intuitive manner.

The Rise of Multimodal Intelligence

The past 12-18 months have witnessed an explosion in the capabilities and accessibility of MLLMs. What started with impressive image captioning models has evolved into sophisticated systems capable of complex visual reasoning, instruction following, and generating coherent multimodal outputs. Proprietary models like OpenAI's GPT-4V and Google's Gemini, along with openly released models such as LLaVA, Fuyu, and InstructBLIP, are at the forefront of this revolution, demonstrating strong abilities to process and reason across text, images, and sometimes even audio and video.

This rapid advancement is driven by several factors:

- Larger Models and Datasets: The success of large language models (LLMs) has shown that scale matters. MLLMs build on this insight, pairing large pre-trained language backbones with massive multimodal datasets.

- Architectural Innovations: New components, such as projection layers and query-based resamplers, connect pre-trained vision encoders to language models so that different modalities can be integrated effectively.

- Improved Training Strategies: Sophisticated pre-training and fine-tuning techniques are key to unlocking emergent capabilities.

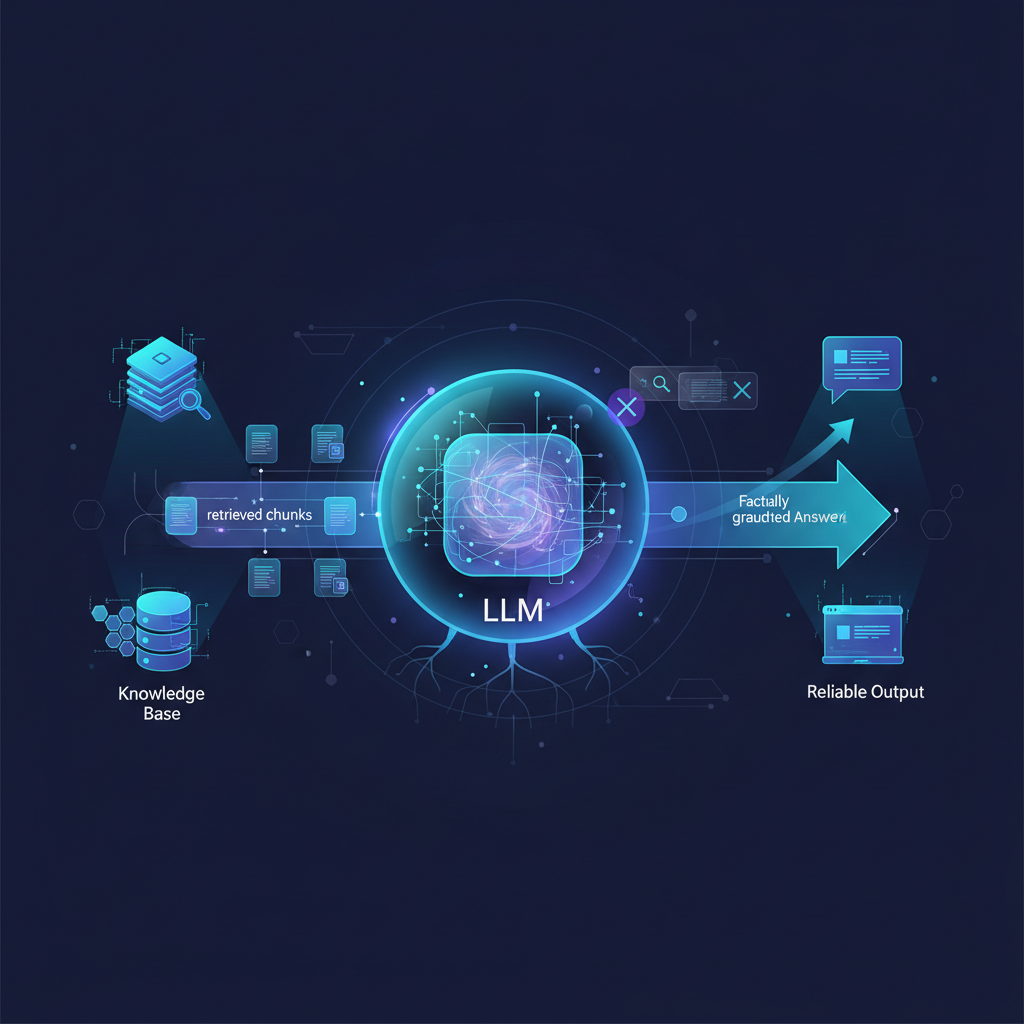

The implications are profound. MLLMs are not merely concatenating outputs from separate vision and language models; they are learning deep, shared representations that allow them to understand the interplay between modalities, leading to more robust and context-aware AI.

Unpacking the Technical Core of MLLMs

At their heart, MLLMs are complex systems designed to integrate information from disparate sources. This involves several technical challenges and innovative solutions.

Architectural Innovations: The Fusion of Modalities

The primary architectural challenge is presenting visual features to a language model in a form it can consume. Language models, typically Transformer-based, operate on sequences of embeddings looked up from discrete tokens. Vision models, on the other hand, produce dense feature vectors or sequences of patch features (often called visual tokens). MLLMs bridge the two with specialized components:

- Vision Encoder: This component processes visual input (e.g., an image or video frame) and extracts meaningful features. Common choices include:

  - Vision Transformers (ViT): These models divide an image into fixed-size patches, linearly embed each patch, add positional embeddings, and feed the resulting sequence into a standard Transformer encoder.

  - CLIP (Contrastive Language-Image Pre-training) Vision Encoder: CLIP learns to associate images with their textual descriptions, producing highly semantic visual embeddings that are well-suited for multimodal tasks.

  - ResNet/EfficientNet backbones: Often used in conjunction with attention mechanisms to extract features.

- Projection Layer / Modality Aligner: This is the crucial bridge. The vision encoder's output (a sequence of visual tokens or a global embedding) needs to be transformed into a format that the language model can understand and integrate seamlessly with its textual tokens. This often involves:

  - Linear Projection: A simple linear layer can map visual features into the language model's embedding space, as in the original LLaVA.

  - Q-Former (Querying Transformer): Used in models like BLIP-2, this lightweight Transformer acts as an interface. A small set of learnable query vectors attends to the frozen image encoder's output, and the results are projected into the LLM's input space. This allows the LLM to focus on relevant visual information without needing to process the full, high-dimensional visual output.

  - Perceiver Resampler: Used in Flamingo and similar in spirit to the Q-Former, it downsamples high-dimensional visual features into a fixed-length sequence of "visual tokens". Flamingo feeds these tokens to the LLM through cross-attention layers; other designs concatenate resampled tokens with the text tokens.

- Large Language Model (LLM) Backbone: This is typically a powerful, pre-trained Transformer decoder (like Llama, GPT, or PaLM variants) that handles the sequential processing of tokens, generates text, and performs reasoning. The visual tokens, after projection, are interleaved or prepended to the textual input sequence, allowing the LLM to attend to both modalities simultaneously.
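As a rough illustration of how the three components above fit together, the sketch below uses NumPy with random weights standing in for trained ones. The sizes (`PATCH`, `D_VISION`, `D_LLM`), the 64x64 input, and the nine text-token embeddings are assumptions chosen for illustration. A real vision encoder would also run Transformer layers over the patch embeddings, which are omitted here.

```python
import numpy as np

rng = np.random.default_rng(0)


def patchify(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    grid = image.reshape(h // patch, patch, w // patch, patch, c)
    grid = grid.transpose(0, 2, 1, 3, 4)  # (rows, cols, patch, patch, C)
    return grid.reshape(-1, patch * patch * c)


# Hypothetical sizes, kept small so the sketch runs instantly.
PATCH, D_VISION, D_LLM = 16, 64, 128

image = rng.random((64, 64, 3))
patches = patchify(image, PATCH)  # 16 patches of 16*16*3 = 768 values

# Vision encoder stand-in: ViT-style patch embedding plus positional embeddings.
w_embed = rng.normal(0, 0.02, (patches.shape[1], D_VISION))
positions = rng.normal(0, 0.02, (patches.shape[0], D_VISION))
vision_features = patches @ w_embed + positions

# Projection layer: one linear map into the LLM's embedding space.
w_proj = rng.normal(0, 0.02, (D_VISION, D_LLM))
visual_tokens = vision_features @ w_proj

# Text embeddings would normally come from the LLM's embedding table.
text_embeddings = rng.normal(0, 0.02, (9, D_LLM))

# Prepend the visual tokens so the LLM can attend to both modalities.
llm_input = np.concatenate([visual_tokens, text_embeddings], axis=0)
print(llm_input.shape)  # (25, 128)
```

The only learned bridge in this variant is `w_proj`, which is why a linear projection is the cheapest aligner to train.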

Example Architecture Flow: Imagine an MLLM processing an image and a question: "What is the person in the red shirt doing?"

- Image Input: The image is fed into a ViT encoder, which breaks it into patches and extracts features.

- Visual Tokenization: These visual features are then processed by a projection layer (e.g., a Q-Former) to generate a sequence of "visual tokens" that are semantically aligned with the LLM's embedding space.

- Text Input: The question "What is the person in the red shirt doing?" is tokenized into textual tokens.

- Concatenation & LLM Processing: The visual tokens and textual tokens are concatenated into a single input sequence. The LLM then processes this combined sequence, attending to both visual and textual information to generate an answer. Conceptually, the model must locate the "person in the red shirt" visually, infer their action from visual cues, and generate a textual description of that action, although these are not explicit, separate stages inside the network.
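The visual tokenization step in this flow can be sketched with a single cross-attention operation in which a fixed set of learnable queries reads from the patch features. This is a deliberate simplification in the spirit of a Q-Former or Perceiver Resampler, not a reproduction of either: the real modules stack several Transformer layers. All sizes and the random weights below are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attend(queries, features, w_q, w_k, w_v):
    """Single-head cross-attention: queries read from image features."""
    q, k, v = queries @ w_q, features @ w_k, features @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)  # each query's weights sum to 1
    return weights @ v, weights


# Hypothetical sizes: 196 patch features compressed to 32 visual tokens.
N_PATCHES, N_QUERIES, D = 196, 32, 64
patch_features = rng.normal(size=(N_PATCHES, D))
learned_queries = rng.normal(size=(N_QUERIES, D))  # trained in a real model
w_q, w_k, w_v = (rng.normal(0, D ** -0.5, (D, D)) for _ in range(3))

visual_tokens, attn = cross_attend(learned_queries, patch_features, w_q, w_k, w_v)

# Stand-in embeddings for the tokenized question (9 tokens is an assumption).
text_tokens = rng.normal(size=(9, D))
sequence = np.concatenate([visual_tokens, text_tokens], axis=0)
print(visual_tokens.shape, sequence.shape)  # (32, 64) (41, 64)
```

However many patches the encoder produces, the LLM sees a fixed `N_QUERIES` visual tokens, which keeps the combined sequence short.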

Pre-training Strategies: Learning from the Multimodal Web

Training MLLMs requires vast datasets that contain interleaved text and images (and sometimes audio/video). The web is a rich source for this, with webpages, social media posts, and research papers often combining these modalities. Key pre-training objectives include:

- Contrastive Learning: Models like CLIP learn by maximizing the similarity between correct image-text pairs and minimizing it for incorrect pairs. This helps the model learn robust cross-modal representations.

- Image-Text Matching (ITM): The model predicts whether an image and a text segment are a positive pair (semantically related) or a negative pair.

- Image-Text Generation (ITG): Given an image, the model generates a descriptive caption. The reverse direction, generating an image from a caption, is more typical of text-to-image models, though the underlying principles of cross-modal understanding are shared.

- Masked Language Modeling (MLM) with Visual Context: Similar to BERT, but the model predicts masked text tokens while also attending to visual inputs.

- Next-Token Prediction (NTP) with Interleaved Modalities: The LLM predicts the next token in a sequence that can contain both text and visual tokens. This is particularly powerful for learning to generate multimodal outputs and for instruction following.
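To make the contrastive objective in the list above concrete, here is a minimal NumPy sketch of a symmetric, CLIP-style loss over a batch of paired embeddings. The fixed `temperature` of 0.07 and the toy random embeddings are assumptions; in practice the embeddings come from trained encoders and the temperature is often learned.

```python
import numpy as np


def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over cosine similarities of a paired batch."""
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = image_emb @ text_emb.T / temperature  # (batch, batch)
    idx = np.arange(len(logits))                   # matching pairs lie on the diagonal
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()  # image -> text
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()  # text -> image
    return (loss_i2t + loss_t2i) / 2


rng = np.random.default_rng(0)
text = rng.normal(size=(4, 16))

# Well-aligned pairs: each image embedding is close to its own caption.
aligned = contrastive_loss(text + 0.01 * rng.normal(size=(4, 16)), text)
# Misaligned pairs: every image is matched with the wrong caption.
shuffled = contrastive_loss(text[::-1], text)
print(f"aligned={aligned:.3f} shuffled={shuffled:.3f}")
```

The loss is low when each image is most similar to its own caption and high when the pairing is wrong, which is the signal that pulls matching pairs together.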

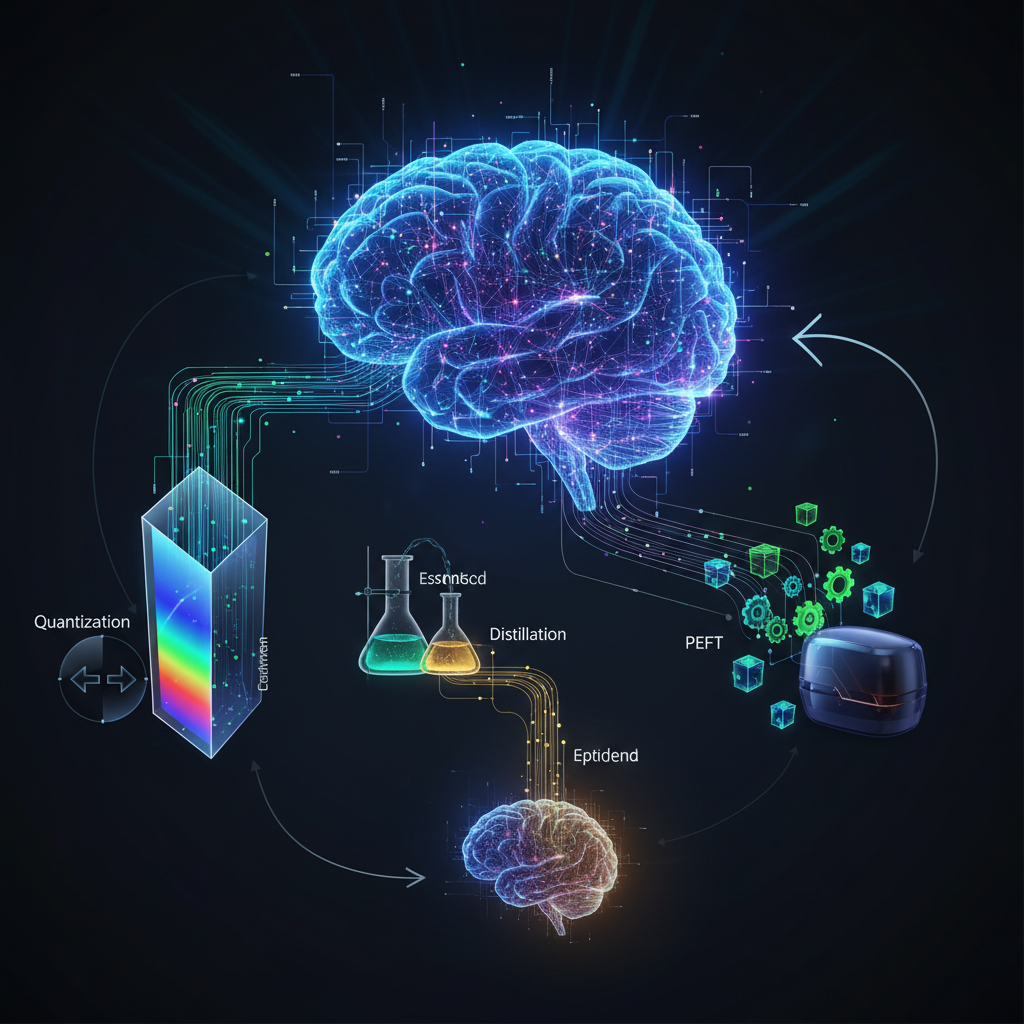
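The next-token prediction objective described above can be sketched as a cross-entropy loss over an interleaved sequence. The example assumes the loss is computed only where the target is a text token, which is one common choice when the model outputs text only; models that also generate visual tokens would score those positions too. The placeholder id, vocabulary size, and random logits are hypothetical.

```python
import numpy as np


def masked_next_token_loss(logits, targets, target_is_text):
    """Mean cross-entropy over positions whose target is a text token."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    nll = -log_probs[np.arange(len(targets)), targets]
    mask = target_is_text.astype(float)
    return (nll * mask).sum() / mask.sum()


# Interleaved sequence: three image placeholders followed by three text tokens.
IMAGE_PLACEHOLDER = 0  # hypothetical id marking visual-token positions
token_ids = np.array([0, 0, 0, 5, 7, 3])
is_text = token_ids != IMAGE_PLACEHOLDER

# Position t predicts token t + 1, so targets are the sequence shifted by one.
targets = token_ids[1:]
target_is_text = is_text[1:]

VOCAB = 10
rng = np.random.default_rng(0)
logits = rng.normal(size=(len(targets), VOCAB))  # stand-in for LLM outputs

loss = masked_next_token_loss(logits, targets, target_is_text)
print(f"loss over {int(target_is_text.sum())} text targets: {loss:.3f}")
```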

Fine-tuning and Alignment: Unlocking Practical Capabilities

While pre-training establishes foundational multimodal understanding, fine-tuning is crucial for aligning the model's behavior with specific user instructions and practical tasks.

- Instruction Tuning: This involves fine-tuning the MLLM on datasets of multimodal instructions and their corresponding responses. These datasets can be human-annotated (e.g., asking a model to describe an image in a specific style) or synthetically generated. This process teaches the model to follow complex commands and produce desired outputs.

- Low-Rank Adaptation (LoRA): LoRA is widely used for efficient fine-tuning, especially with large LLMs. Instead of updating all parameters of the large model, LoRA freezes the pre-trained weights and injects pairs of small, trainable low-rank matrices into the Transformer layers, significantly reducing the number of trainable parameters and the computational cost. This makes it feasible to fine-tune MLLMs on custom datasets without requiring massive computational resources.
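A minimal NumPy sketch of the LoRA idea follows: the frozen weight `W` is augmented with a low-rank update `B @ A`, and only `A` and `B` would be trained. The class name `LoRALinear`, the layer size, and the `rank` and `alpha` values are illustrative assumptions, and no training loop is shown.

```python
import numpy as np


class LoRALinear:
    """Linear layer computing x @ (W + scale * B @ A).T with W frozen."""

    def __init__(self, weight: np.ndarray, rank: int = 4, alpha: float = 8.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                          # frozen pre-trained weight
        self.a = rng.normal(0, 0.01, (rank, d_in))    # trainable, small random init
        self.b = np.zeros((d_out, rank))              # trainable, zero init
        self.scale = alpha / rank

    def __call__(self, x: np.ndarray) -> np.ndarray:
        base = x @ self.weight.T
        update = (x @ self.a.T) @ self.b.T            # low-rank path, never forms B @ A
        return base + self.scale * update

    def trainable_params(self) -> int:
        return self.a.size + self.b.size


rng = np.random.default_rng(1)
w = rng.normal(size=(512, 512))
layer = LoRALinear(w, rank=4)
x = rng.normal(size=(2, 512))

# With B initialised to zero, the adapted layer starts out identical to the base layer.
print(np.allclose(layer(x), x @ w.T))        # True
print(layer.trainable_params(), w.size)      # 4096 262144
```

In this toy layer the adapter trains 4,096 parameters against 262,144 in the frozen weight, which is where the savings come from.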

Emergent Capabilities: Beyond Simple Description

The combination of these techniques leads to emergent capabilities that go far beyond simple image captioning:

- Visual Question Answering (VQA): Answering complex questions about an image (e.g., "How many people are wearing hats?" or "What is the purpose of the device shown?").

- Visual Grounding: Identifying specific objects or regions in an image based on textual descriptions (e.g., "Point to the blue car").

- Object Detection via Language: Using natural language to describe objects to be detected, rather than relying on predefined categories.

- Generating Code from Screenshots: Some MLLMs can interpret a UI screenshot and generate the corresponding HTML/CSS/JS code.

- Multimodal Reasoning: Performing logical deductions or inferences that require understanding both visual and textual context (e.g., "Is this person likely to be cold given their attire and surroundings?").

Emerging Trends and the Future of Grounded AI

MLLMs are not static; the field is evolving rapidly, pushing the boundaries of what's possible.

- Beyond Image-Text: While image-text remains the dominant modality pair, the trend is towards incorporating more sensory inputs.

  - Audio: Integrating speech-to-text, sound event detection, and even music understanding to create models that can "hear" the world.

  - Video: Adding temporal reasoning capabilities to understand dynamic scenes, actions, and narratives over time.

  - Structured Data: Combining visual and textual information with tabular data or knowledge graphs for richer context.

- Grounded AI: MLLMs are a significant step towards "grounded" AI, where models connect abstract linguistic concepts to concrete sensory experiences. This addresses a long-standing limitation of text-only models, which often "hallucinate" or lack real-world understanding because they operate solely within a linguistic space. By grounding language in perception, MLLMs can develop a more robust and reliable understanding of the world.

- Embodied AI & Robotics: MLLMs are becoming critical components for embodied agents and robots. They enable robots to:

  - Understand Natural Language Instructions: "Pick up the red mug on the table next to the laptop."

  - Perceive Their Environment: Identify objects, assess spatial relationships, and understand the context of a scene.

  - Plan Actions: Based on instructions and perception, formulate a sequence of actions.

  - Report Back: Describe their actions, observations, or ask clarifying questions in a human-understandable way.

- Efficiency and Open-Source: There's a strong push for more efficient MLLMs (smaller models, faster inference, quantized versions) and a growing ecosystem of open-source MLLMs. This democratizes access, accelerates research, and fosters innovation by allowing a wider community to build upon and improve these models.
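As one example of the efficiency techniques mentioned above, the sketch below applies symmetric per-tensor int8 quantization to a weight matrix. It is a simplified illustration under assumed sizes and random weights; production schemes are usually finer-grained (for example per-channel or group-wise) and may use fewer bits.

```python
import numpy as np


def quantize_int8(weights: np.ndarray):
    """Symmetric per-tensor quantization: floats -> int8 values plus one scale."""
    max_abs = max(float(np.abs(weights).max()), 1e-12)  # guard against all zeros
    scale = max_abs / 127.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
w = rng.normal(0, 0.02, (256, 256)).astype(np.float32)  # stand-in weight matrix

q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)

print(q.nbytes / w.nbytes)                    # 0.25
print(float(np.abs(w - w_hat).max()), scale)  # worst-case error vs. step size
```

Storage drops to a quarter of float32, and the rounding error per weight is bounded by about half the quantization step `scale`.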

Practical Applications for Practitioners and Enthusiasts

The capabilities of MLLMs translate into a vast array of practical applications across numerous industries, offering exciting opportunities for AI practitioners and enthusiasts alike.

1. Enhanced Content Creation & Editing

- Automatic Image Captioning & Description: Beyond simple captions, MLLMs can generate detailed, context-aware descriptions for accessibility (e.g., for visually impaired users), SEO, or content generation. For instance, an MLLM could describe an image of a bustling market as: "A vibrant outdoor market scene with a diverse crowd of people browsing stalls filled with fresh produce, textiles, and artisanal crafts under a clear blue sky."

- Visual Storytelling: Generate narratives from image sequences, or create compelling visual content from textual prompts with specific stylistic requirements. Imagine an MLLM taking a series of vacation photos and generating a travelogue.

- Design & UI/UX: MLLMs can analyze design mockups, provide feedback based on visual best practices, or even generate UI elements from textual descriptions (e.g., "Create a login form with a blue button and a 'Forgot Password' link").

2. Advanced Customer Service & Support

- Troubleshooting: Users can upload images or videos of a broken product, an error message on a screen, or a malfunctioning appliance. The MLLM can diagnose the issue, identify the specific component, and provide step-by-step troubleshooting instructions or link to relevant support articles.

- Product Identification: Customers can snap a photo of a product they like, and the MLLM can identify it, provide product details, reviews, and purchasing options from an e-commerce catalog.

3. Healthcare & Diagnostics

- Medical Image Analysis: Assisting doctors by interpreting X-rays, MRIs, CT scans, or pathology slides. MLLMs can identify anomalies, highlight regions of interest, and generate preliminary reports, potentially speeding up diagnosis and reducing human error. For example, an MLLM could analyze an X-ray and flag potential fractures or tumors.

- Patient Education: Explaining complex medical concepts using visual aids. An MLLM could interpret a diagram of the human circulatory system and answer a patient's questions in simple terms.

4. E-commerce & Retail

- Visual Search: "Shop the look" features where users upload an image of an outfit or item they like and find similar clothing, accessories, or home decor items available for purchase.

- Personalized Recommendations: Understanding user preferences from visual cues in their past purchases, browsing history, or even social media images, leading to more relevant product recommendations.

- Inventory Management: Visually identifying and counting items in a warehouse or store shelf.
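Visual search of the kind described above is often built on embedding similarity: the query image and every catalog item are embedded into the same vector space, and the nearest catalog vectors are returned. The sketch below assumes the embeddings already exist (random vectors stand in for the output of an image encoder), and `top_k_similar` is a hypothetical helper name.

```python
import numpy as np


def top_k_similar(query_emb: np.ndarray, catalog_embs: np.ndarray, k: int = 3):
    """Return (index, cosine similarity) for the k catalog items closest to the query."""
    q = query_emb / np.linalg.norm(query_emb)
    c = catalog_embs / np.linalg.norm(catalog_embs, axis=1, keepdims=True)
    scores = c @ q                       # cosine similarity to every item
    order = np.argsort(-scores)[:k]      # highest similarity first
    return [(int(i), float(scores[i])) for i in order]


rng = np.random.default_rng(0)
catalog = rng.normal(size=(100, 32))     # stand-in for 100 product embeddings

# A user photo that closely resembles catalog item 42.
query = catalog[42] + 0.05 * rng.normal(size=32)

results = top_k_similar(query, catalog)
print(results[0][0])  # 42
```

For large catalogs, the exhaustive scan here would typically be replaced by an approximate nearest-neighbor index.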

5. Robotics & Autonomous Systems

- Instruction Following: A robot equipped with an MLLM can understand and execute complex natural language commands in a dynamic environment. For instance, "Go to the kitchen, open the fridge, and bring me the milk carton on the top shelf." The MLLM helps the robot visually identify the kitchen, the fridge, the milk carton, and plan the sequence of actions.

- Scene Understanding: Robots can use MLLMs to describe their environment, identify potential hazards, recognize objects for manipulation, and report their observations back to human operators.

- Human-Robot Collaboration: Facilitating more natural and intuitive interaction between humans and robots through shared understanding of visual and linguistic cues.

6. Education

- Interactive Learning: Explaining diagrams, graphs, scientific illustrations, or historical maps in detail, answering specific questions about their content, and providing additional context.

- Personalized Tutors: Answering questions about visual content in textbooks, online courses, or educational videos, acting as a knowledgeable assistant that can "see" what the student is seeing.

7. Accessibility

- Describing Visual Content: Providing detailed, context-aware descriptions of images and videos for visually impaired users. Unlike simple alt-text, MLLMs can generate rich narratives that capture the essence and important details of visual content, enhancing digital accessibility.

- Real-time Environmental Description: For individuals with visual impairments, an MLLM integrated into a wearable device could describe their surroundings, identify objects, read signs, and navigate spaces.

Conclusion

Multimodal Large Language Models represent a profound leap forward in artificial intelligence. By enabling AI systems to integrate and reason across diverse sensory inputs, MLLMs are paving the way for more intuitive, capable, and human-like AI. They are moving us closer to truly "grounded" intelligence – AI that doesn't just process symbols, but understands the real world through perception and interaction.

For AI practitioners, researchers, and enthusiasts, MLLMs offer a fertile ground for innovation. The field is moving at an astonishing pace, with new models, architectures, and applications emerging almost weekly. Understanding the technical underpinnings, exploring the emergent capabilities, and envisioning novel applications of MLLMs is not just an academic exercise; it's an opportunity to build the next generation of intelligent systems that can perceive, understand, and interact with our multimodal world in ways previously only imagined. The journey towards truly intelligent, context-aware AI is well underway, and MLLMs are undoubtedly leading the charge.