Foundation Models for Computer Vision: The Synergy of ViTs and Generative AI

Explore the paradigm shift in Computer Vision driven by Vision Transformers (ViTs) and Generative AI, especially Diffusion Models. Discover how Foundation Models, pre-trained on vast datasets, are democratizing AI development and accelerating innovation.

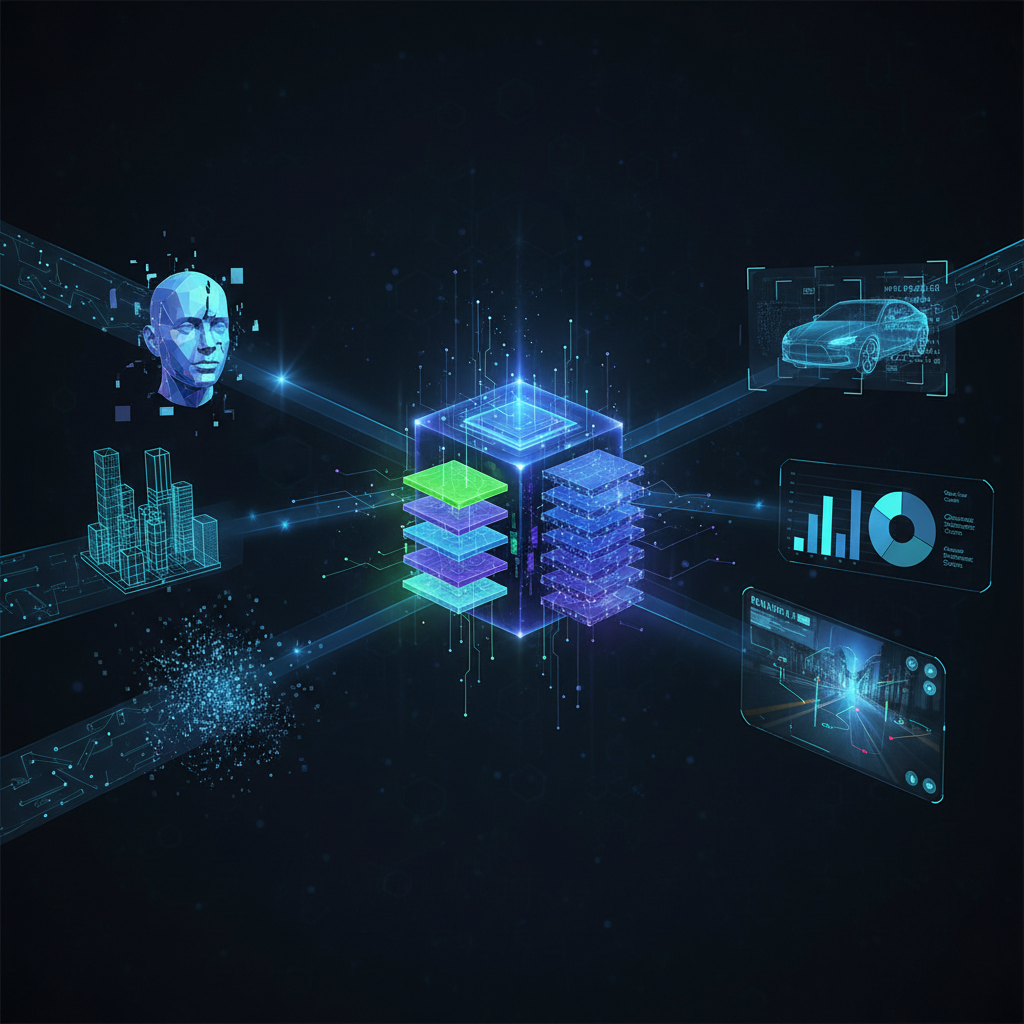

The landscape of artificial intelligence is in constant flux, but every so often, a paradigm shift occurs that fundamentally redefines the field. We are currently witnessing such a transformation in Computer Vision, driven by the convergence of two powerful technologies: Vision Transformers (ViTs) and Generative AI, particularly Diffusion Models. This synergy is giving rise to what we now call Foundation Models for Computer Vision, ushering in an era of unprecedented capabilities and generalization.

Gone are the days when every computer vision task required a bespoke model trained from scratch on carefully curated, task-specific datasets. Foundation Models, pre-trained on vast and diverse datasets, are designed to be adaptable. They learn rich, high-level representations of the visual world, which can then be fine-tuned, prompted, or leveraged with few-shot learning for a multitude of downstream applications. This shift promises to democratize AI development, accelerate innovation, and unlock new frontiers in visual understanding and creation.

The Rise of Universal Feature Extractors: Vision Transformers

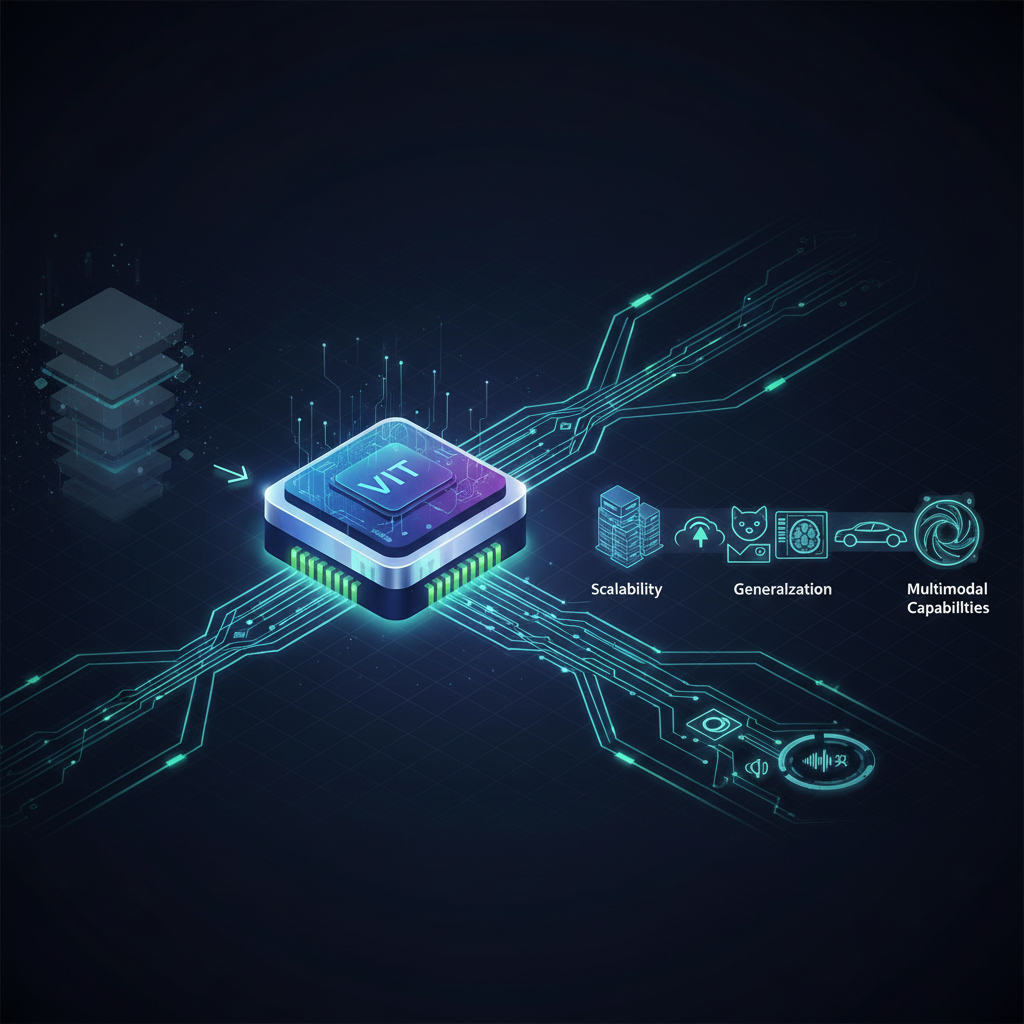

For nearly a decade, Convolutional Neural Networks (CNNs) reigned supreme in computer vision. Their hierarchical structure and ability to capture local features made them incredibly effective for tasks like image classification, object detection, and segmentation. However, a significant breakthrough in 2020 challenged this dominance: the introduction of the Vision Transformer (ViT) by Google.

Inspired by the success of the Transformer architecture in Natural Language Processing (NLP), ViTs demonstrated that the same attention-based mechanism could be applied to images. Instead of processing pixels directly, ViTs divide an image into fixed-size patches, linearly embed them, and treat these embeddings as a sequence of tokens. A standard Transformer encoder then processes this sequence, allowing it to capture long-range dependencies across the entire image – a capability where CNNs traditionally struggled.

Key Developments in Vision Transformers:

- Original ViT (2020): The seminal paper showed that when trained on sufficiently large datasets (like JFT-300M), ViTs could outperform state-of-the-art CNNs on image classification benchmarks. This was a crucial proof of concept, demonstrating the viability of Transformers for vision tasks.

- Data-Efficient Transformers (DeiT, 2021): A common criticism of early ViTs was their heavy reliance on massive datasets. DeiT addressed this by introducing a knowledge distillation strategy, allowing ViTs to be trained effectively on smaller datasets like ImageNet, making them more accessible.

- Self-Supervised Learning (SSL) for ViTs: This area has been instrumental in unlocking the full potential of ViTs. SSL methods allow models to learn powerful visual representations from unlabeled data, which is abundant.

- DINO (Self-Distillation with No Labels, 2021): Meta AI's DINO demonstrated that a ViT trained with self-supervised learning could learn highly semantic features, enabling zero-shot object segmentation without any explicit training for that task. It essentially learns to segment objects by observing patterns and relationships in images.

- MAE (Masked Autoencoders, 2021): Another groundbreaking SSL method from Meta AI, MAE pre-trains ViTs by masking out a large portion of image patches and then reconstructing the missing pixels. This simple yet effective approach forces the model to learn rich, contextual representations of images, leading to state-of-the-art transfer performance on various downstream tasks.

- Hierarchical ViTs (e.g., Swin Transformer, 2021): While original ViTs excelled at classification, their fixed-size patch processing made them less suitable for dense prediction tasks like object detection and semantic segmentation, which require multi-scale feature maps. The Swin Transformer introduced hierarchical feature maps and shifted window attention, making ViTs competitive with CNNs for these pixel-level tasks and improving computational efficiency.

- Large-scale Pre-training with Multimodality: Models like CLIP (Contrastive Language-Image Pre-training, OpenAI, 2021) and ALIGN (Google, 2021) took ViTs to another level by training them on massive internet-scale datasets of image-text pairs. They learn a joint embedding space where semantically similar images and text descriptions are close together. This enables remarkable zero-shot capabilities, such as classifying images based on text descriptions they've never seen during training, or retrieving images using natural language queries.

Practical Value of ViTs: ViTs, especially when pre-trained with self-supervision or multimodal objectives, have become the backbone for many state-of-the-art models. They excel in image classification, object detection, segmentation, and even video understanding. Their ability to capture global context and their strong performance with self-supervised pre-training make them incredibly versatile and a fundamental component of modern computer vision systems.

The Creative Engine: Generative AI with Diffusion Models

While ViTs are revolutionizing how we understand and extract features from images, another class of models is transforming how we create them: Diffusion Models (DMs). These generative models have taken the world by storm with their ability to produce incredibly realistic and diverse images from simple text prompts.

At their core, diffusion models operate on a clever principle: they learn to reverse a gradual "noising" process. Imagine starting with a clear image and slowly adding random noise until it becomes pure static. A diffusion model learns to reverse this process, starting from pure noise and iteratively denoising it step-by-step to reconstruct a coherent, high-quality image.

Key Developments in Diffusion Models:

- Denoising Diffusion Probabilistic Models (DDPMs, 2020): This work laid the theoretical and practical groundwork for stable and high-quality image generation using diffusion. It showed how to effectively train these models and achieve impressive results.

- Latent Diffusion Models (LDMs, CompVis/Stability AI, 2021): A significant leap in efficiency and scalability. Instead of performing the computationally intensive diffusion process directly in the high-dimensional pixel space, LDMs operate in a lower-dimensional latent space. This dramatically reduces computational cost and memory requirements, making them practical for generating high-resolution images. This is the core technology behind popular models like Stable Diffusion.

- Conditional Generation: The true power of DMs lies in their ability to be conditioned on various inputs.

- Text-to-Image Generation: This is the most famous application, exemplified by models like DALL-E 2, Midjourney, and Stable Diffusion. These models take a natural language prompt (e.g., "An astronaut riding a horse in a photorealistic style") and generate a corresponding image. This conditioning is often achieved by integrating powerful text encoders (like those from CLIP) that guide the diffusion process based on the semantic meaning of the prompt.

- Image-to-Image Translation: DMs can transform an input image based on a prompt or another image (e.g., changing the style of a photo, converting a sketch to a realistic image).

- Inpainting and Outpainting: They can intelligently fill in missing parts of an image (inpainting) or extend an image beyond its original borders (outpainting), maintaining consistency with the existing content.

- ControlNet (Stanford/ByteDance, 2023): A revolutionary neural network structure that allows for fine-grained control over diffusion models. ControlNet enables users to guide image generation with additional input conditions such as edge maps, depth maps, human pose skeletons, or segmentation maps. This provides unprecedented precision and control, moving beyond simple text prompts to highly specific visual instructions.

- Video Generation: The capabilities of DMs are now extending to generating entire video sequences, with models like Google's Imagen Video and Meta's Make-A-Video demonstrating the potential for creating dynamic visual content.

Practical Value of Diffusion Models: Diffusion models are revolutionizing creative industries.

- Content Creation: Digital artists, graphic designers, advertisers, and game developers are using DMs to rapidly prototype ideas, generate unique assets, and create stunning visuals.

- Data Augmentation: DMs can generate synthetic data that is highly realistic and diverse, which is invaluable for training other CV models, especially in domains where real-world data is scarce or expensive to collect.

- Image Editing: Powerful tools for semantic image manipulation, style transfer, and intelligent photo editing are emerging.

- Simulation & Virtual Worlds: Creating realistic textures, environments, and objects for virtual reality, augmented reality, and simulations.

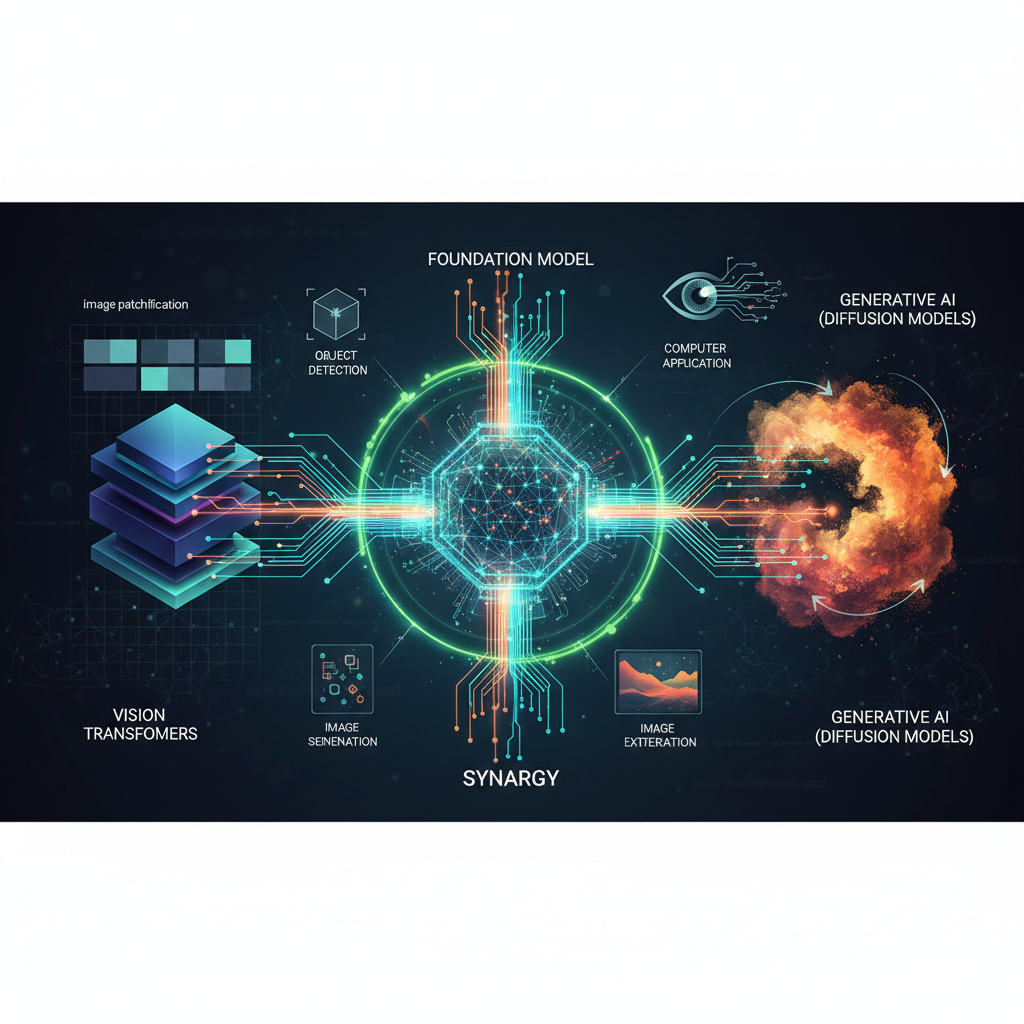

The Synergy: Where ViTs and Diffusion Models Converge

The true magic happens when Vision Transformers and Diffusion Models are brought together. Their integration creates powerful Foundation Models that can both understand and generate visual content with unprecedented sophistication.

- CLIP as the Multimodal Bridge: CLIP (Contrastive Language-Image Pre-training) is a prime example of this synergy. It consists of a ViT-based image encoder and a Transformer-based text encoder, trained together to learn a shared, multimodal embedding space. This joint embedding space is absolutely crucial for guiding text-to-image diffusion models. When you provide a text prompt to Stable Diffusion, for instance, a CLIP text encoder processes that prompt into an embedding. This embedding then conditions the diffusion process, ensuring that the generated image aligns semantically with the text description.

- Zero-Shot Capabilities: The combination amplifies zero-shot learning. CLIP enables zero-shot image classification and retrieval, allowing models to understand new categories without explicit training. Diffusion models, guided by CLIP's understanding, can then generate novel images corresponding to these previously unseen concepts.

- Multimodality: This convergence moves beyond mere pixels to semantic concepts. It allows for sophisticated multimodal understanding (e.g., answering questions about images, describing images in natural language) and generation (e.g., creating images from text, editing images with text commands).

- Efficient Adaptation: The pre-trained weights from large ViT models, often trained with self-supervision, provide an excellent starting point for fine-tuning on specific downstream tasks. This significantly reduces the data and computational resources required for new applications.

- "World Models" Potential: The long-term vision is that these models, trained on vast quantities of diverse data and leveraging both robust understanding (ViTs) and creative generation (DMs), could learn rich, high-level representations of the visual world. This could lead to more general, adaptable, and intelligent visual AI systems that can reason about and interact with their environment in human-like ways.

Practical Examples and Use Cases

Let's delve into some concrete examples of how these Foundation Models are being applied:

-

Creative Content Generation:

- Marketing & Advertising: Rapidly generate diverse ad creatives, product mockups, or social media content tailored to specific campaigns and demographics. Imagine generating 100 variations of an ad banner in minutes.

- Gaming & Entertainment: Create unique game assets, character designs, environmental textures, or concept art. Artists can iterate on ideas much faster, focusing on refinement rather than initial creation.

- Personalized Art: Users can generate custom artwork, illustrations, or even photorealistic images based on their imagination, leading to new forms of self-expression.

-

Data Augmentation & Synthetic Data Generation:

- Rare Event Detection: In domains like medical imaging or industrial inspection, certain anomalies are extremely rare. Diffusion models can generate synthetic examples of these rare events, significantly improving the training data for anomaly detection systems.

- Privacy-Preserving AI: Generate synthetic datasets that mimic the statistical properties of real data without containing any actual sensitive information, enabling AI development in privacy-sensitive sectors.

- Robotics & Simulation: Create diverse training environments and scenarios for robotic agents, allowing them to learn robust behaviors without extensive real-world trials.

-

Advanced Image Editing & Manipulation:

- Semantic Editing: Change objects or attributes in an image using natural language. For example, "change the car to red," or "add a dog sitting on the couch."

- Style Transfer: Apply the artistic style of one image to another, or generate new images in a specific artistic style (e.g., "a cityscape in the style of Van Gogh").

- Image Restoration: Denoise old photos, colorize black and white images, or intelligently remove unwanted objects (inpainting) with remarkable realism.

-

Zero-Shot and Few-Shot Learning:

- Novel Object Recognition: A factory might need to inspect a new product line. Instead of collecting thousands of images for training, a CLIP-enabled system can identify defects with just a few examples, or even zero-shot with a descriptive text prompt.

- Visual Search: Search for images using natural language descriptions, even for concepts not explicitly tagged in the dataset. "Find images of serene landscapes with a single tree."

-

Multimodal Understanding:

- Image Captioning: Automatically generate descriptive captions for images, aiding accessibility and content organization.

- Visual Question Answering (VQA): Answer complex questions about the content of an image, demonstrating a deeper understanding of visual semantics.

Challenges and Future Directions

Despite their incredible potential, Foundation Models for Computer Vision face several challenges that are active areas of research:

- Computational Cost: Training these models from scratch requires immense computational resources (GPUs, energy), making them accessible only to large organizations. Research into more efficient architectures and training methods is crucial.

- Data Requirements: While self-supervised learning reduces the need for labeled data, the initial pre-training still often requires massive, diverse datasets. Curating and managing these datasets is a significant undertaking.

- Bias and Ethics: Foundation Models learn from the data they are trained on. If this data contains biases (e.g., underrepresentation of certain demographics, stereotypical portrayals), the models will inherit and amplify these biases, leading to unfair, discriminatory, or harmful outputs. Addressing bias, ensuring fairness, and developing ethical guidelines are paramount.

- Interpretability and Explainability: These models are incredibly complex "black boxes." Understanding why they make certain decisions or generate specific images is challenging, hindering trust and debugging.

- Robustness and Adversarial Attacks: Ensuring the reliability and security of these models against adversarial attacks (subtly altered inputs designed to fool the model) is an ongoing concern.

- Efficient Deployment: The large size of these models makes deployment on edge devices or in real-time applications challenging. Techniques like model compression, quantization, and specialized hardware are being explored.

- Novel Architectures and Modalities: Research continues into new Transformer variants, improvements to diffusion models, and exploring their application to other modalities like 3D data, audio, and more complex interactive environments.

Conclusion

The convergence of Vision Transformers and Diffusion Models has ushered in a new era for Computer Vision, characterized by the rise of powerful Foundation Models. These models are not just incremental improvements; they represent a fundamental shift in how we approach AI development, moving towards more general, adaptable, and multimodal intelligence.

From revolutionizing creative industries with text-to-image generation to enhancing scientific discovery and enabling more robust AI systems through synthetic data, the impact is already profound. For AI practitioners and enthusiasts, understanding these foundational concepts, their practical applications, and the ongoing research challenges is not just beneficial – it's absolutely essential to navigate and contribute to the future of visual AI. The journey towards truly generalized visual intelligence is well underway, and Foundation Models are leading the charge.