Foundation Models in Computer Vision: A Paradigm Shift for AI

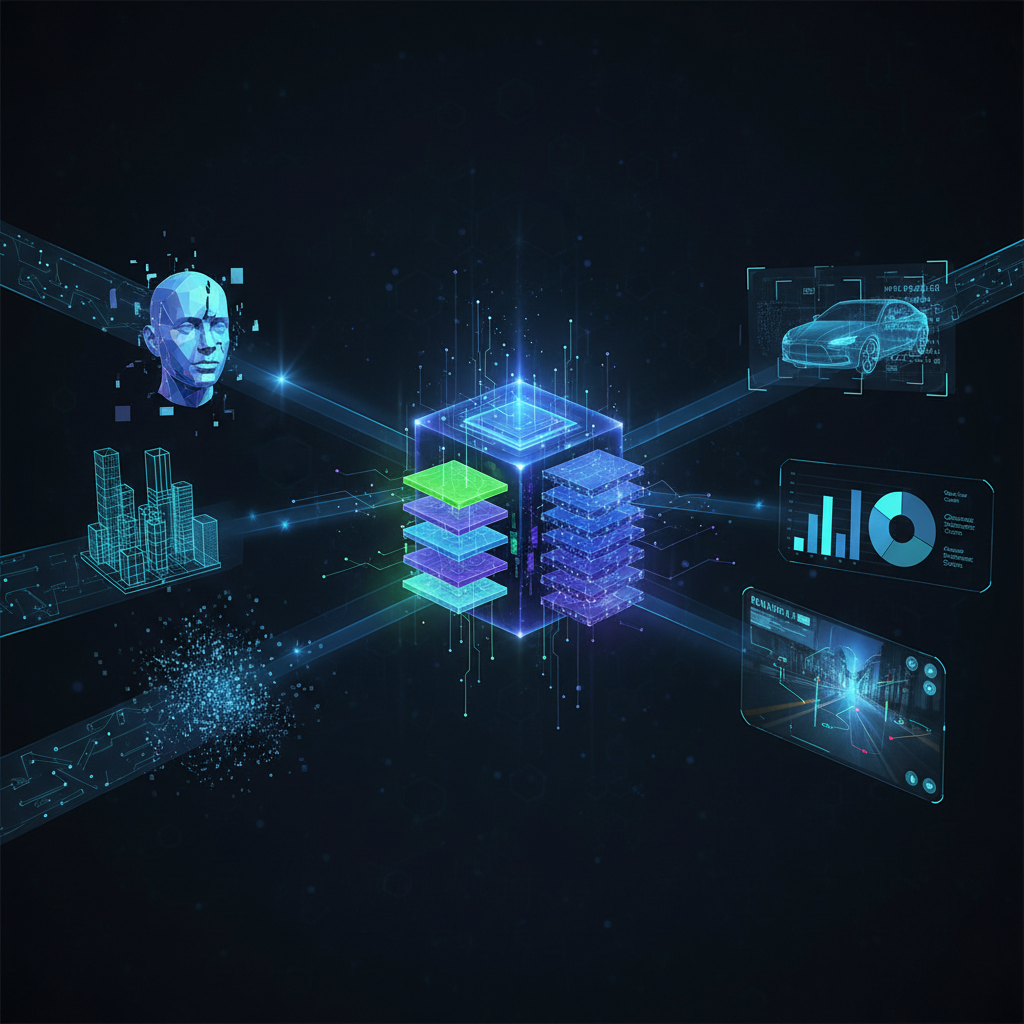

Explore the revolutionary impact of Foundation Models on Computer Vision, moving beyond specialized AI to general-purpose visual intelligence. This shift promises to democratize advanced CV capabilities and unlock unprecedented applications, mirroring the LLM revolution in NLP.

The landscape of artificial intelligence is in constant flux, but every so often a shift fundamentally redefines what's possible. We saw this with Large Language Models (LLMs) in Natural Language Processing (NLP), and a similar shift is now under way in Computer Vision (CV) with the advent of Foundation Models. These are not incremental improvements; they mark a move from task-specific models toward general-purpose visual intelligence.

The Dawn of General-Purpose Visual Intelligence

For decades, computer vision largely relied on training specialized models for specific tasks. Want to classify cats? Train a cat classifier. Need to detect cars? Train an object detector. This approach, while effective, was resource-intensive, requiring vast labeled datasets and significant computational power for each new task.

Foundation models challenge this paradigm. Inspired by the success of LLMs, they are massive, pre-trained models designed to learn broad representations from vast, often unlabeled, datasets. The magic lies in their ability to then adapt to a wide array of downstream tasks with minimal fine-tuning, or even zero-shot, that is, with no training examples for that specific task. This shift from task-specific models to general-purpose intelligence is transforming how we approach visual AI.

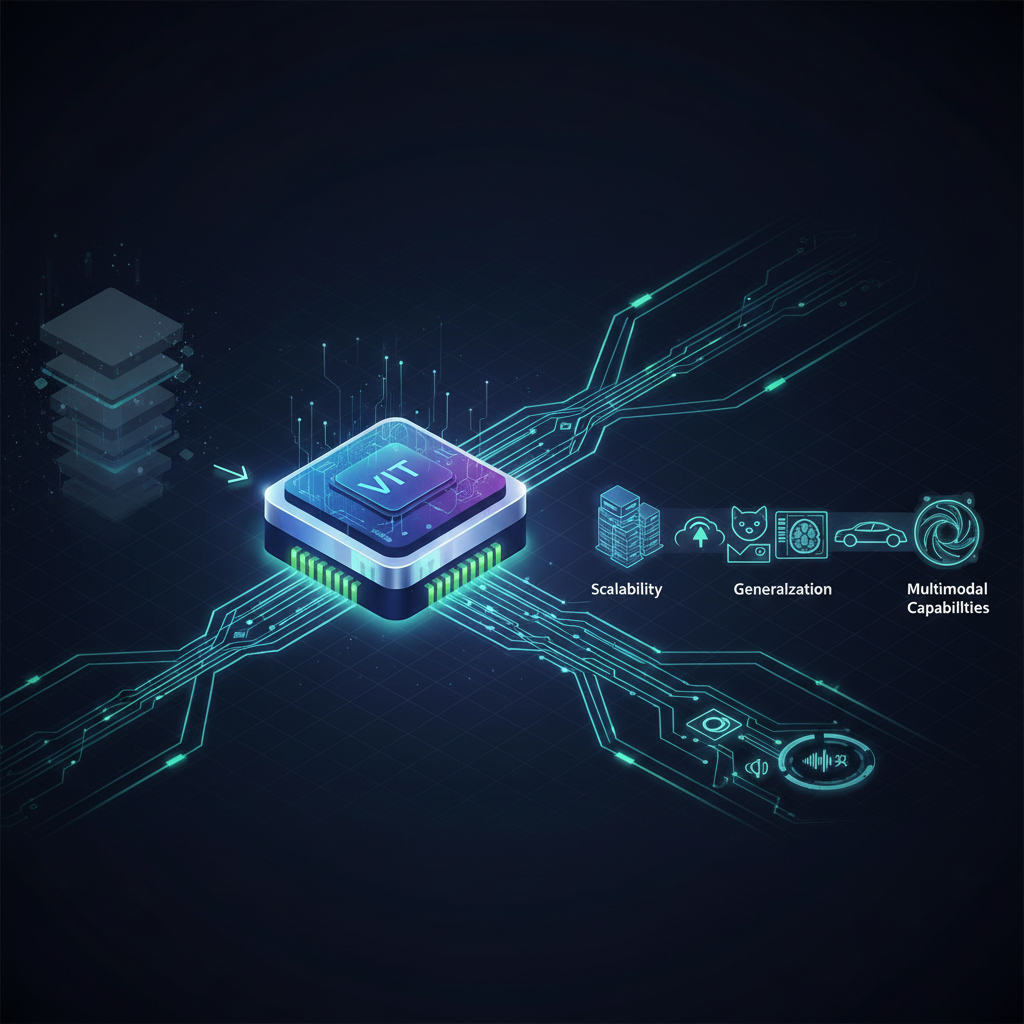

Vision Transformers (ViTs): The Architectural Backbone

The cornerstone of this revolution is the Vision Transformer (ViT). Before ViTs, Convolutional Neural Networks (CNNs) reigned supreme in computer vision. CNNs excel at capturing local features through their convolutional filters, building hierarchical representations. However, each filter only sees a small neighborhood, so relating distant parts of an image requires deep stacks of layers or additional architectural machinery.

ViTs, introduced in 2020 by Google, boldly adapted the Transformer architecture – originally designed for NLP – to image data. The core idea is surprisingly simple:

- Image Patching: An input image is divided into a grid of fixed-size patches (e.g., 16x16 pixels).

- Linear Embedding: Each patch is flattened and linearly projected into a fixed-size embedding vector.

- Positional Encoding: Self-attention has no built-in notion of token order, so positional embeddings are added to the patch embeddings to retain spatial information.

- Transformer Encoder: This sequence of patch embeddings is then fed into a standard Transformer encoder, which consists of multiple layers of multi-head self-attention and feed-forward networks.

This approach allows ViTs to capture global relationships between distant parts of an image, something CNNs inherently struggle with.
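To make the first three steps concrete, here is a minimal sketch in plain Python. It assumes a toy 32x32 single-channel image, random (untrained) projection weights, and randomly initialized positional embeddings; the helper names `patchify`, `linear_embed`, and `add_positions` are illustrative, not part of any library. A real ViT also works on three color channels and prepends a learnable class token, both of which are omitted here.

```python
import random


def patchify(image, patch_size):
    """Split an H x W image (list of rows) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    assert height % patch_size == 0 and width % patch_size == 0
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


def linear_embed(patches, weights):
    """Project each flattened patch with a (patch_dim x embed_dim) weight matrix."""
    embed_dim = len(weights[0])
    return [[sum(p[i] * weights[i][j] for i in range(len(p)))
             for j in range(embed_dim)]
            for p in patches]


def add_positions(embeddings, positions):
    """Add one positional embedding vector to each patch embedding."""
    return [[e + p for e, p in zip(emb, pos)]
            for emb, pos in zip(embeddings, positions)]


rng = random.Random(0)
image = [[rng.random() for _ in range(32)] for _ in range(32)]  # toy grayscale image
patch_size, embed_dim = 16, 8

patches = patchify(image, patch_size)
weights = [[rng.gauss(0, 0.02) for _ in range(embed_dim)]
           for _ in range(patch_size ** 2)]
positions = [[rng.gauss(0, 0.02) for _ in range(embed_dim)] for _ in patches]
tokens = add_positions(linear_embed(patches, weights), positions)

# 4 patches of 256 pixels become 4 tokens of dimension 8
print(len(patches), len(patches[0]), len(tokens), len(tokens[0]))  # 4 256 4 8
```

The same arithmetic applied to a 224x224 image with 16x16 patches gives (224 / 16)² = 196 tokens, which is the sequence the Transformer encoder would then process.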

Architectural Variants and Self-Supervised Pre-training

While vanilla ViTs showed promising results, their performance often lagged behind CNNs unless they were pre-trained on massive datasets. This led to a flurry of innovations:

- Swin Transformers: Compute self-attention within local windows that shift between layers, and merge patches into a hierarchy of feature maps, instead of attending globally. The attention cost then grows linearly rather than quadratically with image size, making Swin Transformers more efficient and scalable, especially for dense prediction tasks like segmentation.

- Masked Autoencoders (MAE): Developed by Meta AI, MAE applies the "masked language modeling" idea from BERT to images. A large portion of the image patches is masked (75% in the original paper), and the model is trained to reconstruct the original pixel values of the masked patches. This self-supervised pre-training strategy teaches the model rich visual representations without any labels.

- DINO (Self-Distillation with No Labels): A self-supervised method in which a "student" ViT learns to match the output of a "teacher" ViT (a moving average of the student's own weights) on different augmented views of the same image, without labels or negative pairs. It is particularly effective at learning features that transfer to tasks like segmentation and object detection.
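The masking step behind MAE is simple enough to sketch directly. The snippet below is a toy illustration, not Meta's implementation: the function names are made up for this example, the mask ratio of 0.75 follows the value reported in the MAE paper, and the "prediction" is just a placeholder of zeros so that the loss function has something to score.

```python
import random


def random_masking(num_patches, mask_ratio, rng):
    """Randomly split patch indices into (visible, masked) sets for one image."""
    num_masked = int(num_patches * mask_ratio)
    order = list(range(num_patches))
    rng.shuffle(order)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked


def masked_mse(prediction, target, masked):
    """Mean squared error over the pixels of masked patches only."""
    errors = [(p - t) ** 2
              for i in masked
              for p, t in zip(prediction[i], target[i])]
    return sum(errors) / len(errors)


rng = random.Random(0)
visible, masked = random_masking(num_patches=196, mask_ratio=0.75, rng=rng)
print(len(visible), len(masked))  # 49 147

# Toy targets and a placeholder "reconstruction" (all zeros) to exercise the loss
target = [[rng.random() for _ in range(4)] for _ in range(196)]
prediction = [[0.0] * 4 for _ in range(196)]
print(f"reconstruction loss on masked patches: {masked_mse(prediction, target, masked):.4f}")
```

Only the 49 visible patches would be passed to the encoder, which is a large part of why this style of pre-training is comparatively cheap.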

Practical Implications: ViTs, especially their optimized variants, have demonstrated superior performance on various benchmarks, particularly when trained on large datasets. Their ability to model long-range dependencies makes them powerful for tasks requiring a holistic understanding of an image. For practitioners, this means access to powerful pre-trained models that can serve as robust backbones for a wide range of CV tasks.

Vision-Language Models (VLMs): Bridging the Modality Gap

Perhaps the most exciting development in foundation models for CV is the convergence of vision and language. Vision-Language Models (VLMs) are designed to understand and generate content across these two fundamental human modalities, leading to models that can "see" and "speak" about the world.

CLIP: The Game Changer

Contrastive Language-Image Pre-training (CLIP), developed by OpenAI, was a groundbreaking VLM. It learns visual concepts from natural language supervision, rather than relying on manually annotated labels.

Mechanism: CLIP is trained on a massive dataset of 400 million image-text pairs scraped from the internet. It simultaneously trains an image encoder and a text encoder to embed images and their corresponding captions into the same high-dimensional latent space. The training objective is to maximize the cosine similarity between correct image-text pairs and minimize it for incorrect pairs.
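The training objective can be sketched as a symmetric cross-entropy over a batch's similarity matrix. This is a simplified illustration in plain Python, with hand-written 2-D embeddings standing in for encoder outputs and a fixed temperature of 0.07 as an assumption (CLIP learns its temperature during training). The function names are illustrative.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot products equal cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def cross_entropy(logits, target_index):
    """Numerically stable cross-entropy for a single row of logits."""
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return log_sum_exp - logits[target_index]


def clip_style_loss(image_embeddings, text_embeddings, temperature=0.07):
    """Symmetric contrastive loss: pair i of images and texts is the correct match."""
    images = [normalize(v) for v in image_embeddings]
    texts = [normalize(v) for v in text_embeddings]
    n = len(images)
    sim = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
           for img in images]
    image_to_text = sum(cross_entropy(sim[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([sim[i][j] for i in range(n)], j)
                        for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


image_batch = [[1.0, 0.0], [0.0, 1.0]]
matching_text = [[1.0, 0.0], [0.0, 1.0]]     # each caption aligned with its image
mismatched_text = [[0.0, 1.0], [1.0, 0.0]]   # captions swapped

aligned_loss = clip_style_loss(image_batch, matching_text)
swapped_loss = clip_style_loss(image_batch, mismatched_text)
print(f"aligned: {aligned_loss:.6f}  swapped: {swapped_loss:.4f}")
```

The aligned batch yields a loss close to zero, while the swapped batch is heavily penalized, which is the pressure that pulls matching image and text embeddings together during training.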

How CLIP Enables Zero-Shot Classification: Imagine you want to classify an image as "cat," "dog," or "bird." With CLIP, you don't need to fine-tune a model. You simply:

- Encode the image using the CLIP image encoder.

- Encode the text prompts "a photo of a cat," "a photo of a dog," and "a photo of a bird" using the CLIP text encoder.

- Calculate the similarity (e.g., cosine similarity) between the image embedding and each text embedding.

- The class corresponding to the highest similarity is the predicted label.

```python
# Conceptual example: zero-shot classification with CLIP
# Requires: pip install transformers torch pillow requests
import requests
import torch
from PIL import Image
from transformers import CLIPModel, CLIPProcessor

# Load pre-trained CLIP model and processor
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

# Example image
url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# Candidate labels
candidate_labels = ["a photo of a cat", "a photo of a dog", "a photo of a remote control"]

# Process inputs
inputs = processor(text=candidate_labels, images=image, return_tensors="pt", padding=True)

# Get model outputs (no gradients are needed for inference)
with torch.no_grad():
    outputs = model(**inputs)
logits_per_image = outputs.logits_per_image  # image-text similarity scores
probs = logits_per_image.softmax(dim=1)  # convert to probabilities

# Output results
for i, label in enumerate(candidate_labels):
    print(f"'{label}': {probs[0][i].item():.4f}")

# Expected output (approximate; exact values vary with model and library versions,
# and the sample image also contains remote controls, so the split may differ):
# 'a photo of a cat': 0.9990
# 'a photo of a dog': 0.0009
# 'a photo of a remote control': 0.0001
```

Applications: CLIP's capabilities are vast:

- Zero-shot image classification: Classify images into categories the model was never explicitly trained to recognize.

- Image retrieval: Find images based on natural language queries.

- Content moderation: Identify inappropriate content using textual descriptions.

- Guidance for generative AI: CLIP embeddings condition models like DALL-E 2 and Stable Diffusion, steering them toward images that match a text prompt.
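Image retrieval, for instance, reduces to ranking stored image embeddings by their similarity to a query embedding. The sketch below uses hand-written 3-D vectors in place of real CLIP embeddings, and the file names, the `retrieve` helper, and the query vector are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_embedding, image_index, top_k=2):
    """Return the names of the top_k images most similar to the query."""
    scored = [(cosine_similarity(query_embedding, embedding), name)
              for name, embedding in image_index.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


# Hypothetical pre-computed image embeddings (a real index would store encoder outputs)
image_index = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.0, 0.2, 0.9],
}
query = [0.8, 0.3, 0.0]  # stand-in for the text embedding of "a sunny beach"

print(retrieve(query, image_index))  # ['beach.jpg', 'forest.jpg']
```

In practice the image embeddings are computed once and stored, so each new text query only costs one pass through the text encoder plus a similarity search.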

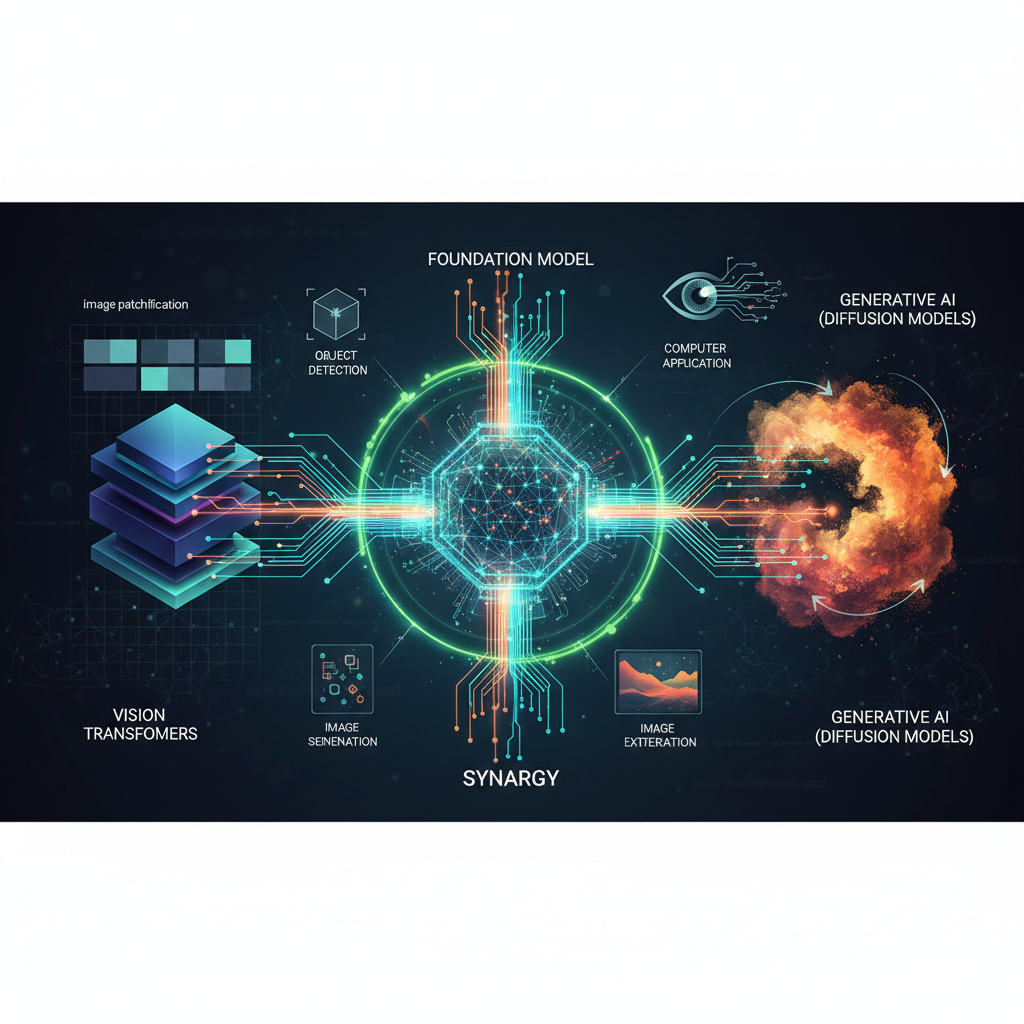

Generative VLMs: Text-to-Image Synthesis

The power of VLMs extends to generation. Models like DALL-E 2 and Stable Diffusion build on the visual-language understanding of CLIP (or similar text encoders) to create stunning images from text prompts; Midjourney offers comparable capabilities, although its architecture is not public. Such systems typically involve:

- A text encoder (often a VLM component) to create a latent representation of the text prompt.

- A diffusion model that iteratively refines random noise into an image, guided by the text embedding.

This synergy allows for unprecedented creative control, enabling users to generate highly specific and imaginative visual content simply by describing it.

Beyond CLIP: Advanced VLMs for Reasoning

Newer VLMs are pushing the boundaries further, integrating large language models with vision encoders for more complex reasoning:

- Flamingo (DeepMind): An "in-context learning" VLM that can perform new vision-language tasks with just a few examples, leveraging a pre-trained LLM and a vision encoder.

- CoCa (Contrastive Captioners): Combines contrastive learning (like CLIP) with image captioning, allowing it to excel at both zero-shot classification and generating detailed descriptions.

- LLaVA (Large Language and Vision Assistant): Connects a vision encoder (like CLIP's) to an open-source LLM (like LLaMA) to enable multimodal chat, visual question answering, and instruction following.
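The "connection" in a LLaVA-style model can be pictured as a learned projection that maps vision features into the LLM's token-embedding space, after which visual and text tokens are processed as one sequence. The toy sketch below uses made-up dimensions and random, untrained weights; the helper names are illustrative, and real systems differ in the details of the projector.

```python
import random


def project(features, weights, bias):
    """Linearly map each vision feature vector into the LLM embedding dimension."""
    out_dim = len(bias)
    return [[sum(f[i] * weights[i][j] for i in range(len(f))) + bias[j]
             for j in range(out_dim)]
            for f in features]


def build_multimodal_sequence(visual_tokens, text_tokens):
    """Place projected visual tokens in front of the text token embeddings."""
    return visual_tokens + text_tokens


rng = random.Random(0)
vision_dim, llm_dim = 4, 6  # toy sizes chosen for readability

patch_features = [[rng.random() for _ in range(vision_dim)] for _ in range(3)]
weights = [[rng.gauss(0, 0.1) for _ in range(llm_dim)] for _ in range(vision_dim)]
bias = [0.0] * llm_dim
text_tokens = [[rng.random() for _ in range(llm_dim)] for _ in range(2)]

visual_tokens = project(patch_features, weights, bias)
sequence = build_multimodal_sequence(visual_tokens, text_tokens)
print(len(sequence), len(sequence[0]))  # 5 6
```

Because the visual tokens live in the same space as word embeddings, the language model can attend over them without any change to its own architecture.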

These models are moving towards a future where AI can not only recognize objects but also understand the context, reason about visual scenes, and engage in meaningful conversations about them.

Self-Supervised Learning (SSL): The Data Engine

The success of foundation models, particularly in vision, is inextricably linked to Self-Supervised Learning (SSL). The sheer volume of labeled data required for supervised training of these massive models is prohibitive. SSL offers a powerful alternative: it allows models to learn rich, general-purpose representations from vast amounts of unlabeled data by creating "pretext tasks" where the supervision signal is derived directly from the data itself.

Why SSL is Crucial:

- Scalability: Leverages the abundance of unlabeled data (e.g., billions of images and videos online).

- Reduced Annotation Costs: Significantly cuts down on the expensive and time-consuming process of manual data labeling.

- Robust Representations: Often leads to more robust and generalizable features compared to supervised pre-training, especially when fine-tuned on smaller downstream datasets.

Key SSL Methods:

- Contrastive and Self-Distillation Methods (e.g., SimCLR, MoCo, DINO): These methods train models to produce similar representations for different augmented views of the same image. Contrastive variants additionally push the representations of different images apart in the embedding space.

  - SimCLR (Simple Framework for Contrastive Learning of Visual Representations): Uses two augmented views of an image as a positive pair, and the other images in the batch as negatives.

  - MoCo (Momentum Contrast): Avoids the need for very large batches by keeping a dynamic dictionary of past representations as negatives, encoded by a slowly updated momentum encoder.

  - DINO: As mentioned, uses self-distillation without labels or negative pairs; a student network learns to match a teacher network's output, which in practice groups similar visual features together.

- Masked Image Modeling (e.g., MAE, BEiT): Inspired by masked language modeling in NLP, these techniques involve masking out parts of an image and training the model to reconstruct the missing information. This forces the model to learn meaningful contextual representations.
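One mechanism shared by MoCo's momentum encoder and DINO's teacher is an exponential moving average of another network's weights. The sketch below shows that update on a flat list of numbers standing in for model parameters; the momentum value of 0.9 is chosen only to make the effect visible quickly (published methods use values much closer to 1), and the function name is illustrative.

```python
def momentum_update(slow_weights, fast_weights, momentum=0.999):
    """Move the slow (teacher / key) weights a small step toward the fast (student / query) weights."""
    return [momentum * slow + (1.0 - momentum) * fast
            for slow, fast in zip(slow_weights, fast_weights)]


student = [1.0, -2.0]  # stand-in for weights updated by gradient descent
teacher = [0.0, 0.0]   # stand-in for weights updated only by averaging

after_one_step = momentum_update(teacher, student, momentum=0.9)
print([round(w, 3) for w in after_one_step])  # [0.1, -0.2]

after_many_steps = teacher
for _ in range(100):
    after_many_steps = momentum_update(after_many_steps, student, momentum=0.9)
print([round(w, 3) for w in after_many_steps])  # [1.0, -2.0]
```

Because the slow network changes gradually, it provides stable targets for the fast network to learn from.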

SSL is the unsung hero, enabling the scale and generalization capabilities that define foundation models in computer vision.

Practical Applications and Impact for Practitioners

The rise of foundation models has profound implications for AI practitioners and enthusiasts.

1. Transfer Learning & Fine-tuning:

Foundation models provide incredibly powerful pre-trained weights. Instead of training a model from scratch, practitioners can now:

- Load a pre-trained ViT, Swin Transformer, or CLIP model.

- Replace the final classification head with one suited for their specific task (e.g., 10 classes instead of 1000).

- Fine-tune the model on a relatively small, labeled dataset for their target task.

This drastically reduces the data requirements, training time, and computational resources needed to achieve state-of-the-art performance. Platforms like Hugging Face Transformers provide easy access to these models.

```python
# Conceptual example: fine-tuning a pre-trained ViT for a custom classification task
from transformers import ViTForImageClassification, ViTImageProcessor

# Load a pre-trained ViT model
model_name = "google/vit-base-patch16-224"
image_processor = ViTImageProcessor.from_pretrained(model_name)
model = ViTForImageClassification.from_pretrained(
    model_name,
    num_labels=2,  # e.g., 2 classes
    ignore_mismatched_sizes=True,  # swap the 1000-class ImageNet head for a new 2-class head
)

# Load your custom dataset (e.g., using Hugging Face datasets library)
# from datasets import load_dataset
# dataset = load_dataset("your_custom_dataset")

# Define training arguments and trainer
# from transformers import TrainingArguments, Trainer
# training_args = TrainingArguments(...)
# trainer = Trainer(model=model, args=training_args, train_dataset=dataset["train"], ...)
# trainer.train()

print(f"Model loaded and ready for fine-tuning on {model.config.num_labels} classes.")
```

2. Zero-shot & Few-shot Learning:

This is where foundation models truly shine.

- Zero-shot: Use models like CLIP to classify images into categories they were never explicitly trained on, simply by providing text descriptions of those categories. This is invaluable for rapid prototyping and tasks with evolving class definitions.

- Few-shot: Adapt a pre-trained model to a new task with only a handful of labeled examples. This dramatically reduces the need for extensive data annotation, a major bottleneck in many CV projects.
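One simple few-shot recipe, shown here as an illustration rather than the only option, is to average the frozen embeddings of the few labeled examples per class into a "prototype" and assign new images to the nearest prototype. The 2-D vectors below are hand-written stand-ins for real model embeddings, and the helper names are hypothetical.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def fit_prototypes(support_set):
    """Build one prototype per class from a handful of labeled embeddings."""
    return {label: mean_vector(embeddings) for label, embeddings in support_set.items()}


def predict(embedding, prototypes):
    """Return the label whose prototype is most similar to the embedding."""
    return max(prototypes, key=lambda label: cosine_similarity(embedding, prototypes[label]))


# Two labeled examples per class (stand-ins for frozen backbone embeddings)
support_set = {
    "cat": [[0.9, 0.1], [0.8, 0.2]],
    "dog": [[0.1, 0.9], [0.2, 0.8]],
}
prototypes = fit_prototypes(support_set)

print(predict([0.7, 0.3], prototypes))  # cat
print(predict([0.2, 0.6], prototypes))  # dog
```

No gradient updates are involved, so adding a new class only requires embedding a few more examples and computing one more prototype.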

3. Custom Object Detection & Segmentation:

Models like DETR (DEtection TRansformer) and the Segment Anything Model (SAM) are revolutionizing these tasks.

- DETR: Replaces traditional anchor boxes and non-maximum suppression with a direct set prediction approach using Transformers, simplifying the object detection pipeline.

- SAM: A truly remarkable foundation model for segmentation. It can segment an object from a simple prompt such as a single click or a bounding box; text prompts were explored in the original paper, but the public release is built around point, box, and mask prompts. It was trained on 11 million images and 1.1 billion masks, making it incredibly versatile for interactive segmentation, data annotation, and even creating new datasets.

```python
# Conceptual example: using SAM for interactive segmentation
# Requires the segment-anything package and a downloaded checkpoint file
import cv2
import numpy as np
from segment_anything import SamPredictor, sam_model_registry

sam_checkpoint = "sam_vit_h_4b8939.pth"
model_type = "vit_h"
sam = sam_model_registry[model_type](checkpoint=sam_checkpoint)
predictor = SamPredictor(sam)

# Load image (OpenCV reads BGR; SAM expects RGB)
image = cv2.imread("image.jpg")
image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
predictor.set_image(image)

# Example: segment based on a point click (e.g., click on a dog)
input_point = np.array([[500, 375]])  # x, y coordinates
input_label = np.array([1])  # 1 for foreground, 0 for background

masks, scores, logits = predictor.predict(
    point_coords=input_point,
    point_labels=input_label,
    multimask_output=True,
)

# masks contains multiple possible segmentations; scores indicate confidence
best_mask = masks[np.argmax(scores)]  # keep the highest-scoring mask for visualization
```

4. Ethical Considerations & Bias:

The scale of data used to train foundation models means they can inadvertently learn and perpetuate biases present in the real world.

- Data Bias: If training data overrepresents certain demographics or underrepresents others, the model may perform poorly or unfairly on underrepresented groups.

- Stereotypes: Models trained on internet data can pick up societal stereotypes, leading to biased outputs (e.g., associating certain professions with specific genders).

Addressing these issues requires careful data curation, bias detection techniques, and ongoing research into fairness, transparency, and interpretability. Practitioners must be aware of these risks and deploy models responsibly.
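A first, very basic bias check is to break an evaluation metric down by group instead of reporting a single average. The sketch below assumes a hypothetical list of `(group, true_label, predicted_label)` records; the group names and numbers are invented for illustration, and a real audit would use more than accuracy alone.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy separately for each group in (group, label, prediction) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, label, prediction in records:
        total[group] += 1
        if label == prediction:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Invented evaluation records: (group, true label, model prediction)
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 1, 1),
]

per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group)                    # {'group_a': 0.75, 'group_b': 0.5}
print(f"accuracy gap: {gap:.2f}")   # accuracy gap: 0.25
```

A large gap between groups is a signal to look more closely at how each group is represented in the training and evaluation data.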

5. Computational Demands:

While leveraging pre-trained models is efficient, training foundation models from scratch is immensely resource-intensive, which creates a barrier to entry for smaller organizations. Several techniques help reduce the cost of running and adapting these models:

- Model Distillation: Training a smaller "student" model to mimic the behavior of a larger "teacher" model.

- Quantization: Reducing the precision of model weights (e.g., from 32-bit floating point to 8-bit integers) to reduce memory footprint and speed up inference.

- Efficient Architectures: Developing models that achieve similar performance with fewer parameters or computations.

These techniques are crucial for making foundation models deployable on edge devices or in resource-constrained environments.
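Quantization is the easiest of these to show in a few lines. The following is a minimal sketch of symmetric per-tensor int8 quantization on a handful of made-up weights; production toolkits add calibration, per-channel scales, and other refinements that are left out here, and the function names are illustrative.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.03, 1.0]  # made-up float weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

print(quantized)  # [50, -127, 3, 100]
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(f"scale={scale:.4f}, max round-trip error={max_error:.6f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error bounded by half the scale.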

Emerging Trends and Future Directions

The field of foundation models in CV is exploding with innovation:

- Multimodal Foundation Models: The integration of vision and language is just the beginning. Future models will likely incorporate audio, sensor data, haptic feedback, and more, creating a truly holistic understanding of the world.

- Embodied AI: Foundation models are poised to empower robots with more sophisticated perception, reasoning, and interaction capabilities, moving beyond pre-programmed behaviors to truly intelligent agents.

- Personalization & Continual Learning: Imagine models that adapt and learn continuously from individual user interactions or dynamic environments, becoming more personalized and relevant over time.

- Efficient Architectures for Edge Deployment: Research will continue to focus on making these powerful models smaller, faster, and more energy-efficient, enabling their deployment on mobile phones, drones, and other edge devices.

- Unified Architectures: The ultimate goal might be a single, massive foundation model capable of handling virtually any AI task across modalities, acting as a universal AI agent.

Conclusion

Foundation models are ushering in a new era for computer vision, moving us closer to general-purpose visual intelligence. By leveraging massive datasets and self-supervised learning, these models offer unprecedented generalization, efficiency, and accessibility. From the architectural innovations of Vision Transformers to the multimodal understanding of Vision-Language Models like CLIP, and the powerful segmentation capabilities of SAM, the tools available to practitioners are more potent than ever before.

While challenges remain, particularly around ethical considerations and computational demands, the trajectory is clear: foundation models are not just a fleeting trend but a fundamental shift that will redefine how we build, deploy, and interact with AI systems in the visual domain for years to come. For anyone in AI, understanding and engaging with this paradigm is no longer optional; it's essential for staying at the forefront of innovation.