Foundation Models in Computer Vision: The Revolution of ViTs and Diffusion Models

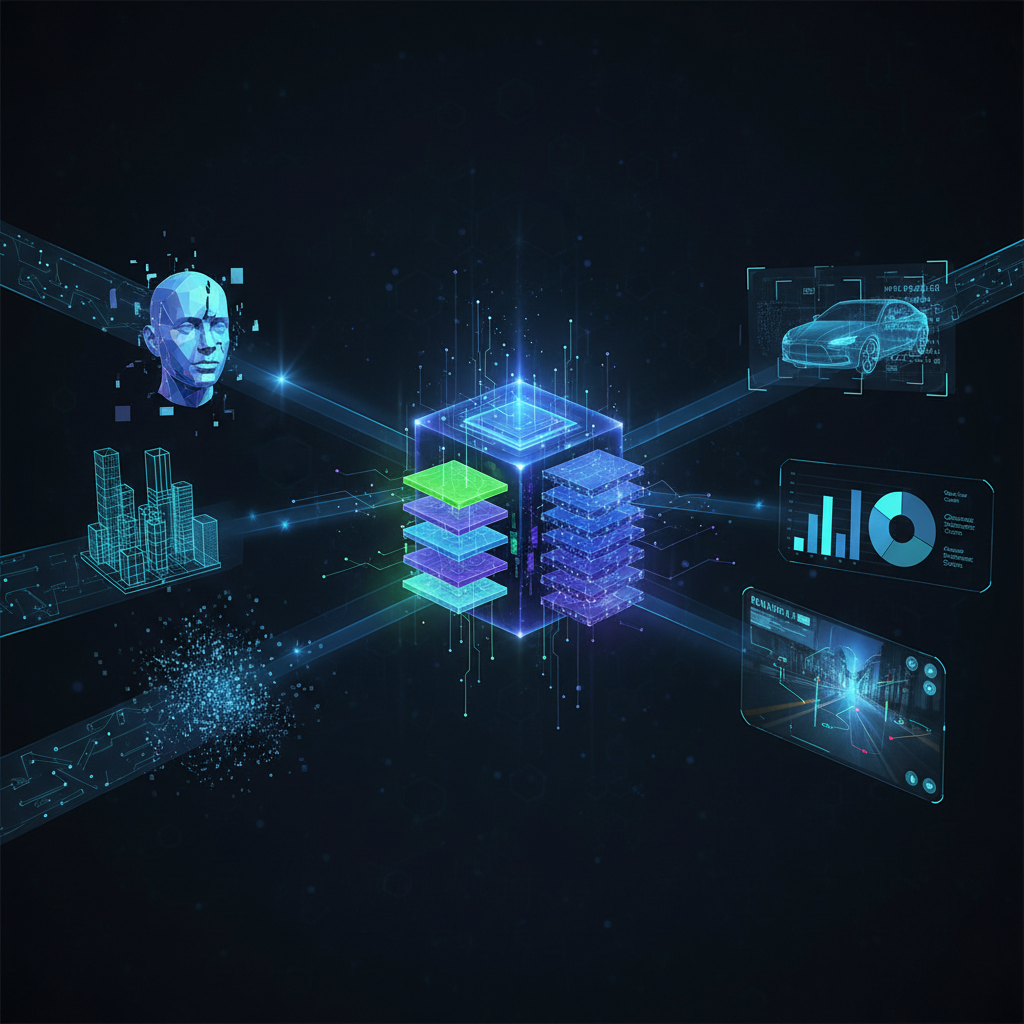

Discover how foundation models, driven by Vision Transformers (ViTs) and Diffusion Models, are transforming Computer Vision. This paradigm shift offers unified, efficient AI capabilities, moving beyond task-specific architectures to unlock unprecedented understanding and generation.

The landscape of artificial intelligence is undergoing a profound transformation, driven by the emergence of "foundation models." These aren't just incremental improvements; they represent a paradigm shift, much as Large Language Models (LLMs) did for Natural Language Processing. In Computer Vision (CV), this shift is powered by the interplay between Vision Transformers (ViTs) and Diffusion Models, which together advance both visual understanding and image generation.

For years, Computer Vision relied on specialized architectures meticulously crafted for specific tasks – CNNs for image classification, R-CNNs for object detection, U-Nets for segmentation. While effective, this approach often led to fragmented knowledge bases and required extensive, task-specific data annotation. Foundation models, however, promise a more unified and efficient future. By pre-training on vast, diverse datasets, these models learn rich, general-purpose representations that can be adapted to a multitude of downstream tasks with minimal fine-tuning, often achieving state-of-the-art results.

This blog post delves into the core components of this revolution: Vision Transformers for robust understanding and representation learning, and Diffusion Models for high-fidelity generative AI. We'll explore their individual strengths, how they converge to create powerful multimodal systems, and the practical implications for AI practitioners and enthusiasts alike.

The Rise of Vision Transformers: Understanding the World Through Attention

The Transformer architecture, initially a breakthrough in NLP, displaced recurrent neural networks by building the whole model around self-attention. Self-attention lets a model weigh every part of an input sequence against every other part, capturing long-range dependencies more effectively. The question for Computer Vision was: how do you treat an image as a sequence?

Core Concept: Patching Up Images

Vision Transformers (ViTs) answered this by treating an image not as a grid of pixels, but as a sequence of fixed-size image patches (for example, 16×16 pixels). Each patch is flattened and linearly projected into an embedding space, and a positional embedding is added to retain spatial information. These patch embeddings then become the "tokens" that feed into a standard Transformer encoder.

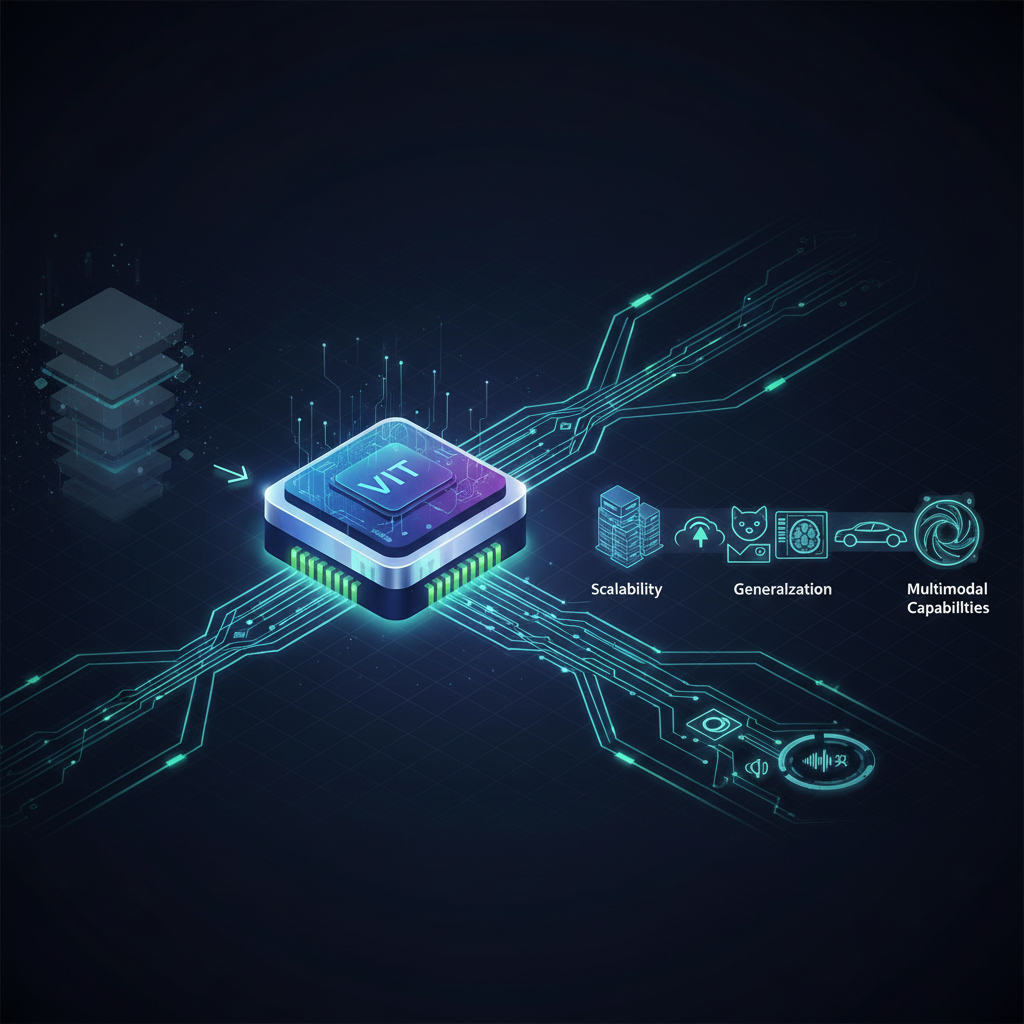
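
The patch-to-token step described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a full ViT: the image is random data, the projection and positional embeddings are random stand-ins for learned parameters, and the patch size of 16 and embedding width of 768 are assumed values in the style of ViT-Base. A real ViT typically also prepends a class token, which is omitted here.

```python
import numpy as np

def patchify(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0, "image must divide evenly"
    rows, cols = h // patch_size, w // patch_size
    patches = image.reshape(rows, patch_size, cols, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (rows, cols, P, P, C)
    return patches.reshape(rows * cols, patch_size * patch_size * c)

rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))   # stand-in for a real RGB image
patch_size, embed_dim = 16, 768     # assumed values, in the style of ViT-Base

patches = patchify(image, patch_size)  # one row per patch

# Random stand-ins for parameters that a real model would learn
projection = rng.normal(0.0, 0.02, (patches.shape[1], embed_dim))
position_embeddings = rng.normal(0.0, 0.02, (patches.shape[0], embed_dim))

# Linear projection plus positional information gives the token sequence
tokens = patches @ projection + position_embeddings
print(patches.shape, tokens.shape)  # (196, 768) (196, 768)
```

A 224×224 image with 16×16 patches yields 14 × 14 = 196 tokens, which is the sequence length the Transformer encoder sees.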

This simple yet powerful adaptation allowed ViTs to:

- Capture global context: A convolutional layer only sees a local neighborhood, so CNNs build up global context gradually through depth. Self-attention lets a ViT relate any patch to any other patch in a single layer, regardless of distance.

- Scale effectively: With sufficient data, ViTs can learn incredibly rich and robust representations, often outperforming traditional CNNs on large-scale benchmarks.
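
To make the "any patch to any other patch" point concrete, here is a minimal single-head self-attention sketch in NumPy. The token values and weight matrices are random placeholders (a trained ViT learns them and uses several heads per layer), and the sizes are assumptions chosen for illustration.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a token sequence."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # similarity of every token pair
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
num_patches, dim = 196, 64                   # assumed sizes for illustration
tokens = rng.normal(size=(num_patches, dim))
w_q, w_k, w_v = (rng.normal(0.0, dim ** -0.5, (dim, dim)) for _ in range(3))

output, weights = self_attention(tokens, w_q, w_k, w_v)
print(weights.shape)  # (196, 196)
```

The attention matrix has one entry per pair of patches, so the top-left patch can draw on the bottom-right patch in a single layer. The cost of that flexibility is that the matrix grows quadratically with the number of patches.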

Recent Developments: Unlocking Deeper Understanding

While initial ViTs required massive datasets for supervised pre-training, subsequent innovations have dramatically expanded their utility and efficiency:

- Self-Supervised Learning (SSL) for ViTs: The true power of foundation models lies in their ability to learn from unlabeled data. SSL techniques enable ViTs to learn powerful visual representations without explicit human annotations.

  - Masked Autoencoders (MAE): Inspired by BERT in NLP, MAE masks out a large portion of image patches (75% by default) and trains the ViT to reconstruct the missing pixels. This forces the model to learn meaningful representations of the visible patches to infer the masked ones.

  - DINOv2: This method builds on DINO (self-distillation with no labels), where a "student" ViT learns to match a "teacher" ViT's representations of different augmented views of the same image. DINOv2's frozen features transfer well to many downstream tasks: with only a lightweight head such as a linear layer on top, they give strong results on classification, semantic segmentation, and depth estimation without fine-tuning the backbone.

  - EsViT: Another self-supervised approach that learns robust features by matching representations across different views of an image, at both the whole-image and the region level.

  These SSL methods are crucial because they allow ViTs to leverage the vast ocean of unlabeled image data available online, leading to more generalizable and powerful models.

- Multi-modal Integration (Vision-Language Models): Perhaps one of the most impactful developments is the integration of vision and language. By training models on vast datasets of image-text pairs, they learn a shared understanding across modalities.

  - CLIP (Contrastive Language-Image Pre-training): Developed by OpenAI, CLIP learns to associate images with their corresponding text descriptions. It trains a text encoder and an image encoder jointly so that their embeddings are close for matching pairs and far apart for non-matching pairs. This enables powerful zero-shot capabilities: you can ask CLIP to classify an image into categories it was never explicitly trained to recognize, simply by providing text descriptions of those categories.

  - LLaVA (Large Language and Vision Assistant) & BLIP-2: These models take multimodal understanding a step further by connecting a pre-trained LLM (Vicuna for LLaVA; OPT or FlanT5 for BLIP-2) with a pre-trained vision encoder. This allows them to perform complex visual question answering, detailed image captioning, and even engage in visual dialogue, bridging the gap between seeing and reasoning.

- Foundation Models for Downstream Tasks: Transformers are not just for classification; they are becoming the backbone for a wide array of CV tasks.

  - Object Detection & Segmentation (DETR, DINO, Mask2Former): Traditional object detection pipelines were complex, involving region proposals, feature extraction, and non-maximum suppression. DETR (DEtection TRansformer) simplified this by predicting a set of bounding boxes and class labels directly with a Transformer encoder-decoder on top of a CNN backbone. Successors like DINO (a DETR-style detector, not to be confused with the self-supervised DINO family above) and Mask2Former have pushed the state of the art, demonstrating that Transformer-based models can handle complex spatial reasoning for precise object localization and segmentation.

  - Generalist Vision Models (SAM - Segment Anything Model): Meta AI's SAM is a groundbreaking example of a generalist vision model. Trained on an enormous dataset of 11 million images and 1.1 billion masks, SAM can segment any object in an image given a simple prompt such as a click or a bounding box (text prompts were explored in the paper but are not part of the released model). Its "promptable" interface makes it incredibly versatile, akin to a universal segmentation tool.
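
The masking idea behind MAE, mentioned in the list above, can be sketched as follows. This is a simplified illustration rather than the reference implementation: the 75% mask ratio is assumed as the default, the patches are random data, and the "reconstruction" is a placeholder array standing in for a decoder's output.

```python
import numpy as np

def random_masking(num_patches, mask_ratio, rng):
    """Randomly choose which patch indices stay visible and which are hidden."""
    num_visible = int(num_patches * (1 - mask_ratio))
    shuffled = rng.permutation(num_patches)
    visible = np.sort(shuffled[:num_visible])
    masked = np.sort(shuffled[num_visible:])
    return visible, masked

def masked_reconstruction_loss(predicted, target, masked):
    """Mean squared error computed only on the hidden patches."""
    return float(np.mean((predicted[masked] - target[masked]) ** 2))

rng = np.random.default_rng(0)
patches = rng.random((196, 768))            # stand-in for flattened image patches
visible, masked = random_masking(len(patches), 0.75, rng)

encoder_input = patches[visible]            # the encoder only sees visible patches
reconstruction = np.zeros_like(patches)     # placeholder for a decoder's output
loss = masked_reconstruction_loss(reconstruction, patches, masked)

print(len(visible), len(masked))  # 49 147
```

Because the encoder processes only a quarter of the patches, pre-training is considerably cheaper than running the full sequence through the model.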

Example: Zero-Shot Classification with CLIP

Imagine you have a dataset of images of various bird species, but you don't have labels for all of them. With CLIP, you can perform zero-shot classification:

```python
import requests
import torch
from PIL import Image
from transformers import CLIPModel, CLIPProcessor

# Load pre-trained CLIP model and processor
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

# Example image (replace with your own image path or URL)
url = "http://images.cocodataset.org/val2017/000000039769.jpg"  # Example: an image of cats
image = Image.open(requests.get(url, stream=True).raw)

# Candidate labels for classification
candidate_labels = ["a photo of a cat", "a photo of a dog", "a photo of a bird", "a photo of a car"]

# Process inputs
inputs = processor(text=candidate_labels, images=image, return_tensors="pt", padding=True)

# Get model outputs (no gradients are needed for inference)
with torch.no_grad():
    outputs = model(**inputs)

logits_per_image = outputs.logits_per_image  # image-text similarity scores
probs = logits_per_image.softmax(dim=1)  # convert to probabilities over the candidate labels

# Print results
for i, label in enumerate(candidate_labels):
    print(f"'{label}': {probs[0][i].item():.4f}")

# The highest probability should go to "a photo of a cat"
```

This demonstrates how CLIP can classify an image into categories it wasn't explicitly trained on, simply by understanding the semantic relationship between the image and the text descriptions.
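
The example above uses an already trained model. The contrastive objective that produces such aligned embeddings can be sketched roughly as below. This is an illustrative NumPy version under stated assumptions: the embeddings are random stand-ins for encoder outputs, and the temperature is fixed at 0.07, whereas CLIP learns it during training.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def log_softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def clip_style_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over the image-text similarity matrix."""
    image_emb, text_emb = l2_normalize(image_emb), l2_normalize(text_emb)
    logits = image_emb @ text_emb.T / temperature  # scaled cosine similarities
    diag = np.arange(len(logits))                  # image i is paired with text i
    loss_images = -log_softmax(logits, axis=1)[diag, diag].mean()  # image -> text
    loss_texts = -log_softmax(logits, axis=0)[diag, diag].mean()   # text -> image
    return float((loss_images + loss_texts) / 2)

rng = np.random.default_rng(0)
image_emb = rng.normal(size=(8, 32))                        # stand-in image embeddings
aligned_text = image_emb + 0.1 * rng.normal(size=(8, 32))   # texts that match their images
shuffled_text = np.roll(aligned_text, 1, axis=0)            # every text paired with the wrong image

aligned_loss = clip_style_loss(image_emb, aligned_text)
shuffled_loss = clip_style_loss(image_emb, shuffled_text)
print(aligned_loss < shuffled_loss)  # True
```

Minimizing this loss pulls matching image-text pairs together and pushes mismatched pairs apart, which is what makes the softmax over candidate labels in the zero-shot example meaningful.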

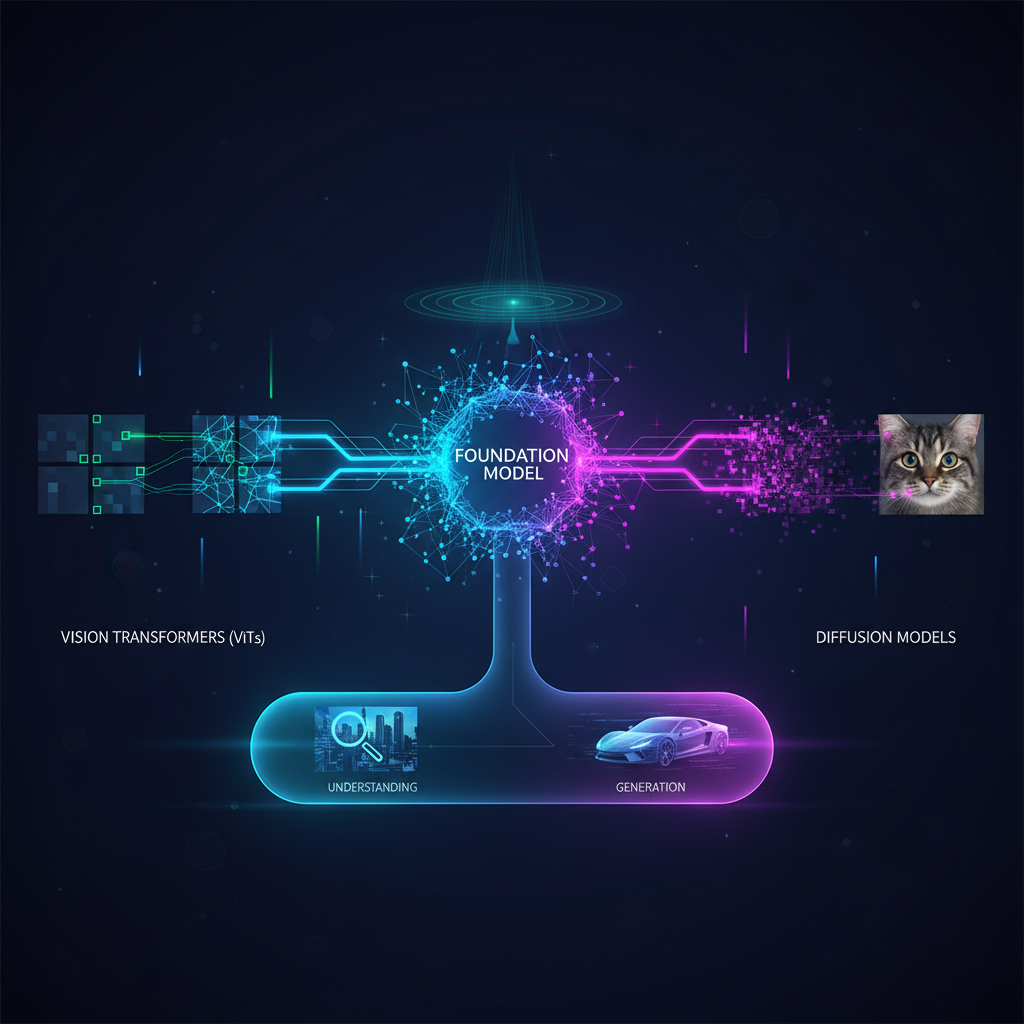

Diffusion Models: The Art of Generative AI

While ViTs excel at understanding and representation, Diffusion Models have become the leading approach to high-fidelity image generation. On many benchmarks they match or surpass Generative Adversarial Networks (GANs) in image quality and diversity, and they are easier to condition on text and other control signals.

Core Concept: Denoising to Creation

Diffusion models work by learning to reverse a gradual "noising" process. During training, noise is progressively added to an image until it becomes pure Gaussian noise. The model is then trained to predict and remove this noise at each step, effectively learning how to transform noise back into a coherent image. During inference, the model starts with random noise and iteratively denoises it, guided by learned patterns, until a new image is generated.
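
The noising and denoising steps can be sketched with NumPy in the style of DDPM. Several things here are assumptions for illustration: the linear noise schedule with 1,000 steps, the tiny 8×8 "image", and above all the `oracle_noise` function, a hypothetical stand-in for the trained network that cheats by knowing the original image. A real model has to learn that prediction from data.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (assumed values in the style of DDPM)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)  # fraction of signal variance left at each step
    return betas, alphas, alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Forward process: jump straight from the clean image to step t."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise
    return x_t, noise  # during training, the network learns to predict `noise`

def denoise_step(x_t, t, predicted_noise, betas, alphas, alpha_bars, rng):
    """Reverse process: remove the predicted noise for one step."""
    mean = (x_t - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * predicted_noise) / np.sqrt(alphas[t])
    if t == 0:
        return mean  # no fresh noise is added on the final step
    return mean + np.sqrt(betas[t]) * rng.standard_normal(x_t.shape)

rng = np.random.default_rng(0)
betas, alphas, alpha_bars = make_schedule()
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for a training image

def oracle_noise(x_t, t):
    # Hypothetical perfect predictor: recovers the noise exactly because it knows x0
    return (x_t - np.sqrt(alpha_bars[t]) * x0) / np.sqrt(1.0 - alpha_bars[t])

# Inference: start from pure noise and denoise step by step
x = rng.standard_normal(x0.shape)
for t in reversed(range(len(betas))):
    x = denoise_step(x, t, oracle_noise(x, t), betas, alphas, alpha_bars, rng)

print(np.allclose(x, x0))  # True: with a perfect predictor the loop recovers x0
```

With a learned predictor trained on many images, the same loop produces new samples from the data distribution instead of reproducing a single known image.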

Recent Developments: Unleashing Creative Potential

The past few years have seen an explosion of innovation in diffusion models, making them accessible and powerful tools:

- Text-to-Image Generation (Stable Diffusion, DALL-E 2, Midjourney): These models have captivated the world with their ability to generate photorealistic or artistic images from simple text prompts. They typically use a text encoder (often a CLIP-like model) to embed the prompt, and that embedding conditions the denoising process. Stable Diffusion runs this process in a compressed latent space rather than on raw pixels, which makes it much cheaper to run.

  - Stable Diffusion: Openly released and highly optimized, Stable Diffusion has democratized text-to-image generation, enabling countless applications and further research.

  - DALL-E 2 & Midjourney: Proprietary models known for their exceptional image quality and artistic flair.

- Image-to-Image Translation: Diffusion models are not just for generating from scratch. They excel at transforming existing images:

  - Inpainting: Filling in missing parts of an image seamlessly.

  - Outpainting: Extending an image beyond its original borders.

  - Style Transfer: Applying the artistic style of one image to the content of another.

  - Super-resolution: Enhancing the resolution and detail of low-resolution images.

- Control Mechanisms (ControlNet, T2I-Adapter): A key limitation of early text-to-image models was the lack of fine-grained control over the generated output. ControlNet and T2I-Adapter address this by allowing users to provide additional input conditions, such as:

  - Depth maps: Guide the 3D structure of the generated scene.

  - Edge maps (Canny): Preserve the outlines and shapes of objects.

  - Pose estimation (OpenPose): Control the pose of human figures.

  - Segmentation masks: Define specific regions for objects.

  These control mechanisms have transformed diffusion models from mere "prompt-and-generate" tools into powerful creative instruments for artists, designers, and architects.

- Video and 3D Generation: The frontier is rapidly expanding beyond 2D images.

  - Video Generation: Models like RunwayML's Gen-1/Gen-2 and Google's Imagen Video are extending diffusion to generate coherent and dynamic video sequences from text or existing video clips.

  - 3D Content Generation: Emerging research is using diffusion models to generate 3D assets, textures, or neural radiance fields (NeRFs) from text prompts or single 2D images, paving the way for automated 3D content creation.
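
To give a sense of why running diffusion in a latent space matters, here is a small back-of-the-envelope calculation. The downsampling factor of 8 and the 4 latent channels are the values used by the Stable Diffusion v1 autoencoder; other models may differ, so treat them as assumptions.

```python
def latent_shape(height, width, downsample=8, latent_channels=4):
    """Shape of the latent tensor for an image, assuming an SD v1-style autoencoder."""
    assert height % downsample == 0 and width % downsample == 0
    return (latent_channels, height // downsample, width // downsample)

pixel_values = 3 * 512 * 512             # RGB image at 512x512
channels, h, w = latent_shape(512, 512)
latent_values = channels * h * w

print((channels, h, w), pixel_values // latent_values)  # (4, 64, 64) 48
```

Each denoising step therefore operates on roughly 48 times fewer values than it would in pixel space, which is a large part of why such models can run on consumer GPUs.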

Example: Controlled Image Generation with Stable Diffusion and ControlNet

Imagine you want to generate an image of a person in a specific pose, holding a specific object, and in a particular style. ControlNet allows you to do this by providing a pose estimation image (e.g., from OpenPose) alongside your text prompt.

Prompt: "A woman sitting at a cafe, drinking coffee, realistic photo, cinematic lighting"

ControlNet Input: An OpenPose image depicting the desired pose (e.g., sitting at a table with a cup).

The diffusion model, guided by both the text prompt and the structural information from the ControlNet input, will generate an image that adheres to both conditions, giving much finer control over the output than a text prompt alone.
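
One way to try this is with the Hugging Face `diffusers` library. The sketch below is a starting point under several assumptions rather than a tested recipe: the helper name `generate_with_pose` is made up for this post, the two checkpoint IDs are examples that may change or require accepting a license, and `pose_image` is assumed to be a PIL image of an OpenPose skeleton that you have already produced. Running it downloads several gigabytes of weights and is only practical on a GPU.

```python
def generate_with_pose(
    prompt,
    pose_image,
    base_model="stable-diffusion-v1-5/stable-diffusion-v1-5",  # assumed checkpoint ID
    controlnet_model="lllyasviel/sd-controlnet-openpose",      # assumed checkpoint ID
    steps=30,
    seed=0,
):
    """Generate an image that follows both a text prompt and an OpenPose skeleton."""
    # Imported lazily so that defining the function does not require the heavy dependencies
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    device = "cuda" if torch.cuda.is_available() else "cpu"
    dtype = torch.float16 if device == "cuda" else torch.float32

    # The ControlNet carries the pose conditioning; the base model does the generation
    controlnet = ControlNetModel.from_pretrained(controlnet_model, torch_dtype=dtype)
    pipe = StableDiffusionControlNetPipeline.from_pretrained(
        base_model, controlnet=controlnet, torch_dtype=dtype
    ).to(device)

    generator = torch.Generator(device=device).manual_seed(seed)  # reproducible sampling
    result = pipe(prompt, image=pose_image, num_inference_steps=steps, generator=generator)
    return result.images[0]

# Example usage (not executed here because it downloads model weights):
# from PIL import Image
# pose = Image.open("pose.png")  # hypothetical path to an OpenPose skeleton image
# image = generate_with_pose(
#     "A woman sitting at a cafe, drinking coffee, realistic photo, cinematic lighting",
#     pose,
# )
# image.save("cafe.png")
```

Swapping the ControlNet checkpoint for one trained on depth or Canny edges changes the kind of structural guidance without changing the rest of the code.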

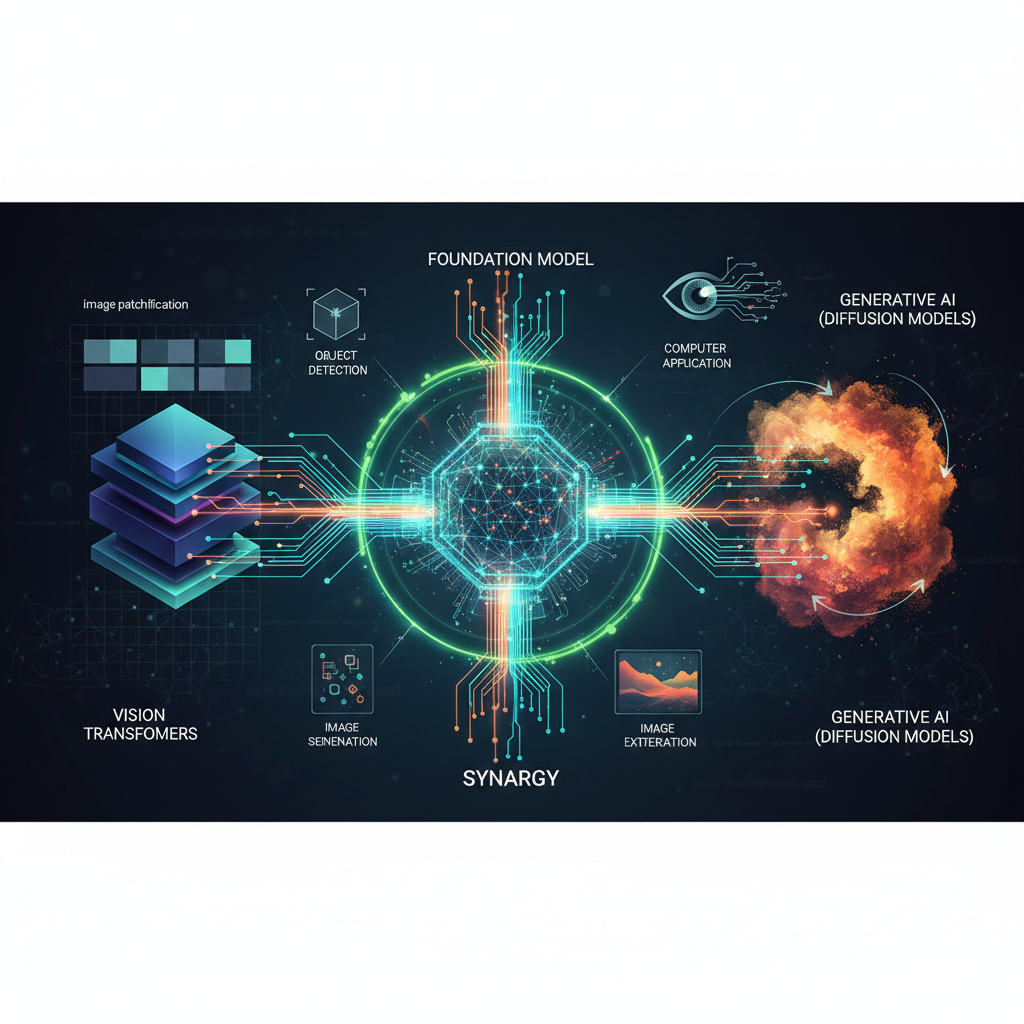

The Convergence: A Synergistic Partnership

The true power of foundation models in Computer Vision often lies in the convergence of ViTs and Diffusion Models. They are not isolated technologies but rather complementary forces that enhance each other's capabilities.

- ViTs Powering Diffusion: Many state-of-the-art diffusion models rely on encoders trained alongside ViTs to interpret the text prompts that guide image generation. CLIP's text encoder is the common example: it is itself a text Transformer rather than a ViT, but it is trained jointly with a ViT image encoder, so its embeddings are aligned with visual concepts. That alignment is what lets the diffusion model interpret complex instructions and generate semantically coherent images.

- Diffusion Models Enhancing ViTs: Diffusion models can generate vast amounts of synthetic data, which can then be used to augment training datasets for ViT-based understanding models. This is particularly valuable in data-scarce domains or for creating diverse scenarios that are difficult to capture in the real world.

- Multimodal Reasoning and Generation: The combination leads to powerful multimodal systems. Imagine a system where a ViT-based model (like LLaVA) understands an image and generates a detailed caption. This caption can then be fed into a diffusion model to generate variations of the image, or even to create new images based on the described scene.

- Promptable Everything: SAM, with its "promptable" interface, demonstrates a generalist approach to vision understanding. Similarly, ControlNet extends the "promptable" paradigm to generation, allowing users to guide the creative process with various input modalities. This shared philosophy of "prompting" (whether with text, points, or control maps) is a hallmark of foundation models.

Practical Applications and Insights for Practitioners

The implications of these foundation models are far-reaching, offering immediate and future benefits across industries:

- Accelerated Content Creation:

  - Marketing & Advertising: Rapidly generate diverse visual assets for campaigns, product mockups, and social media.

  - Gaming & Entertainment: Create concept art, textures, 3D models, and even character animations with unprecedented speed.

  - Design: Explore countless design iterations for products, architecture, and fashion, saving significant time and resources.

- Enhanced Data Augmentation & Synthesis:

  - Robotics: Generate synthetic training data for robot perception in dangerous or hard-to-access environments.

  - Autonomous Driving: Create diverse scenarios (weather, lighting, traffic conditions) to robustly train self-driving car models, addressing edge cases that are rare in real-world data.

  - Medical Imaging: Generate synthetic medical images to augment limited datasets for rare diseases, improving diagnostic model performance while respecting patient privacy.

- Zero-Shot & Few-Shot Learning:

  - Industrial Inspection: Deploy models for defect detection on new product lines with minimal labeled data, significantly reducing annotation costs and time-to-deployment.

  - Environmental Monitoring: Classify new species or detect novel environmental changes using pre-trained vision-language models without extensive re-training.

- Advanced Image Editing & Manipulation:

  - Photography & Videography: Professional-grade inpainting, outpainting, and style transfer for creative post-production.

  - E-commerce: Automatically generate product variations (e.g., different colors, textures) or place products in diverse lifestyle settings.

- Multimodal Search & Recommendation:

  - E-commerce: Allow users to search for products using both text descriptions and image examples (e.g., "find me a dress like this, but in blue").

  - Content Discovery: Recommend articles, videos, or products based on a deeper understanding of visual and textual context.

- Foundation for Robotics & Embodied AI:

  - Generalist vision models like SAM can provide robust, promptable perception capabilities for robots, allowing them to understand and interact with diverse environments without needing task-specific training for every object.
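
As an illustration of the multimodal search idea from the list above, here is a minimal retrieval sketch over precomputed embeddings. In practice the vectors would come from a vision-language model such as CLIP; here they are random stand-ins. The `compose_query` helper, which blends an image embedding with a text embedding by weighted averaging, is a simple heuristic assumed for this example and not how any particular product works.

```python
import numpy as np

def normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def compose_query(image_embedding, text_embedding, text_weight=0.5):
    """Blend 'like this image' with 'but matching this text' (a simple heuristic)."""
    mixed = (1 - text_weight) * normalize(image_embedding) + text_weight * normalize(text_embedding)
    return normalize(mixed)

def search(query_embedding, catalog_embeddings, top_k=3):
    """Rank catalog items by cosine similarity to the query."""
    scores = normalize(catalog_embeddings) @ normalize(query_embedding)
    order = np.argsort(-scores)[:top_k]
    return order, scores[order]

rng = np.random.default_rng(0)
catalog = rng.normal(size=(100, 64))                 # stand-in product image embeddings
query_image = catalog[5] + 0.1 * rng.normal(size=64) # a photo resembling product 5
query_text = rng.normal(size=64)                     # stand-in embedding for "in blue"

# Image-only search finds the visually closest product
order, scores = search(query_image, catalog)
print(order[0])  # 5

# Image-plus-text search ranks against the blended query instead
blended = compose_query(query_image, query_text)
blended_order, blended_scores = search(blended, catalog)
```

Because images and text share one embedding space, the same index serves text-only, image-only, and combined queries.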

Challenges and Future Directions

While immensely powerful, foundation models come with their own set of challenges and open questions:

- Computational Cost: Training these models from scratch requires immense computational resources, making it accessible only to well-funded organizations. This highlights the importance of open-source initiatives and efficient fine-tuning methods.

- Ethical Concerns:

  - Bias: Models trained on biased internet data can perpetuate and amplify societal biases, leading to unfair or harmful outputs.

  - Misinformation & Deepfakes: The ease of generating realistic images and videos raises concerns about the spread of misinformation and the creation of malicious deepfakes.

  - Intellectual Property: The use of copyrighted material in training data and the generation of content in the style of existing artists raise complex IP questions.

- Interpretability & Explainability: Understanding why these complex, black-box models make certain decisions or generate specific outputs remains a significant challenge.

- Evaluation Metrics: Developing robust and comprehensive metrics for evaluating the quality, diversity, controllability, and safety of generative models is an active research area.

- Deployment & Efficiency: Optimizing these large models for real-time inference on diverse hardware (especially edge devices) is crucial for widespread adoption.

- Beyond 2D Images: While progress in video and 3D generation is rapid, effectively extending these paradigms to truly understand and generate complex, interactive 3D worlds remains a grand challenge.

Conclusion

The convergence of Vision Transformers and Diffusion Models marks a pivotal moment in Computer Vision. We are moving from a world of specialized, isolated models to one of general-purpose, powerful foundation models capable of both sophisticated understanding and high-fidelity generation. This shift democratizes advanced AI capabilities, empowers creators, and accelerates innovation across countless domains.

As practitioners and enthusiasts, understanding these foundational architectures, their synergistic potential, and their associated challenges is paramount. The journey has just begun, and the future of Computer Vision, shaped by these incredible models, promises to be more intelligent, creative, and impactful than ever before.