Unlocking Enterprise Intelligence: The Power of Retrieval-Augmented Generation (RAG)

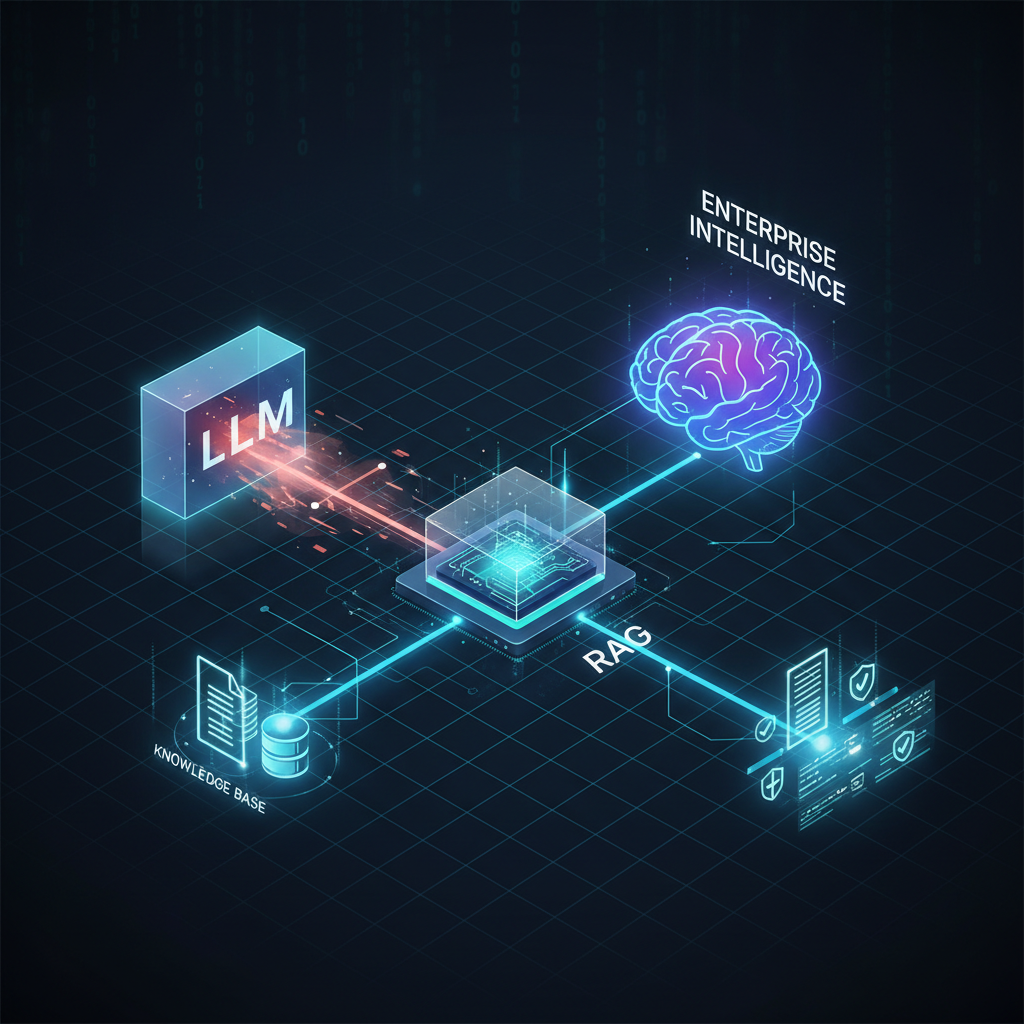

Discover how Retrieval-Augmented Generation (RAG) transforms Large Language Models (LLMs) from impressive curiosities into reliable, trustworthy, and indispensable tools for businesses. Learn why RAG is the leading solution for factual accuracy and verifiability in enterprise AI.

Large Language Models (LLMs) have taken the world by storm, demonstrating astonishing capabilities in understanding, generating, and summarizing human language. From crafting creative content to answering complex questions, their potential seems boundless. However, for all their prowess, LLMs face critical limitations when deployed in real-world, knowledge-intensive enterprise environments. They hallucinate, lack up-to-date information, struggle with proprietary data, and often cannot attribute their claims. This is where Retrieval-Augmented Generation (RAG) steps in, not just as a workaround, but as the leading practical solution to transform LLMs from impressive curiosities into reliable, trustworthy, and indispensable tools for businesses.

RAG is rapidly becoming the de facto standard for building robust AI applications that demand factual accuracy, verifiability, and access to dynamic, domain-specific knowledge. It marries the generative power of LLMs with the precision of information retrieval, creating a synergy that addresses the core challenges of deploying AI in critical business operations. This post takes a deep dive into RAG, covering its architecture, advanced techniques, evaluation strategies, and its impact on enterprise AI.

The Achilles' Heel of Standalone LLMs: Why RAG is Essential

Before we dissect RAG, let's understand the fundamental problems it solves:

- Hallucination: LLMs, by design, are probabilistic engines that predict the next most likely token. This can lead to them confidently generating factually incorrect or nonsensical information, a phenomenon known as "hallucination." In enterprise contexts, where accuracy is paramount (e.g., legal, medical, financial), hallucinations are unacceptable.

- Knowledge Cut-off: The knowledge base of a pre-trained LLM is static, frozen at the point of its last training data collection. This means it cannot access real-time information, recent events, or newly published research. For dynamic industries, this renders standalone LLMs quickly obsolete.

- Lack of Attribution: When an LLM provides an answer, it rarely cites its sources. This lack of transparency makes it difficult to verify the information and erodes trust, especially in regulated environments.

- Proprietary Data Blindness: LLMs are trained on vast public datasets. They have no inherent access to an organization's internal documents, private databases, customer records, or confidential reports. This is a critical barrier for enterprise adoption.

- Cost and Latency of Fine-tuning: While fine-tuning an LLM on proprietary data can imbue it with domain-specific knowledge, it's an expensive, time-consuming process that requires significant computational resources and a large, high-quality dataset. Furthermore, continuous fine-tuning for frequently updated knowledge is impractical.

RAG directly mitigates these issues by grounding the LLM's responses in verifiable, external knowledge, transforming it into a powerful, context-aware reasoning engine.

The Core Architecture of RAG: A Two-Phase Approach

At its heart, a RAG system operates in two main phases: the Indexing/Retrieval Pipeline and the Generation Pipeline.

Phase 1: The Indexing/Retrieval Pipeline

This phase is about preparing and organizing your external knowledge base so that it can be efficiently searched and retrieved.

Data Ingestion:

- What it is: The process of loading raw data from various sources.

- Examples: PDFs, Word documents, web pages, databases (SQL, NoSQL), internal wikis, Slack conversations, emails, CSV files, JSON objects, etc.

- Technical Detail: This often involves using specialized connectors or custom scripts to extract text and metadata from diverse formats.

Chunking:

- What it is: Breaking down large documents into smaller, manageable pieces or "chunks." This is crucial because LLMs have a limited "context window" (the amount of text they can process at once).

- Strategies:

- Fixed Size Chunking: Splitting text into chunks of a predetermined character or token count, often with some overlap to maintain context across chunks.

- Semantic Chunking: Attempting to split documents based on semantic boundaries (e.g., paragraphs, sections, topics) to ensure each chunk represents a coherent idea. This often involves using an LLM or a small model to identify these boundaries.

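- Recursive Chunking: Splits text using an ordered list of separators (for example paragraphs, then lines, then sentences, then words), moving to the next, finer separator only for pieces that are still larger than the target size. This keeps natural units such as paragraphs intact wherever they fit.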

- Importance: Effective chunking is paramount. Too large, and you exceed context windows or dilute relevance; too small, and you lose crucial context.
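To make the overlap idea concrete, here is a minimal sketch of fixed-size chunking in plain Python. The function name `chunk_fixed` and the default sizes are illustrative assumptions, and it counts characters rather than tokens; a production pipeline would more likely count tokens with the target model's tokenizer.

```python
def chunk_fixed(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character windows of `chunk_size` that overlap by `overlap`.

    Hypothetical helper for illustration; sizes are in characters, not tokens.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


print(chunk_fixed("abcdefghij", chunk_size=4, overlap=1))
# ['abcd', 'defg', 'ghij']
```

Each chunk repeats the last `overlap` characters of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk when the overlap is large enough.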

Embedding:

- What it is: Converting each text chunk into a numerical vector (an embedding). These embeddings capture the semantic meaning of the text, allowing similar chunks to have similar vector representations.

- Technical Detail: This is done using specialized embedding models (e.g., OpenAI's text-embedding-3-large, Cohere's embed-english-v3.0, or open-source models like bge-large-en-v1.5 or all-MiniLM-L6-v2). These models are trained to produce dense vector representations where the distance between vectors reflects the semantic similarity of the underlying text.

Vector Database (Vector Store):

- What it is: A specialized database designed to efficiently store and query these high-dimensional vectors.

- Examples: Pinecone, Weaviate, Qdrant, Chroma, Milvus, Faiss (library, not a full database), Elasticsearch (with vector capabilities).

- Function: It allows for rapid "nearest neighbor" searches, meaning given a query embedding, it can quickly find the most semantically similar chunk embeddings. Along with the embeddings, the original text chunks and any relevant metadata are also stored.

Phase 2: The Generation Pipeline

This phase is where the user's query is processed, relevant information is retrieved, and the LLM synthesizes an answer.

- User Query: The user asks a question or provides a prompt.

- Query Embedding: The user's query is also converted into a vector embedding using the same embedding model used for the document chunks.

- Vector Search: The query embedding is used to perform a similarity search in the vector database. The database returns the top-$k$ most semantically similar chunks (i.e., the most relevant pieces of information) from the indexed knowledge base.

- Context Augmentation: The retrieved chunks are then injected into the LLM's prompt, along with the original user query. This forms a "grounded" prompt, providing the LLM with specific, factual context.

- Example Prompt Structure:

    You are an AI assistant tasked with answering questions based on the provided context. If the answer is not in the context, state that you don't know.

    Context:
    [Retrieved Chunk 1 Text]
    [Retrieved Chunk 2 Text]
    [Retrieved Chunk 3 Text]
    ...

    Question: [User's Original Query]

    Answer:

- LLM Generation: The LLM processes this augmented prompt and generates an answer, synthesizing information from the provided context to address the user's query. Because it's grounded in retrieved facts, the likelihood of hallucination is significantly reduced, and the answer can often be traced back to its source.
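The generation pipeline above reduces to a small amount of glue code. The sketch below assumes two caller-supplied functions, `retrieve(question, k)` returning a list of chunk strings and `generate(prompt)` wrapping whatever LLM API is in use; both names are placeholders, and numbering the chunks is an added convention to make source attribution easier.

```python
def build_grounded_prompt(question: str, chunks: list[str]) -> str:
    """Assemble the augmented prompt from the retrieved chunks and the user query."""
    # Number each chunk so the model (and the reader) can refer back to sources.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "You are an AI assistant tasked with answering questions based on the "
        "provided context. If the answer is not in the context, state that you "
        "don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def answer(question: str, retrieve, generate, k: int = 3) -> str:
    """Retrieve top-k chunks, build the grounded prompt, and call the LLM."""
    chunks = retrieve(question, k)  # query embedding + vector search happen in here
    return generate(build_grounded_prompt(question, chunks))
```

Keeping prompt construction in its own function makes it easy to log exactly what context the model saw, which helps when debugging a wrong answer.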

Advanced RAG Strategies: Beyond the Basics

While the core RAG architecture is powerful, the field is rapidly innovating with sophisticated techniques to enhance retrieval accuracy, context quality, and generation relevance.

Hybrid Search:

- Problem: Pure vector search excels at semantic similarity but can sometimes miss exact keyword matches or specific entities. Keyword search (like BM25) is good for exact matches but lacks semantic understanding.

- Solution: Combine both! Perform a vector search and a keyword search, then merge and re-rank the results. This leverages the strengths of both approaches for improved recall.
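One common way to merge the two result lists is Reciprocal Rank Fusion (RRF), which needs only the rank positions and so sidesteps the problem that BM25 scores and cosine similarities live on different scales. The text above does not prescribe a merging method, so treat RRF as one reasonable choice; the constant `k=60` is the conventional default, and the document IDs are made up.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of doc IDs into one list, best first.

    Each document scores sum(1 / (k + rank)) over the lists it appears in.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


vector_hits = ["a", "b", "c"]   # from semantic (vector) search
keyword_hits = ["c", "a", "d"]  # from keyword search such as BM25
print(reciprocal_rank_fusion([vector_hits, keyword_hits]))
# ['a', 'c', 'b', 'd']
```

Documents that appear in both lists ("a" and "c") rise above those found by only one retriever, which is the behavior hybrid search is after.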

Re-ranking:

- Problem: The initial vector search might return many relevant documents, but some might be more pertinent than others, or some might contain redundant information.

- Solution: After an initial retrieval (e.g., top 50 chunks), use a dedicated "re-ranker" model (often a cross-encoder) to re-score and re-order these documents. A cross-encoder reads the query and each retrieved document together as a pair, which yields a finer-grained relevance score than comparing independently computed embeddings. It is too slow to run over the whole corpus, so it is applied only to the shortlist, leading to higher precision in the final top-$k$ selection.
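The two-stage shape of re-ranking can be sketched without any model at all. In the snippet below, `token_overlap_score` is a deliberately crude stand-in for a cross-encoder (it only measures word overlap), and both function names are hypothetical; the point is the structure, where every (query, candidate) pair is scored jointly and only the best `top_k` survive.

```python
import re


def token_overlap_score(query: str, passage: str) -> float:
    """Toy pair scorer (Jaccard word overlap). Stands in for a cross-encoder."""
    q = set(re.findall(r"\w+", query.lower()))
    p = set(re.findall(r"\w+", passage.lower()))
    union = q | p
    return len(q & p) / len(union) if union else 0.0


def rerank(query: str, candidates: list[str], score_pair=token_overlap_score, top_k: int = 3) -> list[str]:
    """Re-score first-stage candidates pairwise and keep the top_k, best first."""
    scored = [(score_pair(query, passage), passage) for passage in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [passage for _, passage in scored[:top_k]]
```

Swapping in a real model only requires passing a different `score_pair` callable; the retrieval code around it stays unchanged.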

Query Expansion/Rewriting:

- Problem: Users might ask ambiguous, short, or poorly formulated queries that don't lead to optimal retrieval.

- Solution: Use an LLM to reformulate the user's query into multiple, semantically richer versions. For example, if a user asks "Tell me about the new policy," the LLM might expand it to "What are the details of the company's updated leave policy?" or "Summarize the changes in the latest HR policy document." These expanded queries are then used for retrieval, increasing the chances of finding relevant documents. Techniques like "HyDE" (Hypothetical Document Embeddings) generate a hypothetical answer first, then embed that answer to retrieve documents.
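A minimal sketch of multi-query retrieval follows. It assumes two caller-supplied functions that are not part of any particular library: `rewrite(question)` returns a list of LLM-generated reformulations, and `retrieve(query, k)` returns a ranked list of document IDs. Results from all query variants are merged in first-seen order with duplicates dropped.

```python
def multi_query_retrieve(question: str, rewrite, retrieve, k: int = 3) -> list[str]:
    """Retrieve with the original question plus its rewrites, de-duplicating results."""
    merged: list[str] = []
    seen: set[str] = set()
    for query in [question, *rewrite(question)]:
        for doc_id in retrieve(query, k):
            if doc_id not in seen:  # keep only the first occurrence of each document
                seen.add(doc_id)
                merged.append(doc_id)
    return merged
```

A HyDE-style variant fits the same shape: `rewrite` would return a single hypothetical answer passage instead of alternative questions, and that passage is what gets embedded for retrieval.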

Multi-hop Retrieval:

- Problem: Some complex questions require synthesizing information from multiple, indirectly related documents or involve a chain of reasoning. A single search might not suffice.

- Solution: Implement an iterative retrieval process. The initial query retrieves some documents. An LLM then analyzes these documents and might formulate a follow-up query based on the information found, leading to another retrieval step. This continues until the LLM determines it has enough information to answer the original complex question.
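The iterative process can be sketched as a bounded loop. Both callables are assumptions for illustration: `retrieve(query)` returns a list of chunks, and `next_step(question, evidence)` wraps an LLM call that returns either `("answer", text)` when the evidence suffices or `("search", follow_up_query)` when another hop is needed. The `max_hops` cap prevents the loop from running indefinitely.

```python
def multi_hop_answer(question: str, retrieve, next_step, max_hops: int = 3):
    """Alternate retrieval and reasoning until an answer is produced or hops run out.

    Returns (answer_or_None, accumulated_evidence).
    """
    evidence: list[str] = []
    query = question
    for _ in range(max_hops):
        for chunk in retrieve(query):
            if chunk not in evidence:  # accumulate evidence across hops, no duplicates
                evidence.append(chunk)
        action, payload = next_step(question, evidence)
        if action == "answer":
            return payload, evidence
        query = payload  # payload is the follow-up query for the next hop
    return None, evidence  # hop budget exhausted without a confident answer
```

Returning the accumulated evidence alongside the answer keeps the result attributable, since the caller can show which chunks from which hop supported it.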

Graph-based RAG:

- Problem: Pure text-based retrieval can struggle with structured relationships between entities or complex factual queries that benefit from relational understanding.

- Solution: Integrate knowledge graphs. Instead of just embedding text chunks, entities and their relationships are represented in a graph. Retrieval can then involve traversing the graph to find related facts, which are then used to augment the LLM's context. This is particularly powerful for questions like "Who manages project X and what are their key responsibilities?" where project X, managers, and responsibilities are distinct entities and relationships.
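As a rough illustration of graph traversal for retrieval, the sketch below stores a knowledge graph as (subject, relation, object) triples and collects every fact reachable within a few hops of a starting entity. The triples, names, and the `graph_facts` helper are all invented for the example; a real deployment would more likely query a graph database.

```python
from collections import deque

# Hypothetical triples: (subject, relation, object)
TRIPLES = [
    ("Project X", "managed_by", "Dana"),
    ("Dana", "responsible_for", "budget approval"),
    ("Dana", "responsible_for", "release planning"),
    ("Project Y", "managed_by", "Lee"),
]


def graph_facts(triples, start: str, max_hops: int = 2) -> list[str]:
    """Breadth-first walk from `start`, returning facts within `max_hops` edges."""
    adjacency: dict[str, list[tuple[str, str]]] = {}
    for subject, relation, obj in triples:
        adjacency.setdefault(subject, []).append((relation, obj))

    facts: list[str] = []
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, obj in adjacency.get(node, []):
            facts.append(f"{node} {relation} {obj}")
            if obj not in seen:
                seen.add(obj)
                queue.append((obj, depth + 1))
    return facts


print(graph_facts(TRIPLES, "Project X"))
# ['Project X managed_by Dana', 'Dana responsible_for budget approval',
#  'Dana responsible_for release planning']
```

The returned fact strings can be placed in the prompt context just like text chunks, which is how a question about Project X's manager and that manager's responsibilities gets both hops of information in one retrieval.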

Context Optimization: Making Every Token Count

LLM context windows, while growing, are still finite. Optimizing the context passed to the LLM is crucial for performance and cost-efficiency.

Summarization/Condensing:

- Strategy: Before passing retrieved documents to the main LLM, use a smaller, faster LLM (or even the same one with a specific prompt) to summarize or extract key information from each retrieved chunk. This reduces the token count while retaining essential facts.

Adaptive Context Length:

- Strategy: Instead of always sending a fixed number of chunks, dynamically adjust the amount of context based on the query complexity or initial retrieval confidence. If the initial retrieved documents are highly relevant and sufficient, send fewer; if the query is complex or retrieval is uncertain, send more (up to the context window limit).
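One simple way to implement this is to pick the number of chunks from the top retrieval score and then enforce a token budget. The thresholds, parameter names, and the whitespace-based token count below are all illustrative assumptions; real systems would tune the cutoffs on evaluation data and count tokens with the model's own tokenizer.

```python
def select_context(
    scored_chunks: list[tuple[float, str]],
    confident_score: float = 0.8,
    k_confident: int = 2,
    k_uncertain: int = 6,
    token_budget: int = 1000,
) -> list[str]:
    """Send fewer chunks when retrieval looks confident, more when it does not."""
    ranked = sorted(scored_chunks, key=lambda c: c[0], reverse=True)
    if not ranked:
        return []

    # A high top score suggests the best chunks already suffice.
    k = k_confident if ranked[0][0] >= confident_score else k_uncertain

    selected, used = [], 0
    for _score, text in ranked[:k]:
        cost = len(text.split())  # crude token estimate (assumption)
        if used + cost > token_budget:
            break  # stay within the context budget
        selected.append(text)
        used += cost
    return selected
```

For example, with scores of 0.9, 0.7, 0.6 and 0.5 only the two best chunks are sent, while a result set topping out at 0.6 is treated as uncertain and passes along up to six.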

Prompt Engineering for Context:

- Strategy: Carefully design the prompt to guide the LLM on how to use the provided context. Emphasize using only the context, stating when information is not found, and prioritizing factual accuracy.

Evaluating RAG Systems: Measuring Success

Robust evaluation is critical for iterating and improving RAG systems. This involves assessing both the retrieval quality and the generation quality.

Retrieval Metrics: How good is our search?

These metrics assess how well the system finds relevant documents.

- Recall: The proportion of all relevant documents that were successfully retrieved. High recall means we're not missing important information.

- Precision: The proportion of retrieved documents that are actually relevant. High precision means we're not cluttering the context with irrelevant noise.

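- Mean Reciprocal Rank (MRR): Measures how early the first relevant result appears. For each query, the reciprocal rank is 1 if the first relevant item is at rank 1, 0.5 if it is at rank 2, and so on; MRR is the average of these values across all evaluation queries.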

- Normalized Discounted Cumulative Gain (NDCG): A more sophisticated metric that considers the relevance of items and their position in the ranked list, giving higher weight to highly relevant items appearing early.
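The four metrics above are short enough to write out directly. This sketch uses the standard textbook definitions, with the function names chosen here for illustration; it assumes each retrieved list contains unique document IDs, and NDCG uses the common `gain / log2(rank + 1)` discount with graded relevance supplied as a dict.

```python
import math


def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of the top-k results that are relevant."""
    top = retrieved[:k]
    return sum(1 for d in top if d in relevant) / len(top) if top else 0.0


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of all relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    return sum(1 for d in retrieved[:k] if d in relevant) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    """1 / rank of the first relevant result, or 0 if none was retrieved."""
    for rank, d in enumerate(retrieved, start=1):
        if d in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    """Average reciprocal rank over (retrieved, relevant) pairs, one per query."""
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs) if runs else 0.0


def ndcg_at_k(retrieved: list[str], gains: dict[str, float], k: int) -> float:
    """DCG of the actual ranking divided by the DCG of the ideal ranking."""
    dcg = sum(
        gains.get(d, 0.0) / math.log2(i + 1)
        for i, d in enumerate(retrieved[:k], start=1)
    )
    ideal = sorted(gains.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(i + 1) for i, g in enumerate(ideal, start=1))
    return dcg / idcg if idcg else 0.0
```

With `retrieved = ["d1", "d2", "d3", "d4"]` and `relevant = {"d2", "d4", "d9"}`, precision at 2 is 0.5, recall at 4 is 2/3, and the reciprocal rank is 0.5 because the first relevant document sits at rank 2.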

Generation Metrics: How good is the LLM's answer?

These metrics assess the quality of the LLM's output, often requiring human or LLM-based judgment.

- Faithfulness/Factuality: Is the answer consistent with and supported by the retrieved context? This is paramount for RAG. Often evaluated by LLMs prompted to compare the answer against the context.

- Answer Relevance: Is the answer directly addressing the user's query?

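- Context Relevance: Is the retrieved context actually relevant to the user's query? (This helps separate retrieval failures from generation failures: a poor answer built on irrelevant context points to the retriever, while a poor answer despite relevant context points to the prompt or the model.)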

- Groundedness/Attribution: Can specific statements in the answer be traced back to specific sentences or paragraphs in the retrieved sources? This is crucial for building trust and enabling verification.

Tools like Ragas, LlamaIndex's evaluation modules, and custom LLM-as-a-judge frameworks are emerging to automate or semi-automate these evaluations.

Tooling and Frameworks: Building RAG Systems

The RAG ecosystem is supported by a rich set of open-source libraries and commercial services.

Orchestration Frameworks:

- LangChain: A popular framework for developing LLM applications, offering extensive modules for RAG, agents, and chains.

- LlamaIndex: Specifically designed for data ingestion, indexing, and querying private or domain-specific data with LLMs, making it a powerful RAG-focused framework.

- Haystack: An end-to-end framework for building NLP applications, including robust RAG pipelines.

Vector Databases:

- Managed/Cloud-Native: Pinecone (fully managed); Weaviate, Qdrant, and Milvus (open source, also offered as managed cloud services).

- Open-Source/Embedded: ChromaDB, Faiss, LanceDB.

- Hybrid: Elasticsearch (with vector search capabilities), pgvector (PostgreSQL extension).

Embedding Models:

- Commercial APIs: OpenAI Embeddings, Cohere Embeddings.

- Open-Source: Hugging Face Transformers (e.g., the sentence-transformers library), BGE (BAAI General Embedding) models.

Emerging Trends within RAG: The Future is Dynamic

RAG is not a static solution; it's a vibrant area of continuous innovation.

- Self-RAG and Adaptive RAG: These approaches empower the LLM to not only retrieve but also to reflect on the quality of its retrieval and generation. The LLM can learn to critique its own outputs, decide if more retrieval is needed, or even reformulate the query based on initial results.

- CRAG (Corrective RAG): An evolution where the LLM actively assesses the quality of retrieved documents. If the retrieved context is deemed insufficient or contradictory, the LLM can trigger further retrieval, reformulate the query, or even decide to abstain from answering.

- RAG for Multi-Modal Data: Extending RAG beyond text to incorporate images, audio, and video. Imagine querying an LLM about a product, and it retrieves not just text descriptions but also relevant product images or video tutorials.

- Optimizing RAG for Real-time Data: Integrating RAG with streaming data sources (e.g., Kafka, Flink) to ensure LLMs always have access to the absolute latest information, crucial for financial news, sensor data, or live customer interactions.

- RAG for Agentic Workflows: Combining RAG with autonomous AI agents. An agent can use RAG as a tool to gather information, then use other tools (e.g., calculators, APIs) to process that information, and finally use an LLM to synthesize a comprehensive response or execute a task.

- Fine-tuning Small Language Models (SLMs) for RAG Components: Instead of relying solely on large, general-purpose models, SLMs are being fine-tuned for specific RAG tasks like re-ranking, query rewriting, or context summarization. This can lead to more efficient, cost-effective, and specialized RAG pipelines.

Practical Applications: RAG in the Enterprise

The practical value of RAG for businesses is immense, enabling a new generation of intelligent applications:

Internal Knowledge Bases & Employee Support:

- Use Case: Employees need quick answers to HR policies, IT troubleshooting, project documentation, or company best practices.

- RAG Solution: An internal RAG system can query vast repositories of company documents, providing instant, accurate, and attributed answers, significantly reducing support tickets and improving productivity.

Customer Support & Chatbots:

- Use Case: Customers seek help with product features, order status, or troubleshooting.

- RAG Solution: Chatbots powered by RAG can access FAQs, product manuals, customer history, and real-time inventory data to provide personalized and accurate support, improving customer satisfaction and reducing agent workload.

Legal Tech:

- Use Case: Lawyers need to research case law, review contracts, or analyze legal documents for specific clauses.

- RAG Solution: RAG systems can quickly retrieve relevant precedents, identify conflicting clauses in contracts, or summarize key points from lengthy legal texts, dramatically accelerating research and due diligence.

Medical/Healthcare:

- Use Case: Clinicians require up-to-date medical guidelines, patient records, drug interactions, or research papers.

- RAG Solution: A RAG system can provide rapid access to the latest medical literature, synthesize patient data for diagnosis support, or ensure adherence to clinical protocols, enhancing decision-making and patient care.

Financial Services:

- Use Case: Analysts need to process market reports, regulatory documents, company filings, or financial news.

- RAG Solution: RAG can extract key insights from vast amounts of financial text, summarize complex reports, or monitor regulatory changes, empowering faster and more informed financial decisions.

Personalized Learning & Education:

- Use Case: Students or professionals need tailored learning content or answers to specific questions within a subject area.

- RAG Solution: Educational platforms can use RAG to draw from vast academic databases, textbooks, and research papers to provide personalized explanations, examples, and study materials, adapting to individual learning needs.

Conclusion: The Cornerstone of Trustworthy Enterprise AI

Retrieval-Augmented Generation has emerged as one of the most important practical advances for deploying LLMs in real-world, enterprise-grade applications. It directly confronts and mitigates the inherent limitations of standalone LLMs (hallucination, knowledge cut-off, lack of attribution, and no access to proprietary data) by grounding their powerful generative capabilities in verifiable, external knowledge.

For AI practitioners and enthusiasts alike, understanding and mastering RAG is no longer optional; it's a fundamental requirement for building robust, reliable, and trustworthy AI systems. As the field continues to evolve with advanced retrieval techniques, context optimization, and sophisticated evaluation methods, RAG promises to unlock unprecedented levels of intelligence and efficiency across every sector. The future of enterprise AI is not just about powerful LLMs, but about LLMs made intelligent and accountable through the strategic application of RAG.