Vision Transformers (ViTs): The Revolution Reshaping Computer Vision

Explore how Vision Transformers (ViTs), which adapt the Transformer architecture from NLP to images, challenge the long-standing dominance of Convolutional Neural Networks (CNNs) in computer vision, and how this architecture is opening new avenues for innovation.

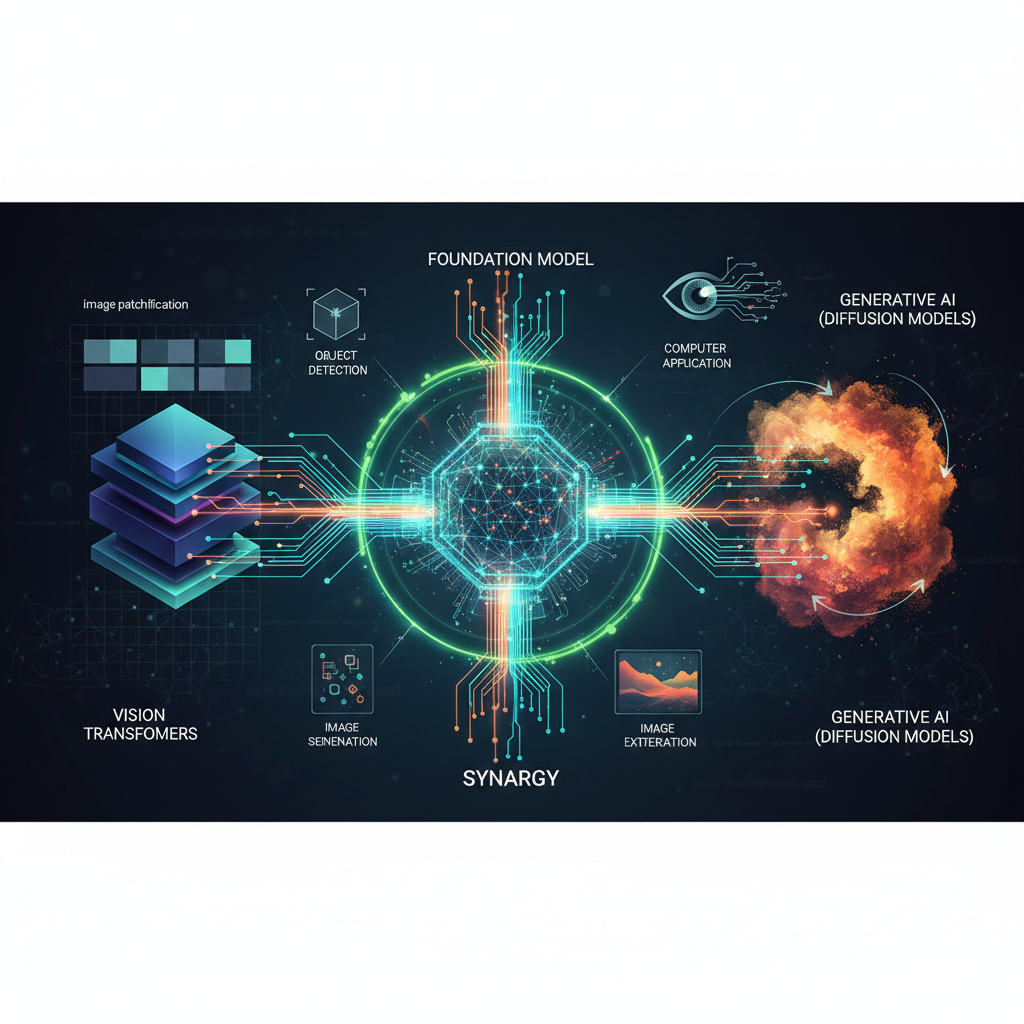

The landscape of artificial intelligence is in constant flux, with breakthroughs emerging at a rapid pace. For most of the 2010s, Convolutional Neural Networks (CNNs) reigned supreme in computer vision, their hierarchical feature extraction and inductive biases well suited to image understanding. Then a paradigm shift occurred. An architecture born in natural language processing (NLP), the Transformer, ventured into the visual domain with striking results. This marked the advent of Vision Transformers (ViTs), whose subsequent evolution has not only challenged the dominance of CNNs but also opened up new avenues for AI innovation.

The Seismic Shift: From Convolutions to Attention

Before ViTs, the idea of applying a Transformer, a model designed to process sequences of words, to images seemed counterintuitive. CNNs inherently understand the local nature of pixels, the importance of spatial hierarchies, and the concept of translation equivariance. Transformers, on the other hand, operate on sequences, capturing long-range dependencies through self-attention mechanisms. The genius of the original Vision Transformer (ViT) paper in 2020 was to bridge this gap.

The Core Idea: Patching Up Images for Attention

The fundamental insight of ViT was to treat an image not as a 2D grid of pixels, but as a sequence of flattened 2D patches. Imagine taking an image and dividing it into a grid of smaller, equally sized squares – say, 16x16 pixels. Each of these squares is a "patch."
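To make the patch arithmetic concrete, here is a minimal sketch in PyTorch. The function name `patchify` and the 224x224 input size are assumptions chosen for illustration; the 16x16 patch size is the one mentioned above.

```python
import torch


def patchify(images: torch.Tensor, patch_size: int = 16) -> torch.Tensor:
    """Split (B, C, H, W) images into flattened, non-overlapping patches.

    Returns a tensor of shape (B, num_patches, C * patch_size * patch_size).
    """
    b, c, h, w = images.shape
    assert h % patch_size == 0 and w % patch_size == 0, "image must tile evenly"
    gh, gw = h // patch_size, w // patch_size
    # (B, C, gh, p, gw, p) -> (B, gh, gw, C, p, p) -> (B, gh * gw, C * p * p)
    x = images.reshape(b, c, gh, patch_size, gw, patch_size)
    x = x.permute(0, 2, 4, 1, 3, 5)
    return x.reshape(b, gh * gw, c * patch_size * patch_size)


images = torch.randn(2, 3, 224, 224)   # hypothetical batch of two RGB images
patches = patchify(images)
print(patches.shape)  # torch.Size([2, 196, 768])
```

A 224x224 image tiles into a 14x14 grid, so the "sentence" the Transformer sees is 196 tokens long, each holding 16 * 16 * 3 = 768 raw pixel values.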

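- Patching and Linear Embedding: The image is divided into non-overlapping patches. Each patch is then flattened into a 1D vector. These vectors are then projected into a D-dimensional embedding space using a single linear layer shared across all patches. The projection does not have to increase dimensionality: a 16x16 RGB patch already holds 768 values, the same as the embedding width of ViT-Base. This process effectively converts each patch into a "token," similar to how words are tokenized in NLP.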

- Positional Embeddings: Unlike CNNs, Transformers don't inherently understand the spatial arrangement of these patches. To reintroduce this crucial information, positional embeddings are added to the patch embeddings. These learnable embeddings encode the original position of each patch within the image.

- Transformer Encoder: The sequence of embedded patches (along with a special [CLS] token, similar to BERT, used for classification) is then fed into a standard Transformer encoder. This encoder consists of multiple layers, each containing a multi-head self-attention (MHSA) module and a feed-forward network (FFN).

- Self-Attention: This is the heart of the Transformer. It allows each patch token to weigh the importance of every other patch token in the sequence. This means a patch in the top-left corner can directly "attend" to a patch in the bottom-right corner, capturing global relationships across the entire image, a stark contrast to CNNs' local receptive fields.

- Feed-Forward Networks: After attention, each token's representation is independently processed by a simple neural network.

- Classification Head: Finally, the output corresponding to the [CLS] token from the Transformer encoder is passed through a multi-layer perceptron (MLP) head for classification.
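The steps above can be put together in a few dozen lines. The following is a rough sketch rather than the reference implementation: the class name `MinimalViT` and all default sizes are hypothetical, the patch embedding uses a strided convolution (a common equivalent of flatten-then-project), and a single linear layer stands in for the MLP head.

```python
import torch
import torch.nn as nn


class MinimalViT(nn.Module):
    """Illustrative ViT-style classifier; every size here is an assumption."""

    def __init__(self, image_size=32, patch_size=8, embed_dim=64,
                 depth=2, num_heads=4, num_classes=10):
        super().__init__()
        num_patches = (image_size // patch_size) ** 2
        # A convolution with kernel == stride == patch size is equivalent to
        # flattening each patch and applying one shared linear projection.
        self.patch_embed = nn.Conv2d(3, embed_dim, kernel_size=patch_size,
                                     stride=patch_size)
        self.cls_token = nn.Parameter(torch.zeros(1, 1, embed_dim))
        # Learnable positional embeddings, one per token (+1 for [CLS]).
        self.pos_embed = nn.Parameter(
            torch.randn(1, num_patches + 1, embed_dim) * 0.02)
        # Each layer holds multi-head self-attention plus a feed-forward net.
        self.layers = nn.ModuleList([
            nn.TransformerEncoderLayer(
                d_model=embed_dim, nhead=num_heads,
                dim_feedforward=4 * embed_dim, activation="gelu",
                batch_first=True, norm_first=True)
            for _ in range(depth)
        ])
        self.norm = nn.LayerNorm(embed_dim)
        self.head = nn.Linear(embed_dim, num_classes)  # stand-in for the MLP head

    def forward(self, images):
        x = self.patch_embed(images)           # (B, D, H/p, W/p)
        x = x.flatten(2).transpose(1, 2)       # (B, N, D) patch tokens
        cls = self.cls_token.expand(x.shape[0], -1, -1)
        x = torch.cat([cls, x], dim=1) + self.pos_embed
        for layer in self.layers:
            x = layer(x)
        return self.head(self.norm(x[:, 0]))   # classify from the [CLS] output


model = MinimalViT()
logits = model(torch.randn(2, 3, 32, 32))
print(logits.shape)  # torch.Size([2, 10])
```

Note that nothing in the encoder knows the tokens came from a 2D grid; the only spatial information is whatever the positional embeddings learn.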

Why this was a big deal: ViTs demonstrated that with sufficient data, they could match or even surpass state-of-the-art CNNs on image classification tasks. This proved that the inductive biases of CNNs (locality, translation equivariance) were not strictly necessary if enough data was available for the model to learn them.

Initial Challenge: The original ViT models were data-hungry. They required pre-training on massive datasets like JFT-300M (300 million images) to outperform CNNs on ImageNet-1K. This was due to their lack of built-in inductive biases, meaning they had to learn properties such as locality from the data itself.

The Evolution: Making ViTs Practical and Efficient

The initial success of ViTs spurred a flurry of research aimed at addressing their limitations, particularly their data hunger and quadratic computational complexity with respect to image size (due to global self-attention). This led to a rich ecosystem of ViT variants, each pushing the boundaries of efficiency and generalization.
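The quadratic cost is easy to see in a bare-bones version of self-attention. The sketch below is single-head and omits the output projection; the weight matrices and the helper `num_tokens` are hypothetical names used only for illustration.

```python
import math
import torch


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over tokens x of shape (B, N, D)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.transpose(-2, -1) / math.sqrt(q.shape[-1])
    weights = scores.softmax(dim=-1)        # (B, N, N): every token vs. every token
    return weights @ v, weights


def num_tokens(image_size, patch_size=16):
    """Number of patch tokens for a square image."""
    return (image_size // patch_size) ** 2


dim = 32
x = torch.randn(1, num_tokens(224), dim)
w_q, w_k, w_v = (torch.randn(dim, dim) / math.sqrt(dim) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(weights.shape)                       # torch.Size([1, 196, 196])
print(num_tokens(224), num_tokens(448))    # 196 784
```

Doubling the image side from 224 to 448 pixels quadruples the token count, so the N x N attention matrix grows by a factor of 16.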

1. Addressing Data Hunger: Knowledge Distillation and Self-Supervised Learning

DeiT (Data-efficient Image Transformers) [2021]: This work was pivotal in making ViTs practical for smaller datasets like ImageNet-1K. DeiT introduced a "distillation token" that allowed a ViT to learn from a pre-trained CNN "teacher" model. The ViT was trained to not only predict the correct label but also to mimic the output of the teacher, effectively transferring the teacher's inductive biases and knowledge. This significantly reduced the data requirement for ViTs to achieve competitive performance.

```python
# Conceptual example of the distillation token in DeiT
import torch
import torch.nn as nn


class DeiTTransformer(nn.Module):
    def __init__(self, num_patches, embed_dim, num_classes):
        super().__init__()
        # Example patch embedding: flattened 16x16 RGB patch -> embed_dim
        self.patch_embedding = nn.Linear(16 * 16 * 3, embed_dim)
        self.cls_token = nn.Parameter(torch.randn(1, 1, embed_dim))
        self.dist_token = nn.Parameter(torch.randn(1, 1, embed_dim))  # The distillation token
        # +2 positions for the cls and dist tokens
        self.pos_embedding = nn.Parameter(torch.randn(1, num_patches + 2, embed_dim))
        # A single encoder layer for brevity; batch_first so inputs are (B, N, D)
        self.transformer_encoder = nn.TransformerEncoderLayer(
            d_model=embed_dim, nhead=8, batch_first=True
        )
        self.head = nn.Linear(embed_dim, num_classes)
        self.dist_head = nn.Linear(embed_dim, num_classes)  # Head for distillation loss

    def forward(self, x):
        # x: flattened patches of shape (B, num_patches, 16 * 16 * 3)
        b = x.shape[0]
        patches = self.patch_embedding(x)
        cls = self.cls_token.expand(b, -1, -1)
        dist = self.dist_token.expand(b, -1, -1)
        tokens = torch.cat([cls, dist, patches], dim=1) + self.pos_embedding
        output = self.transformer_encoder(tokens)
        cls_output = output[:, 0]
        dist_output = output[:, 1]
        # Two outputs: one for classification, one for distillation
        return self.head(cls_output), self.dist_head(dist_output)
```
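The two outputs of such a model feed two loss terms. A minimal sketch of the hard-label variant of distillation is shown below, assuming the teacher's logits are already available; the function name and the equal 0.5 weighting are written from memory of the DeiT paper and should be treated as an approximation rather than the exact training recipe.

```python
import torch
import torch.nn.functional as F


def hard_distillation_loss(cls_logits, dist_logits, teacher_logits, labels):
    """Average of two cross-entropies: class head vs. ground truth,
    distillation head vs. the teacher's predicted (argmax) label."""
    teacher_labels = teacher_logits.argmax(dim=-1)
    loss_cls = F.cross_entropy(cls_logits, labels)
    loss_dist = F.cross_entropy(dist_logits, teacher_labels)
    return 0.5 * loss_cls + 0.5 * loss_dist


# Toy tensors standing in for model and teacher outputs (batch of 4, 10 classes).
labels = torch.tensor([0, 3, 7, 9])
cls_logits = torch.randn(4, 10)
dist_logits = torch.randn(4, 10)
teacher_logits = torch.randn(4, 10)
loss = hard_distillation_loss(cls_logits, dist_logits, teacher_logits, labels)
print(loss.item() >= 0.0)  # True
```

Because the distillation head is supervised by the teacher rather than the ground truth, the two heads can disagree, which is how the CNN's biases leak into the student.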

Self-Supervised Learning (SSL): This has been a game-changer for ViTs, allowing them to learn powerful representations from unlabeled data.

- DINO (Self-distillation with no labels) [2021]: DINO showed that ViTs trained with a self-distillation strategy could learn rich visual features from unlabeled data. A "student" ViT is trained to match the output of a "teacher" ViT that sees a different augmented view of the same image. The teacher is not trained separately; it is maintained as an exponential moving average of the student's weights. Remarkably, the self-attention maps of these ViTs highlight object boundaries without any segmentation supervision, demonstrating the value of attention maps for interpretability.

- MAE (Masked Autoencoders) [2021]: Inspired by BERT's masked language modeling, MAE masks a large portion (e.g., 75%) of image patches and trains the ViT to reconstruct the missing pixels. This forces the model to learn meaningful representations of the visible patches to infer the content of the masked ones. MAE pre-training has proven incredibly effective, allowing ViTs to achieve state-of-the-art performance even with smaller downstream datasets.
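Two small mechanisms sit at the core of these methods: a teacher that tracks the student as a moving average (DINO) and random patch masking (MAE). The sketch below shows one plausible way to write each. The function names, the momentum value, and the toy shapes are assumptions, and the rest of each training loop (augmentations, losses, decoder) is left out.

```python
import torch
import torch.nn as nn


@torch.no_grad()
def ema_update(teacher: nn.Module, student: nn.Module, momentum: float = 0.996):
    """DINO-style teacher update: teacher <- m * teacher + (1 - m) * student."""
    for p_t, p_s in zip(teacher.parameters(), student.parameters()):
        p_t.mul_(momentum).add_(p_s.detach(), alpha=1.0 - momentum)


def random_masking(tokens: torch.Tensor, mask_ratio: float = 0.75):
    """MAE-style masking: keep a random subset of patch tokens per sample.

    tokens: (B, N, D). Returns the visible tokens (B, N_keep, D), a boolean
    mask (B, N) where True marks a masked patch, and the kept indices.
    """
    b, n, d = tokens.shape
    num_keep = int(n * (1.0 - mask_ratio))
    noise = torch.rand(b, n, device=tokens.device)
    ids_keep = noise.argsort(dim=1)[:, :num_keep]      # random subset per sample
    visible = torch.gather(tokens, 1, ids_keep.unsqueeze(-1).expand(-1, -1, d))
    mask = torch.ones(b, n, dtype=torch.bool, device=tokens.device)
    mask[torch.arange(b, device=tokens.device).unsqueeze(1), ids_keep] = False
    return visible, mask, ids_keep


tokens = torch.randn(2, 196, 8)          # hypothetical patch embeddings
visible, mask, ids_keep = random_masking(tokens)
print(visible.shape)  # torch.Size([2, 49, 8])
```

With a 75% mask ratio only 49 of 196 tokens are passed on as visible, which is a large part of why this style of pre-training is comparatively cheap.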

2. Improving Efficiency and Hierarchical Processing: Swin Transformer

Swin Transformer (Shifted Window Transformer) [2021]: This was a major breakthrough, making ViTs suitable for dense prediction tasks like object detection and segmentation, and for processing larger images.

- Hierarchical Structure: Unlike the original ViT's single-resolution processing, Swin Transformers build hierarchical feature maps, much like CNNs. This means they process images at multiple scales, capturing both fine-grained and coarse-grained features.

- Windowed Attention: To address the quadratic complexity of global self-attention, Swin Transformers compute self-attention only within local, non-overlapping windows. This significantly reduces computation.

- Shifted Windows: To enable communication between different windows (which is crucial for capturing global context), Swin Transformers introduce a "shifted window" partitioning scheme in successive layers. This allows information to flow across window boundaries.
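Window partitioning and the shift between layers can be sketched with a reshape and `torch.roll`. This is a simplified illustration: the helper names and the 8x8 toy feature map are assumptions, and the attention mask that Swin applies to wrapped-around regions after the shift is omitted.

```python
import torch


def window_partition(x: torch.Tensor, window_size: int) -> torch.Tensor:
    """Split a (B, H, W, C) feature map into non-overlapping windows.

    Returns (B * num_windows, window_size * window_size, C); attention is
    then computed independently inside each window.
    """
    b, h, w, c = x.shape
    x = x.reshape(b, h // window_size, window_size, w // window_size, window_size, c)
    x = x.permute(0, 1, 3, 2, 4, 5)
    return x.reshape(-1, window_size * window_size, c)


def cyclic_shift(x: torch.Tensor, window_size: int) -> torch.Tensor:
    """Roll the feature map by half a window so the next layer's windows
    straddle the previous layer's window boundaries."""
    shift = window_size // 2
    return torch.roll(x, shifts=(-shift, -shift), dims=(1, 2))


x = torch.arange(64, dtype=torch.float32).reshape(1, 8, 8, 1)
regular = window_partition(x, window_size=4)
shifted = window_partition(cyclic_shift(x, window_size=4), window_size=4)
print(regular.shape)  # torch.Size([4, 16, 1])
```

In the shifted layout, the first window covers rows and columns 2 to 5 of the original map, so tokens that sat in four different windows now attend to each other.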

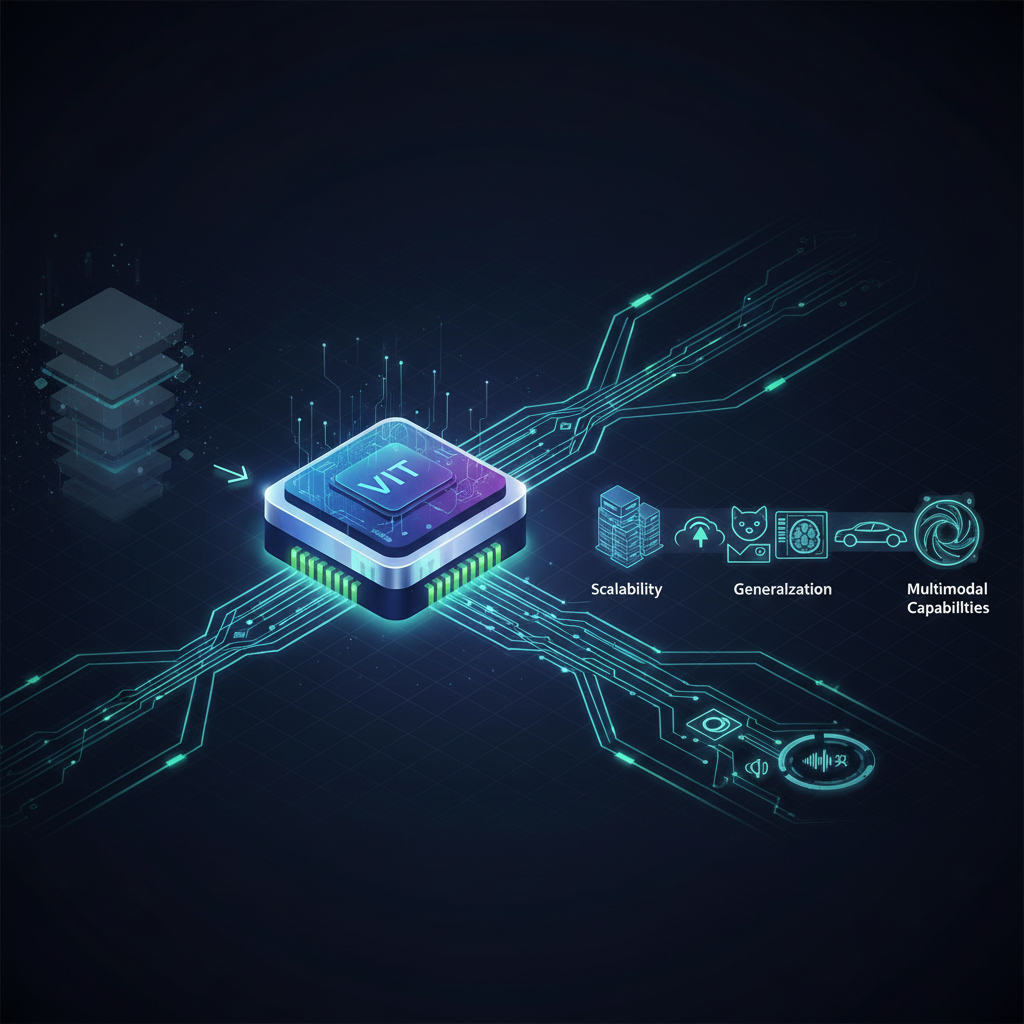

Benefits: Because the window size is fixed, Swin Transformers achieve linear computational complexity with respect to image size, making them highly efficient and scalable. They quickly became the backbone of choice for many state-of-the-art models across various computer vision tasks, demonstrating that CNN-like inductive biases (hierarchy, locality) could be effectively integrated into a Transformer architecture.
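A back-of-the-envelope comparison shows where the linear scaling comes from. The cost expressions below are the commonly quoted per-layer estimates for global and windowed attention on an h x w feature map with C channels and window size M; treat the constants as approximate, and the example sizes (56x56 map, 96 channels, 7x7 windows) as illustrative assumptions.

```python
def msa_cost(h: int, w: int, c: int) -> int:
    """Approximate cost of global self-attention: 4*hw*C^2 + 2*(hw)^2*C."""
    hw = h * w
    return 4 * hw * c * c + 2 * hw * hw * c


def wmsa_cost(h: int, w: int, c: int, m: int) -> int:
    """Approximate cost of windowed self-attention: 4*hw*C^2 + 2*M^2*hw*C."""
    hw = h * w
    return 4 * hw * c * c + 2 * m * m * hw * c


for side in (56, 112):
    ratio = msa_cost(side, side, 96) / wmsa_cost(side, side, 96, 7)
    print(side, f"global / windowed cost ratio: {ratio:.1f}")
```

Doubling the side length multiplies the windowed cost by exactly 4 (linear in the number of tokens), while the global cost grows faster because of the (hw)^2 term.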

3. Hybrid Approaches: Blending the Best of Both Worlds

Recognizing the strengths of both CNNs and Transformers, researchers began exploring hybrid architectures that combine their best attributes.

- ConViT (Convolutional Vision Transformers) [2021]: This work explored adding "soft convolutional inductive biases" to the self-attention mechanism, showing that a judicious blend can improve performance, especially with less data.

- MobileViT [2022]: Designed for resource-constrained environments, MobileViT combines the strengths of CNNs (local processing, parameter efficiency) and ViTs (global processing). It uses a lightweight MobileNet-like convolutional backbone for initial feature extraction and then integrates Transformer blocks for global reasoning on these features. This makes it highly efficient for deployment on mobile and edge devices.

- CoAtNet (Convolution and Attention Network) [2021]: This family of models systematically combines depth-wise convolutions and self-attention in a stacked manner. They demonstrated that by carefully integrating these two mechanisms, they could achieve superior performance across various model scales, showcasing the power of synergy.
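The general hybrid pattern, convolutions first for local features and attention afterwards for global mixing, can be sketched as follows. This is a generic illustration and not the architecture of ConViT, MobileViT, or CoAtNet; the class name, layer sizes, and the omission of positional embeddings are all assumptions made for brevity.

```python
import torch
import torch.nn as nn


class ConvStemTransformer(nn.Module):
    """Hypothetical hybrid: a small conv stem followed by one Transformer block."""

    def __init__(self, embed_dim=64, num_heads=4, num_classes=10):
        super().__init__()
        # Convolutional stem: local feature extraction with 4x downsampling.
        self.stem = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.Conv2d(32, embed_dim, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(embed_dim),
            nn.ReLU(),
        )
        # Transformer block: global reasoning over the conv feature map.
        self.block = nn.TransformerEncoderLayer(
            d_model=embed_dim, nhead=num_heads,
            dim_feedforward=2 * embed_dim, batch_first=True)
        self.head = nn.Linear(embed_dim, num_classes)

    def forward(self, images):
        feats = self.stem(images)                    # (B, D, H/4, W/4)
        tokens = feats.flatten(2).transpose(1, 2)    # (B, N, D)
        tokens = self.block(tokens)
        return self.head(tokens.mean(dim=1))         # average-pool the tokens


model = ConvStemTransformer()
logits = model(torch.randn(2, 3, 32, 32))
print(logits.shape)  # torch.Size([2, 10])
```

The stem shrinks a 32x32 image to an 8x8 map, so attention runs over 64 tokens instead of 1,024 pixels, which is the efficiency argument behind many hybrid designs.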

Practical Applications and Emerging Trends

The evolution of ViTs has had a profound impact across the AI landscape, driving innovation in numerous domains.

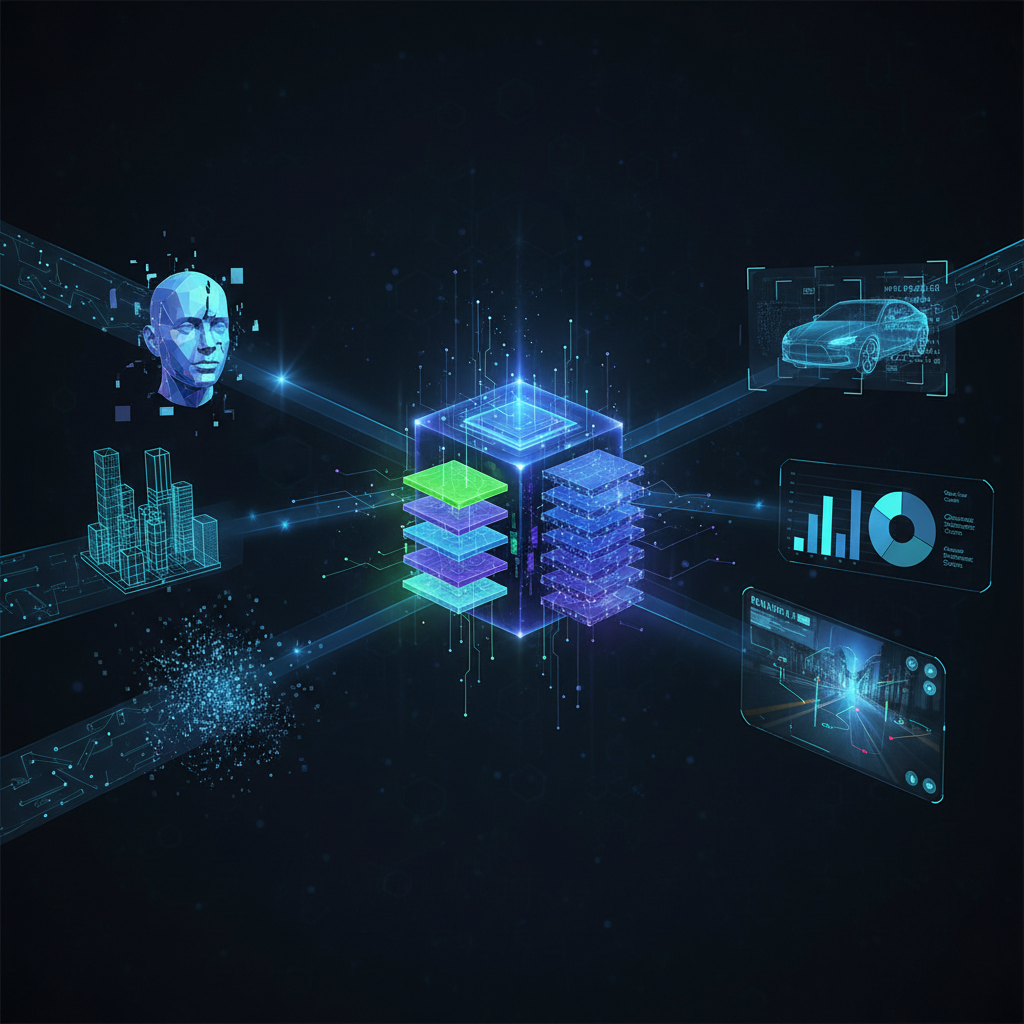

1. Foundation Models and Multimodal AI

ViTs (or their variants) are the bedrock of many large-scale multimodal foundation models.

- CLIP (Contrastive Language-Image Pre-training): CLIP uses a ViT (or ResNet) for image encoding and a Transformer for text encoding. It learns to associate images with their descriptive text captions, enabling zero-shot image classification and powerful image search.

- DALL-E, Stable Diffusion, Midjourney: These text-to-image generation models build on Transformer principles to varying degrees. The original DALL-E generates images with an autoregressive Transformer over text and image tokens, while Stable Diffusion conditions a diffusion model on a CLIP text encoder through cross-attention. Midjourney's architecture has not been publicly documented. In the publicly described systems, attention is what relates the text prompt to visual features, and ViT-like architectures are often used as components within the larger generative framework.
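CLIP's image-text association can be sketched as a symmetric contrastive loss over a batch of matched pairs, and zero-shot classification as a nearest-text lookup. The code below works on precomputed embeddings; the function names are hypothetical, and the fixed `logit_scale` stands in for the temperature that CLIP learns during training.

```python
import torch
import torch.nn.functional as F


def clip_contrastive_loss(image_emb, text_emb, logit_scale=100.0):
    """Symmetric cross-entropy over an image-text similarity matrix.

    Row i of image_emb and row i of text_emb are assumed to be a matched pair.
    """
    image_emb = F.normalize(image_emb, dim=-1)
    text_emb = F.normalize(text_emb, dim=-1)
    logits = logit_scale * image_emb @ text_emb.t()       # (B, B) cosine similarities
    targets = torch.arange(logits.shape[0], device=logits.device)
    loss_images = F.cross_entropy(logits, targets)        # each image picks its text
    loss_texts = F.cross_entropy(logits.t(), targets)     # each text picks its image
    return (loss_images + loss_texts) / 2


def zero_shot_classify(image_emb, class_text_emb):
    """Assign each image to the class whose text embedding is most similar."""
    sims = F.normalize(image_emb, dim=-1) @ F.normalize(class_text_emb, dim=-1).t()
    return sims.argmax(dim=-1)


# Random stand-ins for encoder outputs (batch of 8, embedding width 32).
image_emb, text_emb = torch.randn(8, 32), torch.randn(8, 32)
loss = clip_contrastive_loss(image_emb, text_emb)
preds = zero_shot_classify(image_emb, class_text_emb=torch.randn(5, 32))
print(preds.shape)  # torch.Size([8])
```

For zero-shot use, the class text embeddings would come from encoding prompts such as "a photo of a dog" with the text encoder, so new classes need no retraining.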

2. Medical Imaging

ViTs are showing immense promise in healthcare. Their ability to capture long-range dependencies is crucial for understanding complex anatomical structures and detecting subtle anomalies in medical images. Applications include:

- Disease Detection: Identifying cancerous cells or lesions in X-rays, CT scans, and MRIs.

- Tumor Segmentation: Precisely outlining tumors for treatment planning.

- Medical Image Analysis: Automated diagnosis and prognosis.

3. Robotics and Autonomous Driving

In highly dynamic and safety-critical environments, robust perception is paramount. ViTs are being integrated into perception systems for:

- 3D Object Detection: Identifying and localizing objects in 3D space from sensor data.

- Scene Understanding: Interpreting complex driving scenarios.

- Motion Prediction: Forecasting the movement of other vehicles and pedestrians.

4. Efficient ViTs for Edge Devices

The demand for AI on resource-constrained devices (smartphones, drones, IoT devices) is growing. Research is actively focused on developing smaller, faster, and more energy-efficient ViTs (e.g., MobileViT, EdgeViT, TinyViT) to enable on-device AI capabilities.

5. Video Transformers

Extending ViTs to video analysis involves adding temporal attention mechanisms or 3D patch embeddings to process sequences of frames. This opens doors for:

- Action Recognition: Identifying human actions in videos.

- Video Object Detection and Tracking: Following objects through video sequences.

- Event Understanding: Analyzing complex events in surveillance or sports footage.
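The "3D patch embedding" idea mentioned above can be sketched with a strided 3D convolution that turns small space-time blocks into tokens. The class name, the 2x16x16 block size, and the toy clip dimensions are assumptions for illustration; real video Transformers differ in how they then factor spatial and temporal attention.

```python
import torch
import torch.nn as nn


class TubeletEmbedding(nn.Module):
    """Hypothetical 3D patch embedding: one token per (t, p, p) space-time block."""

    def __init__(self, embed_dim=64, tubelet=(2, 16, 16)):
        super().__init__()
        # kernel == stride, so the blocks do not overlap.
        self.proj = nn.Conv3d(3, embed_dim, kernel_size=tubelet, stride=tubelet)

    def forward(self, video):
        # video: (B, C, T, H, W)
        x = self.proj(video)                    # (B, D, T/t, H/p, W/p)
        return x.flatten(2).transpose(1, 2)     # (B, N, D) token sequence


embed = TubeletEmbedding()
clip = torch.randn(1, 3, 8, 32, 32)             # 8 frames of 32x32 RGB
tokens = embed(clip)
print(tokens.shape)  # torch.Size([1, 16, 64])
```

Here 8 frames of 32x32 pixels become 4 x 2 x 2 = 16 tokens, which can be fed to the same kind of Transformer encoder used for images.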

6. Explainability

One of the inherent advantages of ViTs is their potential for improved interpretability. The attention maps within the Transformer can be visualized to show which parts of an image the model is "attending" to when making a decision. This offers a more direct and intuitive way to understand model reasoning compared to the often opaque feature maps of CNNs, aiding in debugging and building trust in AI systems.
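One simple way to produce such a visualization is to read off how strongly the [CLS] token attends to each patch in a given layer. The sketch below assumes the attention weights have already been extracted from a model as a tensor of shape (batch, heads, tokens, tokens) with [CLS] at index 0; the function name and the random stand-in tensor are hypothetical.

```python
import torch


def cls_attention_map(attn: torch.Tensor, grid_size: int) -> torch.Tensor:
    """Turn [CLS]-to-patch attention into a per-image heat map.

    attn: (B, heads, 1 + N, 1 + N) attention weights with [CLS] at index 0.
    Returns (B, grid_size, grid_size), normalized to sum to 1 per image.
    """
    cls_to_patches = attn[:, :, 0, 1:]          # (B, heads, N)
    heat = cls_to_patches.mean(dim=1)           # average over heads -> (B, N)
    heat = heat / heat.sum(dim=-1, keepdim=True)
    return heat.reshape(-1, grid_size, grid_size)


# Random stand-in for real attention weights: 14x14 patches plus [CLS].
attn = torch.randn(2, 4, 197, 197).softmax(dim=-1)
heat = cls_attention_map(attn, grid_size=14)
print(heat.shape)  # torch.Size([2, 14, 14])
```

The resulting 14x14 map can be upsampled to the input resolution and overlaid on the image. Averaging over heads is only one choice; inspecting heads individually often shows that they focus on different regions.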

Value for AI Practitioners and Enthusiasts

The rapid evolution of Vision Transformers makes this a critical area for anyone involved in AI.

For Practitioners:

- Model Selection: Understanding the nuances of different ViT variants (e.g., Swin for dense prediction, MobileViT for edge, MAE-trained ViTs for data efficiency) is crucial for selecting the optimal architecture for specific tasks and resource constraints.

- Pre-training Strategies: Leveraging self-supervised learning techniques like DINO and MAE is becoming essential for achieving state-of-the-art performance, especially when labeled data is scarce or expensive.

- Deployment Considerations: Awareness of efficient ViT designs is vital for deploying models in production, particularly on edge devices or in latency-sensitive applications.

- Multimodal AI: A solid grasp of ViT principles is fundamental for working with cutting-edge multimodal models like CLIP and Stable Diffusion, which are redefining how we interact with AI.

For Enthusiasts:

- Frontier Research: This field is at the forefront of AI research, offering a fascinating glimpse into how fundamental architectural changes can revolutionize an entire domain.

- Interdisciplinary Inspiration: The success of ViTs highlights the power of cross-pollination between different AI subfields (NLP and CV), inspiring new ideas and approaches.

- Real-World Impact: Witnessing how these models enable capabilities like text-to-image generation, advanced medical diagnostics, or more robust autonomous systems is incredibly exciting and showcases the tangible benefits of AI.

- Future-Proofing Knowledge: Understanding ViTs provides a strong foundation for comprehending future advancements in general-purpose AI and the development of increasingly powerful foundation models.

Conclusion

Vision Transformers represent a monumental shift in computer vision, moving beyond the long-held CNN-centric paradigm. What began as a bold experiment in applying NLP architectures to images has blossomed into a vibrant and rapidly evolving field. From addressing initial challenges like data hunger and computational complexity to integrating hierarchical processing and enabling multimodal AI, ViTs have demonstrated remarkable adaptability and power. Their continuous evolution, particularly in improving efficiency, reducing data requirements, and integrating into complex multimodal systems, solidifies their position as a cornerstone of modern visual AI. For anyone looking to understand the cutting edge of artificial intelligence, the journey of Vision Transformers offers an exciting and indispensable narrative.